Data science and machine learning are increasingly becoming fundamental competencies for addressing intricate real-world challenges, driving industry transformations, and delivering significant value across diverse domains.

As a result, numerous enterprises are making substantial investments in their data science teams and machine learning capabilities to implement predictive models capable of generating tangible business value for their users.

While data scientists can develop and train machine learning models with impressive predictive performance using relevant training data and offline holdout datasets, the true challenge lies in constructing an integrated machine learning system and ensuring its continuous operation in a production environment.

This is where the world of continuous integration and continuous delivery (CI/CD) meets the world of machine learning, and where Machine Learning Operations (MLOps) comes into the picture.

What is Machine Learning Operations (MLOps)?

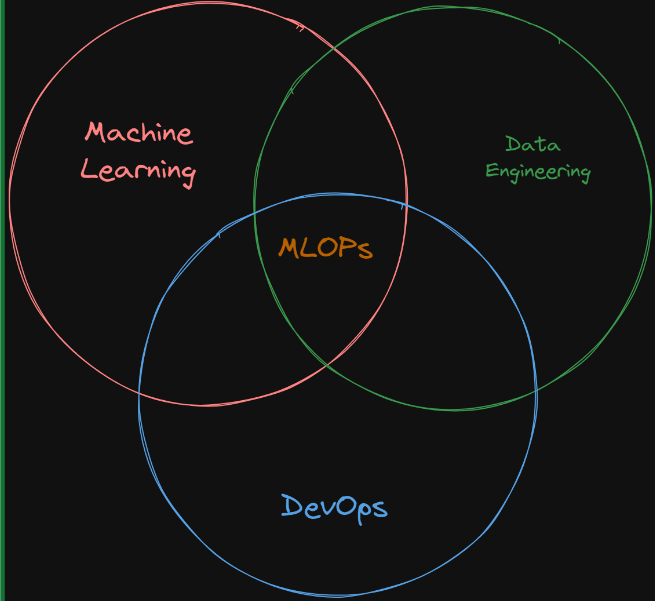

Machine Learning Operations (MLOps) refers to the set of practices and techniques aimed at streamlining the development, deployment, and management of machine learning models in production environments. It combines principles from machine learning and DevOps to enable efficient and reliable management of the machine learning lifecycle.

MLOps focuses on automating and standardizing processes related to data acquisition, model training, deployment, monitoring, and maintenance. It involves establishing robust pipelines and workflows that facilitate collaboration between data scientists, software engineers, and operations teams.

By implementing MLOps practices, organizations can ensure the reproducibility, scalability, and reliability of machine learning models, ultimately driving the successful integration of machine learning into business operations.

MLOps encompasses a range of activities, including version control of models and data, automated testing, continuous integration and deployment, infrastructure management, monitoring of deployed models, and feedback loops for model improvement. It aims to address challenges such as reproducibility, model drift, data drift, and performance monitoring that arise when transitioning machine learning models from development to production.

MLOps vs DevOps

DevOps and MLOps are two disciplines aimed at improving efficiency and reliability in software development and machine learning operations, respectively. While they share some similarities, they have key differences that stem from the unique challenges posed by machine learning workflows.

DevOps focuses on streamlining the software development lifecycle (SDLC) by promoting collaboration between development and operations teams. It aims to automate software delivery processes, ensure rapid feedback loops, and foster a culture of continuous integration and deployment. DevOps primarily manages code, configurations, and infrastructure, with a focus on version control, automated testing, and managing deployment pipelines.

MLOps addresses the challenges of deploying and managing machine learning models in production environments. It combines machine learning, software engineering, and operations principles to facilitate the end-to-end lifecycle of ML systems. MLOps places significant emphasis on data management, including preprocessing, versioning, and ensuring data quality. It also focuses on model management, including version control, tracking, and deployment.

DevOps pipelines typically involve building, testing, and deploying software applications, while MLOps pipelines are more intricate because of the nature of machine learning workflows. An MLOps pipeline spans data collection, preprocessing, model training, evaluation, deployment, and monitoring. Once a model is in production, the pipeline also measures its performance metrics and triggers retraining and updates when performance degrades or new data arrives.
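To make the stage sequence concrete, here is a minimal sketch in plain Python. Everything in it is a hypothetical placeholder rather than part of any MLOps framework: the stage function names, the toy threshold "model", the naive train/holdout split, and the `0.8` accuracy floor that triggers retraining. The deployment stage is omitted for brevity.

```python
from dataclasses import dataclass
from typing import List, Tuple

Example = Tuple[float, int]  # (feature value, label)


@dataclass
class ThresholdModel:
    """Toy one-feature classifier (hypothetical): predicts 1 when x >= threshold."""
    threshold: float

    def predict(self, x: float) -> int:
        return int(x >= self.threshold)


def collect() -> List[Example]:
    # Stand-in for data collection; a real pipeline would read from a source system.
    return [(0.1, 0), (0.4, 0), (0.35, 0), (0.6, 1), (0.8, 1), (0.9, 1), (1.7, 1)]


def preprocess(rows: List[Example]) -> List[Example]:
    # Drop rows whose feature falls outside the expected [0, 1] range.
    return [(x, y) for x, y in rows if 0.0 <= x <= 1.0]


def train(rows: List[Example]) -> ThresholdModel:
    # Place the threshold midway between the two class means.
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    return ThresholdModel((sum(pos) / len(pos) + sum(neg) / len(neg)) / 2)


def evaluate(model: ThresholdModel, rows: List[Example]) -> float:
    # Accuracy: share of rows where the prediction matches the label.
    return sum(model.predict(x) == y for x, y in rows) / len(rows)


def needs_retraining(live_accuracy: float, floor: float = 0.8) -> bool:
    # Monitoring hook: retrain when accuracy measured in production falls below the floor.
    return live_accuracy < floor


def run_pipeline() -> Tuple[ThresholdModel, float]:
    data = preprocess(collect())
    train_rows, holdout_rows = data[::2], data[1::2]  # naive split, for illustration only
    model = train(train_rows)
    return model, evaluate(model, holdout_rows)
```

In a real system each function would typically be a separate, independently versioned pipeline step, with `needs_retraining` fed by the monitoring stage rather than called by hand.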

In terms of skill sets and collaboration, DevOps requires collaboration between developers and operations teams, while MLOps demands collaboration between data scientists, software engineers, and operations teams. DevOps engineers possess a blend of software development, system administration, and automation skills. MLOps practitioners need expertise in machine learning algorithms, data engineering, and model deployment.

In summary, DevOps focuses on optimizing software development processes, while MLOps specializes in managing machine learning models in production. DevOps primarily handles code and infrastructure, while MLOps adds complexities related to data management, model monitoring, and continuous retraining. Both disciplines contribute to enhancing operational efficiency, reliability, and collaboration within their respective domains, driving innovation and value delivery in organizations.

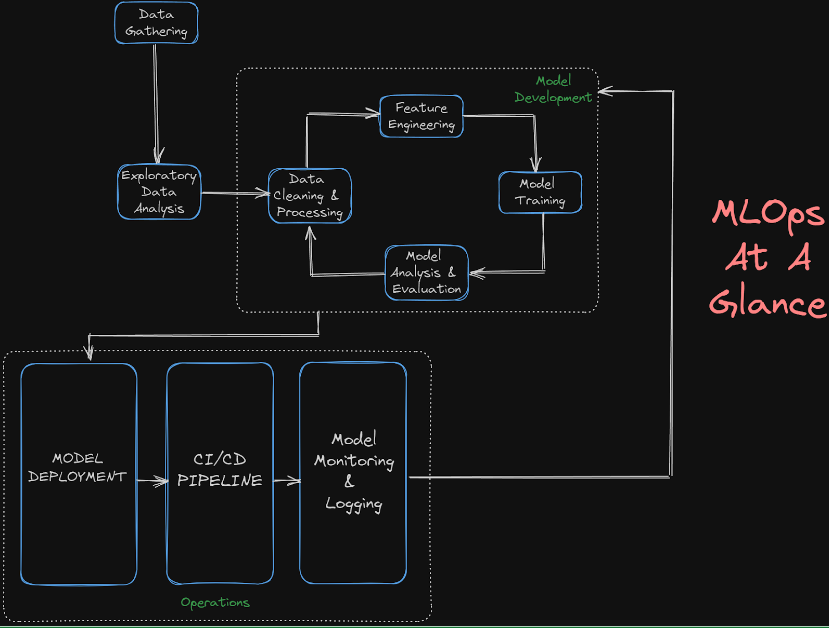

Components Of A Machine Learning Pipeline

To achieve effective continuous delivery in MLOps, it is essential to understand the key components of an ML pipeline.

These components include:

Data Collection and Preparation: High-quality data is crucial for training robust ML models. Data collection involves gathering relevant data from various sources, while data preparation focuses on cleaning, transforming, and preprocessing the data to make it suitable for model training.
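As an illustration of the preparation step, the sketch below drops incomplete rows and standardizes a numeric feature using z-scores. The field names, sample rows, and helper names (`clean`, `fit_scaler`, `transform`) are hypothetical; z-score scaling is just one common transformation, not a step the article prescribes.

```python
from statistics import mean, pstdev
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


def clean(rows: List[Row], required: List[str]) -> List[Row]:
    """Drop rows where any required field is missing (None)."""
    return [r for r in rows if all(r.get(k) is not None for k in required)]


def fit_scaler(values: List[float]) -> Dict[str, float]:
    """Learn z-score parameters. Fit on training data only, to avoid leaking holdout statistics."""
    sd = pstdev(values)
    return {"mean": mean(values), "std": sd if sd > 0 else 1.0}  # guard against a constant column


def transform(value: float, params: Dict[str, float]) -> float:
    """Apply previously learned scaling parameters to a single value."""
    return (value - params["mean"]) / params["std"]


raw = [
    {"age": 30.0, "income": 50_000.0},
    {"age": None, "income": 42_000.0},  # missing age: this row is dropped
    {"age": 50.0, "income": 70_000.0},
    {"age": 40.0, "income": 60_000.0},
]
rows = clean(raw, required=["age", "income"])
age_params = fit_scaler([r["age"] for r in rows])
scaled_ages = [transform(r["age"], age_params) for r in rows]
```

Keeping the fitted parameters (`age_params`) as an artifact matters: the serving path must apply exactly the same transformation the model saw during training.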

Model Training and Evaluation: This stage involves selecting appropriate ML algorithms, training the models using labeled data, and evaluating their performance against predefined metrics. It is important to track and version models during this process for reproducibility and traceability.
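The following sketch shows training, holdout evaluation, and run tracking in one place, assuming a simple least-squares line as the "model" and a plain dictionary as the run record. The data, the `"ols"` parameter label, and the helper names are hypothetical; in practice the record would usually go to an experiment-tracking system instead of a local variable.

```python
import hashlib
import json
from typing import Dict, List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def mean_absolute_error(xs: List[float], ys: List[float], slope: float, intercept: float) -> float:
    """Average absolute gap between predictions and true labels."""
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)


def run_id(train_data: Dict, params: Dict) -> str:
    """Deterministic ID: the same training data and parameters always yield the same ID."""
    payload = json.dumps({"data": train_data, "params": params}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


train_data = {"x": [1.0, 2.0, 3.0, 4.0], "y": [2.0, 4.0, 6.0, 8.0]}
holdout = {"x": [5.0, 6.0], "y": [10.5, 11.5]}  # held out from training

slope, intercept = fit_line(train_data["x"], train_data["y"])
run = {
    "run_id": run_id(train_data, {"model": "ols"}),
    "params": {"slope": slope, "intercept": intercept},
    "metrics": {"holdout_mae": mean_absolute_error(holdout["x"], holdout["y"], slope, intercept)},
}
```

Deriving the ID from the inputs, rather than from a timestamp, means two runs on identical data and settings are recognizably the same experiment.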

Model Deployment: Once a model is trained and validated, it needs to be deployed into production. This step involves containerizing the model, creating deployment artifacts, and setting up the necessary infrastructure to serve predictions.
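To show what "serving predictions" can look like, here is a minimal sketch of a prediction endpoint built only on the standard library's WSGI tools. The hard-coded `MODEL` dictionary stands in for a deployment artifact that would normally be loaded at startup, and the request format (`{"x": <number>}`) is a hypothetical choice. This would be the process packaged into a container image; it is not production-grade serving.

```python
import json
from wsgiref.simple_server import make_server

# Hypothetical model artifact; in practice this would be loaded from a file or model registry.
MODEL = {"slope": 2.0, "intercept": 0.0}


def handle(method: str, body: bytes):
    """Transport-independent request handling: returns (status line, JSON bytes)."""
    if method != "POST":
        return "405 Method Not Allowed", b'{"error": "use POST"}'
    try:
        x = float(json.loads(body)["x"])
    except (ValueError, KeyError, TypeError):  # bad JSON, missing key, or non-numeric x
        return "400 Bad Request", b'{"error": "body must be JSON with a numeric x"}'
    y = MODEL["slope"] * x + MODEL["intercept"]
    return "200 OK", json.dumps({"prediction": y}).encode("utf-8")


def app(environ, start_response):
    """WSGI adapter around handle()."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    status, payload = handle(environ["REQUEST_METHOD"], environ["wsgi.input"].read(length))
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


if __name__ == "__main__":
    # Development server on localhost:8000; not intended for production traffic.
    with make_server("127.0.0.1", 8000, app) as server:
        server.serve_forever()
```

Keeping `handle` separate from the WSGI adapter lets the prediction logic be unit-tested without starting a server. With the server running, `curl -X POST -d '{"x": 3}' localhost:8000` should return `{"prediction": 6.0}`.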

Continuous Integration and Testing: Continuous integration ensures that changes made to the ML pipeline are regularly merged and validated. Automated testing, including unit tests, integration tests, and performance tests, helps detect any issues early in the development cycle.
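One ML-specific test that fits into a CI run is a performance gate: the candidate model's evaluation metrics are compared with the current baseline, and the build fails if any metric regresses beyond a tolerance. The sketch below assumes higher-is-better metrics and a hypothetical `0.01` tolerance; the function and test names are illustrative.

```python
import unittest
from typing import Dict


def passes_quality_gate(candidate: Dict[str, float], baseline: Dict[str, float],
                        max_regression: float = 0.01) -> bool:
    """True if no tracked metric (higher is better) drops more than max_regression below baseline."""
    # A metric missing from the candidate counts as a failure (-inf is below any threshold).
    return all(candidate.get(name, float("-inf")) >= value - max_regression
               for name, value in baseline.items())


class QualityGateTest(unittest.TestCase):
    def test_small_noise_is_tolerated(self):
        self.assertTrue(passes_quality_gate({"accuracy": 0.905}, {"accuracy": 0.91}))

    def test_regression_fails(self):
        self.assertFalse(passes_quality_gate({"accuracy": 0.85}, {"accuracy": 0.91}))

    def test_missing_metric_fails(self):
        self.assertFalse(passes_quality_gate({}, {"accuracy": 0.91}))
```

In a CI job this module would be run with `python -m unittest <module_name>`, so a failing gate blocks the merge just like a failing unit test.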

Monitoring and Feedback: Continuous monitoring of deployed models allows organizations to detect anomalies, measure performance, and collect feedback from users. This feedback loop helps improve the models over time and enables proactive maintenance.
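A simple form of this feedback loop is to join each prediction with the true outcome once it becomes known and track accuracy over a sliding window. The class below is a hypothetical sketch; the window size and alert threshold are placeholder values that would be tuned per model.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions that have received feedback."""

    def __init__(self, window: int = 100, alert_below: float = 0.8):
        self.outcomes = deque(maxlen=window)  # oldest outcome is evicted when full
        self.alert_below = alert_below

    def record(self, prediction, actual) -> None:
        # Called when feedback (the true outcome) arrives for an earlier prediction.
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else float("nan")

    def should_alert(self) -> bool:
        # Only alert once the window is full, to avoid noisy readings from a handful of samples.
        return len(self.outcomes) == self.outcomes.maxlen and self.accuracy() < self.alert_below
```

For example, with `window=4` and `alert_below=0.75`, the outcomes correct, correct, wrong, correct give an accuracy of 0.75 and no alert; one more wrong prediction pushes the window to 0.5 and `should_alert()` returns `True`.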

Best Practices For Continuous Delivery In MLOps

To achieve successful continuous delivery in MLOps, consider the following best practices:

Version Control: Track and manage code, data, and model versions to ensure reproducibility and traceability.
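Git handles code well, but large data and model files are usually versioned by content hash. The sketch below illustrates that idea under simple assumptions: a manifest maps each artifact name to the SHA-256 of its contents and records the code version (for example, a Git commit SHA) it belongs to. The function names, file names, and the `"abc123"` commit ID are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Dict


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in 1 MiB chunks so large files never load fully into memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(artifacts: Dict[str, Path], code_version: str, out: Path) -> Dict[str, str]:
    """Pin the exact data/model contents used with a given code version."""
    manifest = {"code_version": code_version}
    manifest.update({name: file_digest(path) for name, path in artifacts.items()})
    out.write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


if __name__ == "__main__":
    # Demo with throwaway files; in a repository these would be the tracked data and model paths.
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp, "train.csv")
        data.write_text("x,y\n1,2\n")
        print(write_manifest({"train_data": data}, "abc123", Path(tmp, "manifest.json")))
```

Committing the small manifest to Git, while the large files live in object storage, ties every model back to the exact code and data that produced it.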

Automated Testing: Implement a comprehensive suite of tests to validate the functionality and performance of ML models and pipelines.

Infrastructure as Code: Define the deployment environment in version-controlled files (e.g., Dockerfiles, Kubernetes manifests, or Terraform configurations) so that it can be created and managed consistently across environments.

Continuous Integration: Integrate changes made by data scientists and developers regularly, ensuring that the pipeline is stable and functional.

Continuous Deployment: Automate the deployment process to rapidly and reliably release models into production.
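One widely used way to release models both rapidly and safely is a canary rollout: the new model first serves a small, stable share of traffic, and that share grows only while its metrics stay healthy. The sketch below is a hypothetical illustration of the routing and promotion logic, not something the practices above mandate; the function names, bucket count, and step size are assumptions.

```python
import hashlib


def route(request_id: str, canary_fraction: float) -> str:
    """Deterministically send roughly `canary_fraction` of IDs to the candidate model."""
    # A stable hash (unlike Python's per-process salted hash()) keeps each ID on the
    # same model version across requests and service restarts.
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 10_000
    return "candidate" if bucket < canary_fraction * 10_000 else "stable"


def next_fraction(current: float, canary_healthy: bool, step: float = 0.25) -> float:
    """Widen the rollout while the canary is healthy; roll back to 0 otherwise."""
    return min(1.0, current + step) if canary_healthy else 0.0
```

Here "healthy" would come from the monitoring stage, for example error rate and latency of the candidate compared with the stable model over the same period.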

Monitoring and Alerting: Set up monitoring systems to detect anomalies in model performance, data drift, and infrastructure health.
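For data drift, one common statistic is the Population Stability Index (PSI), which compares how a feature's values are distributed across bins in a reference sample (e.g., training data) versus live traffic. The sketch below uses equal-width bins over the reference range; the function name, bin count, and `eps` smoothing are assumptions. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but these cutoffs are conventions rather than a standard.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            i = min(bins - 1, max(0, int((v - lo) / width)))
            counts[i] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

An alerting job could compute this per feature on a schedule and raise an alert when the value crosses the chosen threshold.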

Challenges In MLOps

Implementing MLOps practices comes with several challenges that need to be addressed. Some of these challenges include:

Data Management and Quality: Ensuring high-quality data for training and deploying ML models is a critical challenge. Data acquisition, preprocessing, cleaning, and maintaining data integrity can be complex tasks, particularly when dealing with large-scale and diverse data sources.
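A first line of defense for data quality is validating each incoming record against an expected schema before it reaches training or inference. The schema below (field names, types, and ranges) is entirely hypothetical, as are the helper names; dedicated validation libraries offer richer versions of the same idea.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> (expected type, minimum, maximum); None means unbounded.
SCHEMA: Dict[str, Tuple[type, Any, Any]] = {
    "age": (int, 0, 120),
    "income": (float, 0.0, None),
    "country": (str, None, None),
}


def validate(row: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems; an empty list means the row is valid."""
    problems = []
    for field, (kind, lo, hi) in SCHEMA.items():
        if field not in row or row[field] is None:
            problems.append(f"{field}: missing")
            continue
        value = row[field]
        if not isinstance(value, kind):
            problems.append(f"{field}: expected {kind.__name__}, got {type(value).__name__}")
            continue
        if lo is not None and value < lo:
            problems.append(f"{field}: {value} < {lo}")
        if hi is not None and value > hi:
            problems.append(f"{field}: {value} > {hi}")
    return problems


def batch_error_rate(rows: List[Dict[str, Any]]) -> float:
    """Share of rows failing validation; a pipeline could halt when this exceeds a threshold."""
    return sum(bool(validate(r)) for r in rows) / len(rows) if rows else 0.0
```

Returning readable messages instead of a bare boolean makes it easier to trace a bad batch back to its source.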

Reproducibility and Versioning: Reproducing ML experiments and ensuring consistent results across different environments can be challenging. Managing version control for models, code, and data is crucial to enable reproducibility and traceability.
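A small but frequent source of irreproducibility is unseeded randomness in data splits and training. The sketch below uses a private, explicitly seeded generator for a train/test split; the function name and parameters are hypothetical. Note that Python only guarantees the same sequence for the same seed within compatible versions, and libraries such as NumPy or deep learning frameworks keep their own generators that must be seeded separately.

```python
import random
from typing import List, Sequence, Tuple


def shuffled_split(items: Sequence[int], test_fraction: float, seed: int) -> Tuple[List[int], List[int]]:
    """Train/test split that is identical for the same seed and Python version."""
    rng = random.Random(seed)  # private generator: unaffected by other code calling random.*
    shuffled = list(items)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]
```

Recording the seed alongside the code and data versions lets anyone rerun the split exactly as it was.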

Scalability and Infrastructure: Scaling ML models and infrastructure to handle large volumes of data and high traffic can be a significant challenge. Organizations need to design and manage robust and scalable infrastructure, including distributed computing, storage, and deployment platforms.

Model Monitoring and Maintenance: Monitoring the performance of deployed ML models and detecting anomalies or degradation over time is crucial. Model maintenance, including retraining, updating, and addressing concept drift, is an ongoing challenge to ensure the continued effectiveness of models in dynamic production environments.

Collaboration between Teams: Effective collaboration between data scientists, software engineers, and operations teams can be challenging due to differing skill sets, terminology, and priorities. Establishing clear communication channels, aligning objectives, and fostering cross-functional collaboration are essential for successful MLOps implementation.

Regulatory and Ethical Considerations: Compliance with data privacy regulations and ethical considerations surrounding the use of sensitive data can pose challenges in MLOps. Organizations need to implement processes and safeguards to ensure the legal and ethical use of data throughout the ML lifecycle.
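One common safeguard is pseudonymizing direct identifiers before data enters the ML pipeline. The sketch below replaces selected fields with a keyed hash (HMAC-SHA-256), so records can still be joined on the pseudonym, while someone without the key cannot link values back by hashing guessed identifiers. The field names and helpers are hypothetical, and the key would come from a secrets manager rather than code. Pseudonymization is not anonymization: regulations such as the GDPR generally still treat pseudonymized data as personal data.

```python
import hashlib
import hmac
from typing import Dict, Iterable


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash of an identifier: stable for a given key, so it still works as a join key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub(record: Dict[str, str], pii_fields: Iterable[str], key: bytes) -> Dict[str, str]:
    """Return a copy of the record with the listed PII fields pseudonymized."""
    pii = set(pii_fields)
    return {k: pseudonymize(v, key) if k in pii else v for k, v in record.items()}
```

Using a keyed hash rather than a plain SHA-256 matters because identifiers like email addresses are easy to guess and hash in bulk.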

Continuous Learning and Improvement: Promoting a culture of continuous learning and improvement within the MLOps framework is crucial. Encouraging feedback loops, fostering experimentation, and incorporating user feedback can help organizations enhance their ML models and processes over time.

Addressing these challenges requires a combination of technical expertise, organizational alignment, and continuous improvement. By understanding and actively mitigating these challenges, organizations can achieve successful implementation and operation of MLOps practices, leading to more effective and efficient deployment of ML models in production environments.

Conclusion

MLOps is a critical discipline for organizations looking to leverage the full potential of machine learning in their operations. Continuous delivery and automation pipelines are key aspects of MLOps, enabling seamless integration and deployment of ML models.

By adopting best practices such as version control, automated testing, infrastructure automation, and continuous integration and deployment, organizations can achieve efficient and reliable ML pipeline management.

Embracing MLOps practices not only improves productivity and collaboration between data scientists and operations teams but also helps ensure the consistent delivery of high-quality ML models to production environments. With MLOps, organizations can scale their ML initiatives and drive innovation in a more agile and sustainable manner.
