In the era of data-driven decision-making, deploying machine learning models has become a fundamental skill for data scientists and machine learning engineers. It’s the process that allows your models to be utilized in practical, real-world applications, making them accessible to end-users through web services or APIs. This article will walk you through deploying a machine learning model using Flask, a Python web framework known for its simplicity and elegance. By the end, you’ll understand how to take a model from a development environment and deploy it into a production-ready web application.

Deployment of Machine Learning Models: A Basic Overview

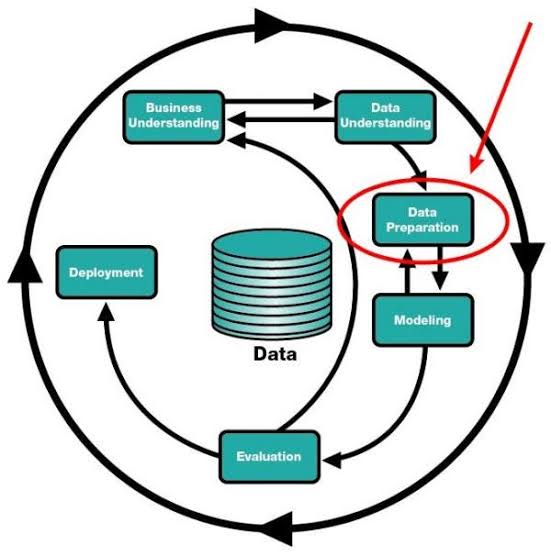

The journey from training a machine learning model to deploying it in a production environment is often underestimated. While the focus is usually on model accuracy and performance during the training phase, deployment is what actually transforms a model into an invaluable asset for making predictions in real-time applications. Flask, with its minimalistic yet powerful approach, provides an ideal platform for beginners and professionals to deploy machine learning models efficiently.

Prerequisites

Before we dive in, ensure you have:

- A basic understanding of Python programming.

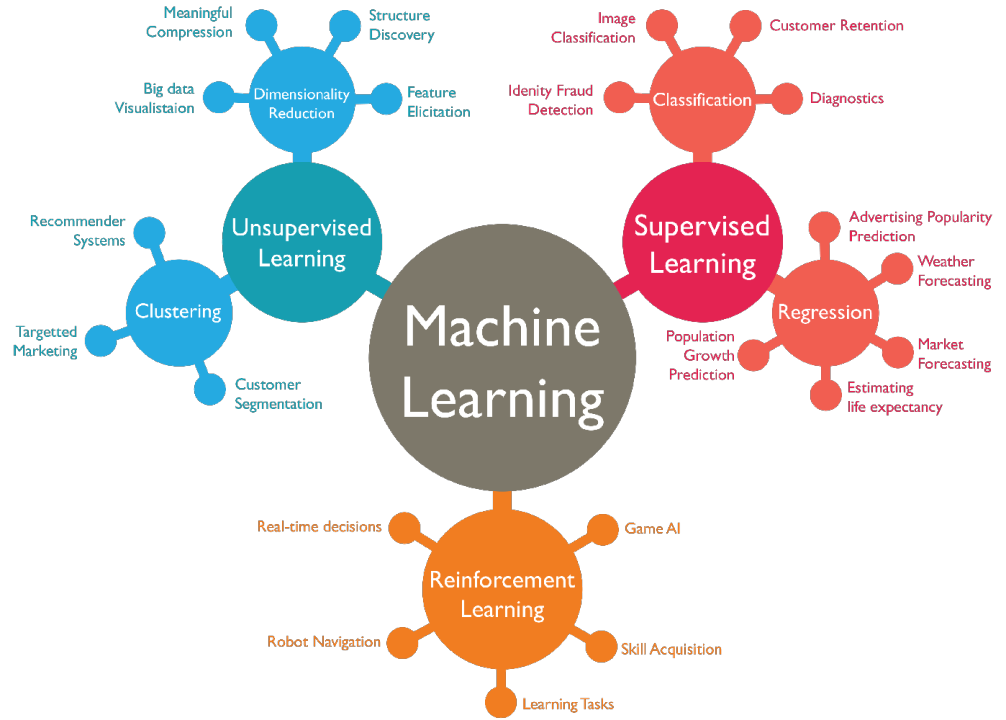

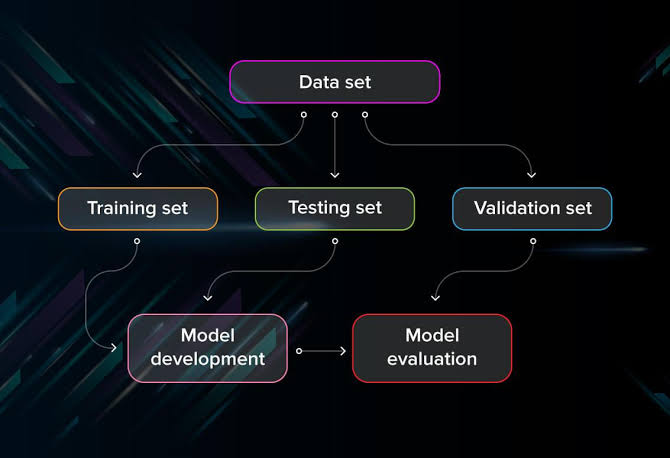

- Familiarity with machine learning concepts and a trained model at hand.

- Flask installed in your Python environment (pip install flask).

- Postman or any API testing tool for testing your deployment.

All set? Let us get started then!

Preparing Your Model for Deployment

Step 1: Save Your Trained Model

After training your machine learning model, the first step towards deployment is saving it to a file. This allows you to load the trained model into your Flask application without retraining. We’ll use joblib, a popular choice for serializing Python objects that contain large NumPy arrays, as many machine learning models do.

python

# joblib is now a standalone package; sklearn.externals.joblib was removed from scikit-learn
import joblib

# Assume `model` is your trained model variable
joblib.dump(model, 'model.pkl')

This code example saves your model to a file named model.pkl in the current directory. Ensure your trained model is saved before proceeding to the next steps.

Step 2: Load Your Model in Flask

Start by creating a new Python file for your Flask application; let’s name it app.py. This file will contain the code to initialize your Flask application and load your trained model using joblib.load.

python

from flask import Flask
import joblib

# Create the Flask application instance
app = Flask(__name__)

# Load the trained model once, at startup
model = joblib.load('model.pkl')

With these lines of code, you have created a Flask application instance and loaded your trained model for future predictions.

Setting Up Your Flask Application

Step 1: Define a Route for Predictions

A route in Flask is a URL endpoint that triggers a specific function when accessed. We will define a route /predict where users can send data to get predictions from your model.

python

@app.route('/predict', methods=['POST'])
def predict():
    # The prediction logic will be added in the next step
    return "Prepare to receive predictions"

This setup creates a foundation for receiving prediction requests. The next step replaces this placeholder function with one that processes these requests.

Step 2: Extracting Data from Requests

To make predictions, your model needs data. This data will be sent to the Flask application as part of the request. Flask’s request object allows you to extract this data easily.

python

from flask import request, jsonify

@app.route('/predict', methods=['POST'])
def predict():
    data = request.get_json(force=True)  # Parse the POST body as JSON
    features = data['features']  # Input features for a single sample
    prediction = model.predict([features])  # predict expects a list of samples
    return jsonify(prediction=prediction.tolist())

Here, we assume the JSON data sent to /predict includes a key named features, which contains the input features for making a prediction. The model’s prediction is then returned as a JSON response.
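The handler above trusts that the request body is well formed. As a minimal sketch of how the input could be checked first, assuming the model expects one flat list of numbers, consider the helper below. The name parse_features and the expected_length parameter are hypothetical and not part of the original application.

```python
from numbers import Real


def parse_features(payload, expected_length=None):
    """Return the 'features' list from a decoded JSON payload, or raise ValueError.

    Hypothetical helper: assumes the model takes one flat list of numbers.
    """
    if not isinstance(payload, dict) or "features" not in payload:
        raise ValueError("request body must be a JSON object with a 'features' key")

    features = payload["features"]
    if not isinstance(features, list) or not features:
        raise ValueError("'features' must be a non-empty list")

    for value in features:
        # bool is a subclass of int, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, Real):
            raise ValueError("every feature must be a number")

    if expected_length is not None and len(features) != expected_length:
        raise ValueError(f"expected {expected_length} features, got {len(features)}")

    return [float(value) for value in features]
```

Inside predict(), one option is to call parse_features(data) in a try block and return jsonify(error=str(exc)) with a 400 status code when it raises ValueError, so that malformed requests get a clear message instead of a server error.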

Testing Your Application Locally

Running Your Flask Application

To test your Flask application locally, run it using the following command in your terminal:

bash

FLASK_APP=app.py flask run

This command starts a local web server. By default, your app will be accessible at http://127.0.0.1:5000/.

Testing the Prediction Endpoint

To test the /predict endpoint, you can use Postman or a similar tool to send a POST request to http://127.0.0.1:5000/predict. The request should contain JSON data with the structure expected by your prediction function. If everything is set up correctly, you should receive a prediction in response.
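If you prefer a script to a GUI tool, the same request can be sent from Python. The sketch below uses only the standard library and assumes the app is running at the default local address and reads a features key, as in the handler above. The function names are illustrative.

```python
import json
import urllib.request

DEFAULT_URL = "http://127.0.0.1:5000/predict"


def build_predict_request(features, url=DEFAULT_URL):
    """Build a POST request whose JSON body matches what /predict reads."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_predict_request(features, url=DEFAULT_URL):
    """Send the request and return the decoded JSON response."""
    request = build_predict_request(features, url)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the Flask server running, calling send_predict_request with a list of feature values appropriate for your model should return a dictionary containing a prediction key.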

Preparing for Production

Step 1: Ensuring Security

Security is paramount when deploying applications to production. Flask’s development server is not suitable for production. Here are a few tips to enhance security:

- Use a production WSGI server like Gunicorn.

- Set a strong SECRET_KEY in your Flask application.

- Enable HTTPS to encrypt data in transit.

Step 2: Environment Variables

Sensitive information and configuration that may vary between development and production should be managed using environment variables. This includes database URLs, secret keys, and any third-party API keys your application might use.
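As a rough illustration, settings can be read from the environment once at startup. The sketch below assumes the variable names SECRET_KEY, MODEL_PATH, and DATABASE_URL; only SECRET_KEY is a setting Flask itself recognizes, and the other two names, like the load_config function, are hypothetical.

```python
import os


def load_config(environ=os.environ):
    """Read settings from environment variables.

    SECRET_KEY is treated as required; MODEL_PATH and DATABASE_URL are
    illustrative names with a default and an optional value respectively.
    """
    secret_key = environ.get("SECRET_KEY")
    if not secret_key:
        raise RuntimeError("SECRET_KEY environment variable is not set")

    return {
        "SECRET_KEY": secret_key,
        "MODEL_PATH": environ.get("MODEL_PATH", "model.pkl"),
        "DATABASE_URL": environ.get("DATABASE_URL"),
    }
```

In app.py, the result could be applied with app.config.update(load_config()), and the model path read from app.config instead of being hard-coded. Failing at startup when the secret key is missing is a deliberate choice here: it surfaces a misconfigured environment before any request is served.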

Deploying Your Flask Application

Option 1: Heroku

Heroku is a cloud platform that makes it easy to deploy Flask applications. Here’s a brief overview of deploying to Heroku:

Create a Procfile in your project’s root directory with the following content to tell Heroku how to run your app:

makefile

web: gunicorn app:app

Use the Heroku CLI to create a new app and push your code.

Option 2: AWS Elastic Beanstalk

AWS Elastic Beanstalk offers more control and scalability for your Flask application. The deployment process involves packaging your application and using the Elastic Beanstalk CLI or Management Console to deploy it.

Monitoring and Maintenance

Once deployed, it’s essential to monitor your application for any errors or performance issues. Use tools like Sentry, Loggly, or AWS CloudWatch to keep an eye on your application’s health. Regularly update your application’s dependencies and review its security posture to mitigate potential vulnerabilities.
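Whichever monitoring tool you choose, it helps if the application emits useful log lines in the first place. The following is a minimal sketch using Python's standard logging module; the logger name predict_service and the log_duration decorator are illustrative assumptions, not part of any of the tools mentioned above.

```python
import functools
import logging
import time

logger = logging.getLogger("predict_service")


def log_duration(func):
    """Log how long each call takes, and log the traceback if it raises."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        except Exception:
            # Record the full traceback, then let the error propagate
            logger.exception("%s failed", func.__name__)
            raise
        finally:
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info("%s took %.1f ms", func.__name__, elapsed_ms)

    return wrapper
```

To use it, place @log_duration directly above def predict(), below the @app.route line, and call logging.basicConfig(level=logging.INFO) at startup so the messages are actually written out. Because the wrapper uses functools.wraps, the function keeps its original name, which Flask uses as the endpoint name.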

Conclusion

Deploying a machine learning model with Flask is a rewarding process that bridges the gap between data science and practical application. By following this guide, you’ve learned how to prepare your model, set up a Flask application, test it locally, and deploy it to a production environment. Remember, deployment is an ongoing process. Continuously monitor, update, and maintain your application to ensure it remains secure, efficient, and valuable to its users.
