In today’s data-driven world, the quality and reliability of data play a crucial role in making informed decisions and gaining meaningful insights. Imagine a scenario where a business relies on flawed data for critical decisions; the consequences could be detrimental.

This is where data quality and validation come in. In this blog post, we will explore the key concepts of data quality and validation, along with techniques that help ensure data accuracy, completeness, and consistency.

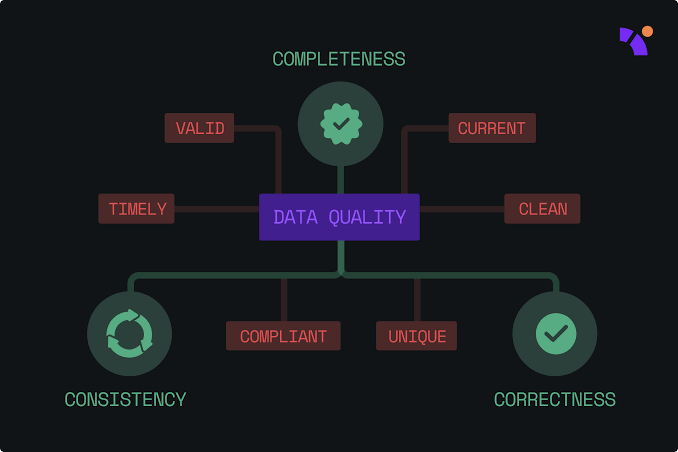

Understanding Data Quality

Data Accuracy

Data accuracy refers to the extent to which data correctly represents the real-world entities it describes. Ensuring accurate data is essential for trustworthy analysis and decision-making. Various factors can lead to data accuracy issues, including human errors during data entry, system glitches, or data integration problems. To illustrate, let’s consider a Python example to identify and correct data accuracy issues.

python

import pandas as pd

# Load the dataset

data = pd.read_csv('sales_data.csv')

# Identify and correct data accuracy issues

data['price'] = data['price'].round(2)  # Round prices to two decimal places

data['quantity'] = data['quantity'].clip(lower=0)  # Clamp negative quantities to zero

In this example, we’ve rounded prices to two decimal places and ensured that quantities are non-negative, addressing potential accuracy issues.
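Correcting values in place can hide how often a problem occurs, so it is often useful to flag suspicious rows first. The sketch below is one possible way to do that; the small DataFrame is made-up sample data standing in for sales_data.csv, and the `price_invalid` and `quantity_invalid` column names are hypothetical.

```python
import pandas as pd

# Made-up sample data standing in for sales_data.csv
sales = pd.DataFrame({
    "price": [19.999, 5.5, -3.0, 120.0],
    "quantity": [2, -1, 4, 0],
})

# Flag rows that break basic accuracy rules before any correction is applied
sales["price_invalid"] = sales["price"] < 0
sales["quantity_invalid"] = sales["quantity"] < 0

# Keep only the rows where at least one rule is violated
flagged = sales[sales["price_invalid"] | sales["quantity_invalid"]]
print(flagged)
```

Reviewing the flagged rows before rounding or clamping makes it easier to tell a one-off typo from a systematic problem in the source system.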

Data Completeness

Data completeness is the extent to which all required data points are present. Incomplete data can lead to biased analysis and incorrect conclusions. Common reasons for incomplete data include missing values, data extraction problems, or incomplete user inputs. Let’s explore another coding example that uses pandas in Python to detect and handle missing data.

python

# Check for missing values

missing_values = data.isnull().sum()

# Handle missing values

data.dropna(subset=['customer_name'], inplace=True)  # Remove rows with missing customer names

data['order_date'] = data['order_date'].fillna(pd.to_datetime('today'))  # Fill missing order dates with the current date

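Here, we’ve removed rows with missing customer names and filled missing order dates with the current date, so neither column contains gaps. Keep in mind that a placeholder date makes the column complete but not necessarily accurate, so the right fill strategy depends on how the field is used downstream.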
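Before deciding how to handle gaps, it helps to see how complete each column actually is. The following is a minimal sketch of a per-column completeness check; the `orders` DataFrame and its values are invented for illustration.

```python
import pandas as pd

# Invented sample data with a few missing values
orders = pd.DataFrame({
    "customer_name": ["Ana", None, "Chen", "Dee"],
    "order_date": ["2024-01-05", "2024-01-06", None, None],
    "price": [10.0, 12.5, 8.0, 3.0],
})

# Share of non-missing values per column, expressed as a percentage
completeness = orders.notna().mean() * 100
print(completeness.round(1))
```

A column with low completeness is usually a better candidate for investigation at the source than for blanket imputation.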

Data Consistency

Data consistency involves maintaining uniformity and coherence across datasets. Inconsistent data, such as different formats for the same attribute or duplicate records, can lead to confusion and errors in analysis. Let’s explore a SQL query that identifies duplicate records in a database.

sql

-- Identify duplicate records based on customer email

SELECT email, COUNT(*) AS duplicate_count

FROM customers

GROUP BY email

HAVING COUNT(*) > 1;

This query identifies duplicate customer records based on email addresses, allowing you to take corrective actions.
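To try this kind of check end to end, the sketch below runs it against an in-memory SQLite database using Python's built-in sqlite3 module. The table contents are invented, and keeping the row with the lowest `customer_id` is just one possible de-duplication policy, not a recommendation for every dataset.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO customers (customer_id, email) VALUES (?, ?)",
    [(1, "a@example.com"), (2, "b@example.com"), (3, "a@example.com")],
)

# List every row whose email appears more than once
duplicate_rows = conn.execute(
    """
    SELECT customer_id, email
    FROM customers
    WHERE email IN (
        SELECT email FROM customers GROUP BY email HAVING COUNT(*) > 1
    )
    ORDER BY email, customer_id
    """
).fetchall()
print(duplicate_rows)

# Assumed policy: keep the lowest customer_id for each email, delete the rest
conn.execute(
    """
    DELETE FROM customers
    WHERE customer_id NOT IN (
        SELECT MIN(customer_id) FROM customers GROUP BY email
    )
    """
)
remaining = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()
print(remaining)
conn.close()
```

Listing the full duplicate rows before deleting anything gives you a chance to check whether the records really describe the same customer.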

Techniques for Data Validation

Data Profiling

Data profiling involves analyzing and summarizing data to understand its characteristics and quality. It helps in identifying anomalies, inconsistencies, and patterns in the data. One popular tool for data profiling in Python is ydata-profiling, the package formerly published as pandas-profiling. Let us see how we can generate a data profile using this tool.

python

from ydata_profiling import ProfileReport  # Successor to the pandas_profiling package

# Generate data profile

profile = ProfileReport(data)

profile.to_file("data_profile_report.html")

This code generates an HTML report containing insights about the data’s structure, statistics, and potential issues.
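When a full report is more than you need, a rough profile can be assembled with plain pandas. This is only a sketch of the idea, not a replacement for a dedicated profiling tool; the `df` DataFrame and its columns are invented sample data.

```python
import pandas as pd

# Invented sample data
df = pd.DataFrame({
    "price": [10.0, 12.5, None, 8.0],
    "region": ["north", "south", "south", None],
})

# One row per column: data type, missing count, and number of distinct values
summary = pd.DataFrame({
    "dtype": df.dtypes.astype(str),
    "missing": df.isna().sum(),
    "unique": df.nunique(),
})
print(summary)

# Basic descriptive statistics for the numeric columns
print(df.describe())
```

A summary like this is quick enough to run on every load, which makes it a handy first check before generating a heavier report.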

Data Validation Rules

Defining data validation rules based on business requirements ensures that the data conforms to expected standards. Regular expressions are powerful tools for specifying validation patterns. Here’s an example of using regular expressions in Python to validate email addresses.

python

import re

def validate_email(email):

    # The top-level domain length is left open-ended so longer ones such as .museum pass

    pattern = r'[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}'

    return re.fullmatch(pattern, email) is not None

# Test the validation function

email = "example@email.com"

if validate_email(email):

    print("Valid email address")

else:

    print("Invalid email address")

This function uses a regular expression pattern to validate an email address’s format.
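Validation rules rarely stop at a single field. One way to organize several of them is a small rule table that maps each field to a check, as sketched below. The field names, the thresholds, and the `RULES` and `validate_record` names are all hypothetical, chosen only to illustrate the pattern.

```python
import re

EMAIL_RE = re.compile(r"[a-zA-Z0-9._%+-]+@[a-zA-Z0-9.-]+\.[a-zA-Z]{2,}")

# Hypothetical rules: field name -> function that returns True when the value is valid
RULES = {
    "email": lambda v: isinstance(v, str) and EMAIL_RE.fullmatch(v) is not None,
    "quantity": lambda v: isinstance(v, int) and v >= 0,
    "price": lambda v: isinstance(v, (int, float)) and v > 0,
}

def validate_record(record):
    """Return the names of fields that are missing or break their rule."""
    return [
        field
        for field, is_valid in RULES.items()
        if field not in record or not is_valid(record[field])
    ]

records = [
    {"email": "ana@example.com", "quantity": 2, "price": 9.99},
    {"email": "not-an-email", "quantity": -1, "price": 4.50},
]
for record in records:
    print(record["email"], validate_record(record))
```

Keeping the rules in one table means a new business requirement becomes a one-line addition instead of another branch buried in the code.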

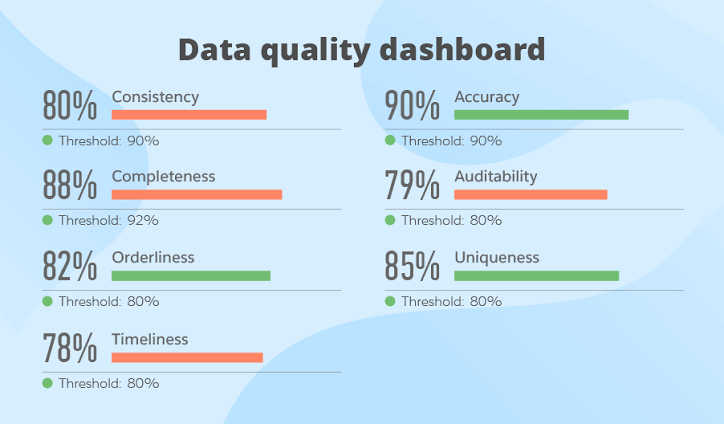

Data Quality Metrics

Quantifying data quality helps in measuring the effectiveness of validation efforts. Common data quality metrics include accuracy rate, completeness rate, and consistency index. Let’s calculate the accuracy rate using a simple Python example.

python

total_records = len(data)

accurate_records = data['is_correct'].eq(True).sum()  # Count rows flagged as correct

accuracy_rate = (accurate_records / total_records) * 100

print(f"Accuracy Rate: {accuracy_rate:.2f}%")

This code calculates and prints the accuracy rate based on an “is_correct” column, which the example assumes is a boolean flag marking each record as verified.
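The other metrics can be computed in a similar way. The sketch below shows a completeness rate and, as a stand-in for a consistency measure, a uniqueness rate for email addresses. These definitions are assumptions made for illustration, since teams define such metrics differently, and the sample data is invented.

```python
import pandas as pd

# Invented sample data
df = pd.DataFrame({
    "email": ["a@example.com", "b@example.com", "a@example.com", None],
    "price": [10.0, None, 7.5, 3.0],
})

# Completeness rate: share of all cells that are populated
completeness_rate = df.notna().to_numpy().mean() * 100

# Uniqueness rate: share of non-missing emails that do not repeat an earlier row
emails = df["email"].dropna()
uniqueness_rate = (~emails.duplicated()).mean() * 100

print(f"Completeness Rate: {completeness_rate:.2f}%")
print(f"Uniqueness Rate: {uniqueness_rate:.2f}%")
```

Tracking these numbers across loads shows whether validation work is actually improving the data over time.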

Implementing Data Quality Checks

The steps below outline how to put data quality checks into practice.

Pre-processing Steps

Effective data quality checks often involve data pre-processing techniques such as outlier detection and data transformation. Outliers are data points significantly different from others and can distort analysis results. Here’s an example of using scikit-learn in Python to detect outliers in a dataset.

python

from sklearn.ensemble import IsolationForest

# Detect outliers using Isolation Forest

clf = IsolationForest(contamination=0.05, random_state=42)  # Fixed seed for reproducible results

outliers = clf.fit_predict(data[['price', 'quantity']])

data['is_outlier'] = outliers == -1

This code uses the Isolation Forest algorithm to detect outliers in the “price” and “quantity” columns.
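For a single numeric column, a simpler and more transparent alternative is the interquartile range (IQR) rule. The sketch below uses only Python's standard library; the price list is invented, and the 1.5 multiplier is the conventional default rather than a value taken from this post.

```python
from statistics import quantiles

# Invented sample prices with one obviously unusual value
prices = [9.5, 10.0, 10.5, 11.0, 9.8, 10.2, 55.0]

# Quartiles split the sorted data into four parts; the middle cut point is unused here
q1, _, q3 = quantiles(prices, n=4)
iqr = q3 - q1

# Values beyond 1.5 * IQR from the quartiles are treated as outliers
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [p for p in prices if p < lower or p > upper]
print(outliers)
```

The IQR rule is easy to explain to stakeholders, while Isolation Forest is better suited to spotting unusual combinations across several columns.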

Automated Data Validation

Automating data validation processes helps in maintaining consistent data quality over time. Writing scripts or using dedicated tools can streamline validation tasks.

The following Python script demonstrates how to perform automated data validation on a sample dataset.

python

# Automated data validation script

def automated_data_validation(data):

    data = data.copy()  # Work on a copy so the caller's DataFrame is left untouched

    # Data accuracy checks

    data['price'] = data['price'].round(2)

    data['quantity'] = data['quantity'].clip(lower=0)

    # Data completeness checks

    data.dropna(subset=['customer_name'], inplace=True)

    data['order_date'] = data['order_date'].fillna(pd.to_datetime('today'))

    # Data consistency checks

    duplicate_emails = data[data.duplicated(subset=['email'])]  # Kept for inspection or logging

    data.drop_duplicates(subset=['email'], inplace=True)

    return data

# Load and validate data

data = pd.read_csv('sales_data.csv')

validated_data = automated_data_validation(data)

This script performs accuracy, completeness, and consistency checks on the dataset.
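In a scheduled pipeline, it can be just as useful to report problems as to fix them. The sketch below counts rule violations without modifying the data, so a job can log the result or stop when a count is above zero. The `quality_report` function, its rule names, and the sample data are hypothetical.

```python
import pandas as pd

def quality_report(df):
    """Count rule violations without modifying the input DataFrame."""
    return {
        "negative_quantity": int((df["quantity"] < 0).sum()),
        "missing_customer_name": int(df["customer_name"].isna().sum()),
        "duplicate_email": int(df["email"].duplicated().sum()),
    }

# Invented sample data with one violation of each rule
sample = pd.DataFrame({
    "customer_name": ["Ana", None, "Chen"],
    "email": ["a@example.com", "b@example.com", "a@example.com"],
    "quantity": [1, -2, 3],
})

report = quality_report(sample)
print(report)

# Collect only the rules that were violated, for logging or alerting
failed = {name: count for name, count in report.items() if count > 0}
```

Separating detection from correction keeps a record of what was wrong with each load, which silent fixes would otherwise erase.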

Data Quality Tools

Let us take a brief look at the data quality tools available and how to choose among them.

Overview of Data Quality Tools

Various tools are available to assist with data quality management. These tools offer features like data profiling, cleansing, and validation. Some popular options include Trifacta, Talend, and OpenRefine. These tools help streamline data quality processes and enhance data accuracy and consistency.

Choosing the Right Tool

Selecting the appropriate data quality tool depends on your organization’s needs, data volume, and complexity. Consider factors like user-friendliness, scalability, and integration capabilities when evaluating tools. Perform a thorough assessment to determine the tool that aligns best with your data quality objectives.

Conclusion

In the data-driven landscape, data quality and validation are essential cornerstones. We’ve explored how ensuring accuracy, completeness, and consistency contributes to reliable decision-making and insights. From rounding values and addressing missing data to enforcing standardized formats, the journey through data quality principles has underscored their vital role.

Validation techniques, including crafting rules and utilizing data profiling tools, offer means to verify and enhance data integrity. Quantifying data quality through metrics provides measurable benchmarks for improvement. Automated validation scripts and tools further streamline these processes, making data quality an ongoing commitment.

The right data quality tool is the one that aligns with an organization’s goals. These tools offer profiling, cleansing, and validation capabilities that help maintain data quality at scale.

In sum, data quality and validation are foundational. They empower organizations to harness data’s full potential, driving innovation and growth, while ensuring informed decision-making in a competitive data-driven landscape.
