Organizations today handle massive volumes of data, often referred to as Big Data. Data from sources such as social media, sensors, and online transactions keeps growing rapidly, creating both challenges and opportunities for businesses. Extracting meaningful insights from datasets of this size is difficult with traditional, single-machine data processing techniques. Tools such as PySpark make it practical to process and analyze Big Data efficiently.

In this hands-on tutorial, we will explore the basics of working with Big Data using PySpark, along with practical examples and code snippets. We will cover essential concepts such as data loading, transformation, aggregation, and even machine learning with PySpark. Through these examples, you will gain a solid foundation in using PySpark to tackle Big Data challenges.

So, whether you are a data scientist, data engineer, or anyone working with large datasets, PySpark can be a valuable tool in your arsenal. By combining the power of distributed computing with the simplicity of Python, PySpark lets you efficiently process, analyze, and derive insights from Big Data. Let’s dive in and unlock the potential of PySpark in working with Big Data.

What is PySpark?

PySpark, the Python API for Apache Spark, has emerged as a popular choice for processing and analyzing large datasets. Apache Spark is an open-source cluster computing framework that provides fast, distributed data processing. It processes data in parallel across a cluster of computers, which makes it highly scalable and well suited to Big Data workloads. PySpark brings the ease and flexibility of Python programming to the distributed computing capabilities of Spark.

The key advantage of PySpark is its distributed computing model: a large dataset is divided into partitions, and the work on those partitions is spread across multiple nodes in a cluster, so processing scales as nodes are added. PySpark also provides a high-level API that simplifies development, making it accessible to data scientists and analysts familiar with Python.
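To make the distributed model above more concrete, here is a minimal plain-Python sketch of the same idea. It does not use Spark: the data is split into partitions, each partition is processed independently, and the partial results are merged at the end. The helper names `split_into_partitions`, `count_partition`, and `merge_counts`, the sample lines, and the use of threads to stand in for cluster nodes are all assumptions made for illustration; Spark's real scheduling and data movement are considerably more involved.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(records, num_partitions):
    """Deal the records round-robin into num_partitions smaller lists."""
    return [records[i::num_partitions] for i in range(num_partitions)]


def count_partition(lines):
    """Per-partition work: count the words in one chunk only."""
    return Counter(word for line in lines for word in line.split())


def merge_counts(left, right):
    """Combine step: add two partial results together."""
    return left + right


# Hypothetical sample data standing in for a large text file
lines = [
    "big data with spark",
    "spark runs in parallel",
    "python meets spark",
    "big clusters",
]

partitions = split_into_partitions(lines, num_partitions=2)

# Each worker thread plays the role of a node processing its own partition
with ThreadPoolExecutor(max_workers=2) as pool:
    partial_counts = list(pool.map(count_partition, partitions))

# Merge the per-partition results into one final answer
total_counts = reduce(merge_counts, partial_counts, Counter())
print(total_counts["spark"])  # 3
```

The point of the sketch is that `count_partition` never needs to see the whole dataset, which is what allows the work to be spread across machines.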

To get started with PySpark, you need to have Spark installed on your machine. Spark can be set up on a single machine for development and testing purposes or deployed on a cluster of machines for large-scale production environments. Once you have Spark installed, you can use PySpark by importing the necessary modules and functions.
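Before running the examples, it can help to confirm that the prerequisites are visible from Python. The sketch below uses a hypothetical helper, `check_pyspark_prerequisites`, which is not part of PySpark. It assumes PySpark is installed as the `pyspark` Python package and that Spark, which runs on the JVM, can find a `java` executable on the PATH. Your setup may differ, for example if Java is located through the `JAVA_HOME` environment variable instead.

```python
import importlib.util
import shutil


def check_pyspark_prerequisites():
    """Report whether the pyspark package and a java executable can be found."""
    return {
        # True if "import pyspark" would find the package
        "pyspark_importable": importlib.util.find_spec("pyspark") is not None,
        # True if a "java" executable is on the PATH (an assumption about your setup)
        "java_on_path": shutil.which("java") is not None,
    }


status = check_pyspark_prerequisites()
for name, ok in status.items():
    print(f"{name}: {'found' if ok else 'missing'}")
```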

Let’s dive into some examples to understand how PySpark works with Big Data.

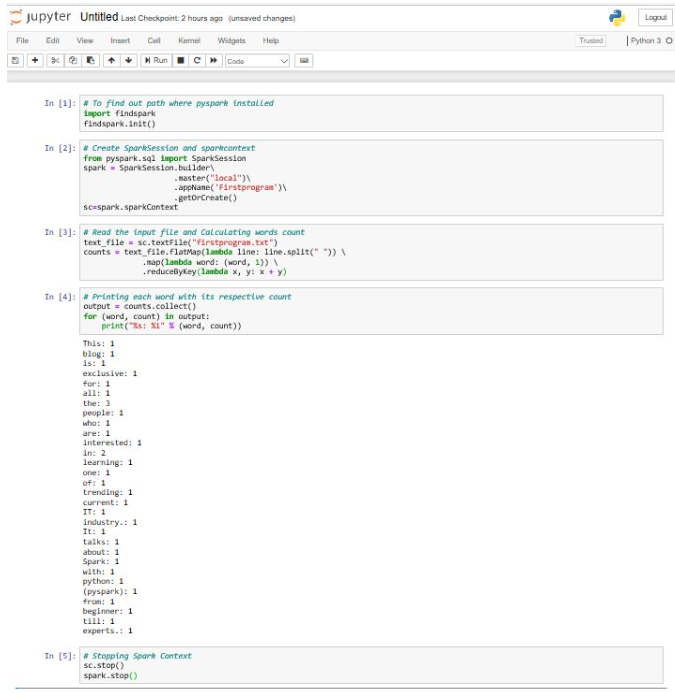

Example 1: Word Count

One of the classic examples of data processing is the word count problem. Let’s assume we have a large text document, and we want to count the occurrences of each word in the document. With PySpark, we can easily solve this problem in a distributed manner.

    from pyspark.sql import SparkSession

    # Create a SparkSession
    spark = SparkSession.builder.appName("WordCount").getOrCreate()

    # Load the text file; each line becomes a row with a single "value" column
    text_file = spark.read.text("path/to/large_text_document.txt")

    # Split the lines into words
    words = text_file.rdd.flatMap(lambda line: line.value.split())

    # Count the occurrences of each word (the result is returned to the driver)
    word_counts = words.countByValue()

    # Print the word counts
    for word, count in word_counts.items():
        print(f"{word}: {count}")

    # Stop the SparkSession
    spark.stop()

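In this example, we start by creating a SparkSession, which is the entry point for Spark functionality. We then load the text file into a DataFrame using the read.text() function, which produces one row per line in a single column named value. Next, we access the underlying RDD and split the lines into individual words using the flatMap() transformation. Finally, we count the occurrences of each word using the countByValue() action and print the results. Because countByValue() returns the counts to the driver as a Python dictionary, this approach is best suited to cases where the number of distinct words fits comfortably in driver memory.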
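If the difference between a map-style and a flatMap-style transformation is unclear, the following plain-Python sketch may help. It does not use Spark; it only mimics what the two operations compute on a tiny, made-up input. The variable names and sample lines are assumptions for illustration.

```python
from collections import Counter
from itertools import chain

# Hypothetical sample input: two short lines of text
lines = ["to be or", "not to be"]

# map-like: exactly one output element per input line (a list of word lists)
mapped = [line.split() for line in lines]

# flatMap-like: the per-line results are flattened into one sequence of words
flat_mapped = list(chain.from_iterable(line.split() for line in lines))

# countByValue-like: a dictionary of value -> number of occurrences
word_counts = dict(Counter(flat_mapped))

print(mapped)       # [['to', 'be', 'or'], ['not', 'to', 'be']]
print(flat_mapped)  # ['to', 'be', 'or', 'not', 'to', 'be']
print(word_counts)  # {'to': 2, 'be': 2, 'or': 1, 'not': 1}
```

Flattening matters here because counting needs a collection of individual words, not a collection of lists.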

Example 2: Data Aggregation

PySpark provides powerful aggregation functions that allow us to summarize and analyze large datasets. Let’s consider an example where we have a dataset containing information about sales transactions, and we want to calculate the total sales amount for each product category.

    from pyspark.sql import SparkSession
    # Import the functions module as F to avoid shadowing Python's built-in sum()
    from pyspark.sql import functions as F

    # Create a SparkSession
    spark = SparkSession.builder.appName("DataAggregation").getOrCreate()

    # Load the sales data into a DataFrame
    sales_data = spark.read.csv("path/to/sales_data.csv", header=True, inferSchema=True)

    # Calculate the total sales amount by product category
    sales_by_category = sales_data.groupBy("category").agg(F.sum("amount").alias("total_sales"))

    # Show the result
    sales_by_category.show()

    # Stop the SparkSession
    spark.stop()

In this example, we load the sales data from a CSV file into a DataFrame, using header=True to take column names from the first row and inferSchema=True to let Spark detect column types. We then group the data by the “category” column and use the agg() function with the sum() aggregation function to calculate the total sales amount for each category. Finally, we display the result using the show() method.
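For readers new to group-and-aggregate operations, here is a minimal plain-Python sketch of what grouping by category and summing amounts computes. It does not use Spark, and the sample rows are invented for illustration; only the column names `category` and `amount` come from the example above.

```python
from collections import defaultdict

# Hypothetical sales rows; in the Spark example these would come from the CSV file
sales_rows = [
    {"category": "books", "amount": 12.5},
    {"category": "games", "amount": 40.0},
    {"category": "books", "amount": 7.5},
    {"category": "toys", "amount": 15.0},
    {"category": "games", "amount": 10.0},
]

# Group by category and keep a running sum of the amounts in each group
total_sales = defaultdict(float)
for row in sales_rows:
    total_sales[row["category"]] += row["amount"]

for category, total in sorted(total_sales.items()):
    print(f"{category}: {total}")
# books: 20.0
# games: 50.0
# toys: 15.0
```

Spark produces the same kind of result, one row per category, but computes partial sums on each partition before combining them.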

Example 3: Machine Learning with PySpark

PySpark integrates seamlessly with Spark’s machine learning library, MLlib, allowing us to perform scalable and distributed machine learning tasks on Big Data. Let’s explore a simple example of training a linear regression model using PySpark.

    from pyspark.sql import SparkSession
    from pyspark.ml.feature import VectorAssembler
    from pyspark.ml.regression import LinearRegression
    from pyspark.ml.evaluation import RegressionEvaluator

    # Create a SparkSession
    spark = SparkSession.builder.appName("LinearRegression").getOrCreate()

    # Load the dataset into a DataFrame
    data = spark.read.csv("path/to/dataset.csv", header=True, inferSchema=True)

    # Prepare the data for training: combine the feature columns into one vector column
    assembler = VectorAssembler(inputCols=["feature1", "feature2", "feature3"], outputCol="features")
    data = assembler.transform(data).select("features", "label")

    # Split the data into training and testing sets
    train_data, test_data = data.randomSplit([0.7, 0.3])

    # Train the linear regression model
    lr = LinearRegression(featuresCol="features", labelCol="label")
    model = lr.fit(train_data)

    # Make predictions on the test data
    predictions = model.transform(test_data)

    # Evaluate the model's performance
    evaluator = RegressionEvaluator(labelCol="label", predictionCol="prediction", metricName="rmse")
    rmse = evaluator.evaluate(predictions)

    # Print the root mean squared error (RMSE)
    print("Root Mean Squared Error (RMSE):", rmse)

    # Stop the SparkSession
    spark.stop()

In this example, we start by loading the dataset into a DataFrame. We then use the VectorAssembler to combine the input features into a single vector column called “features”, which is the input format Spark’s machine learning estimators expect. We select the “features” column and the target variable column (“label”) from the transformed data. Next, we split the data into training and testing sets using the randomSplit() method, with roughly 70% of the rows for training and 30% for testing.
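One detail worth knowing is that a random split yields approximately, not exactly, the requested proportions, and that the result changes between runs unless a seed is fixed (`randomSplit()` accepts an optional `seed` argument). The plain-Python sketch below illustrates the general idea of assigning each row by an independent random draw. The function `random_split` is a hypothetical stand-in written for this tutorial, not Spark's implementation.

```python
import random


def random_split(rows, weights, seed):
    """Assign each row to one split using an independent random draw."""
    total = sum(weights)
    # Cumulative upper bounds of the normalized weights, e.g. [0.7, 1.0]
    bounds = []
    cumulative = 0.0
    for weight in weights:
        cumulative += weight / total
        bounds.append(cumulative)

    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    splits = [[] for _ in weights]
    for row in rows:
        draw = rng.random()
        for index, upper in enumerate(bounds):
            if draw < upper:
                splits[index].append(row)
                break
        else:
            # Guard against floating-point rounding in the last bound
            splits[-1].append(row)
    return splits


rows = list(range(1000))  # hypothetical stand-in for the rows of a dataset
train_rows, test_rows = random_split(rows, [0.7, 0.3], seed=42)

# Sizes are close to 700 and 300, but not guaranteed to match exactly
print(len(train_rows), len(test_rows))
```

Fixing the seed is useful when you want to compare models fairly, since every run then trains and evaluates on the same rows.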

We proceed to define a linear regression model and fit it to the training data using the fit() method. Once the model is trained, we make predictions on the test data using the transform() method. Finally, we evaluate the model’s performance using the RegressionEvaluator and print the root mean squared error (RMSE) as a metric.
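To clarify what the RMSE metric measures, here is a small plain-Python sketch of the calculation: take the difference between each label and its prediction, square it, average the squares, and take the square root. The function `rmse` and the sample values are assumptions for illustration; in the Spark example the evaluator performs this computation over the predictions DataFrame.

```python
import math


def rmse(labels, predictions):
    """Root mean squared error between two equal-length sequences."""
    squared_errors = [(y - p) ** 2 for y, p in zip(labels, predictions)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical true values and model predictions
labels = [3.0, 5.0, 7.0, 9.0]
predictions = [2.0, 5.0, 8.0, 11.0]

# Errors are 1, 0, -1, -2, so the mean squared error is (1 + 0 + 1 + 4) / 4 = 1.5
error = rmse(labels, predictions)
print(f"RMSE: {error:.4f}")  # RMSE: 1.2247
```

RMSE is expressed in the same units as the label, and lower values indicate predictions that are closer to the true values.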

Conclusion

Working with Big Data using PySpark opens up a world of possibilities for processing, analyzing, and deriving insights from large datasets. In this tutorial, we have covered the basics of working with PySpark, including examples of word count, data aggregation, and machine learning.

PySpark’s distributed processing capabilities enable us to handle Big Data efficiently and perform complex computations in parallel across a cluster of machines. By leveraging the power of Spark’s ecosystem, we can tackle a wide range of data-related challenges and extract valuable information from massive datasets.

Remember to install Spark and set up a SparkSession to begin working with PySpark. Then, explore the extensive PySpark documentation and experiment with different functionalities to further enhance your understanding.

With PySpark, you have the tools at your disposal to unlock the potential of Big Data and make informed decisions based on powerful data analysis. Happy coding and exploring the world of PySpark!
