In today’s data-driven world, organizations face the challenge of managing and extracting insights from vast amounts of data. Data warehousing emerges as a powerful solution to consolidate and organize data, enabling efficient analysis and decision-making. In this comprehensive blog post, we will delve into the core concepts and architecture of data warehousing, explore advanced techniques, provide real-life code examples, and conclude with key takeaways.

Understanding Data Warehousing

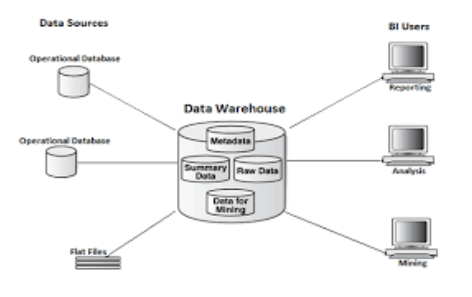

Data warehousing involves the process of integrating, transforming, and storing large volumes of structured and unstructured data from various sources into a centralized repository. Its primary objective is to provide a unified view of data for analysis, reporting, and decision support.

Key Concepts in Data Warehousing

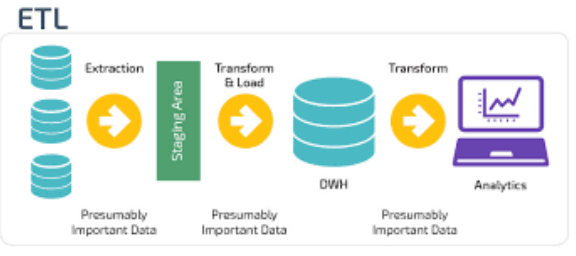

Extract, Transform, Load (ETL)

ETL plays a pivotal role in data warehousing. It encompasses three essential stages: extraction, transformation, and loading. During extraction, data is retrieved from disparate sources such as databases, files, or APIs. The extracted data is then transformed to ensure consistency, quality, and compatibility with the data warehouse schema. Finally, the transformed data is loaded into the data warehouse for storage and analysis.
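The three stages can be sketched end to end with only the Python standard library. This is a minimal illustration rather than a production pipeline: the CSV text, the `sales` table, and the `extract`, `transform`, and `load` helpers are all assumptions made up for the example, and an in-memory SQLite database stands in for the warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, database, or API.
RAW_CSV = """customer,product,quantity
alice,Widget,2
bob,widget ,3
carol,Gadget,
dave,Gadget,5
"""


def extract(text):
    """Extract: read raw rows from the CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize product names and drop rows without a quantity."""
    cleaned = []
    for row in rows:
        quantity = row["quantity"].strip()
        if not quantity:
            continue  # skip incomplete records
        cleaned.append(
            (row["customer"].strip(), row["product"].strip().lower(), int(quantity))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (customer TEXT, product TEXT, quantity INTEGER)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
load(transform(extract(RAW_CSV)), conn)

# Verify the load with a simple aggregate query.
totals = dict(
    conn.execute("SELECT product, SUM(quantity) FROM sales GROUP BY product")
)
print(totals)
```

Keeping each stage in its own function makes it easier to test the transformation rules separately from the source and target systems.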

Dimensional Modelling

Dimensional modelling is a design technique that organizes data into dimensions and facts. Dimensions represent the business entities or descriptive attributes, while facts are the measurable metrics or events associated with those dimensions. The star schema and snowflake schema are commonly used dimensional modelling approaches.

Star Schema

The star schema is a widely adopted dimensional modelling technique. It consists of a central fact table surrounded by dimension tables. The fact table contains the primary metrics or measures, while the dimension tables provide descriptive attributes related to those measures. The star schema simplifies queries, enhances performance, and facilitates intuitive data analysis.
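A small star schema might look like the following sketch. The table and column names (`fact_sales`, `dim_product`, `dim_region`) and the sample rows are hypothetical, and SQLite is used only so the example runs anywhere. The point to notice is that the query reaches a dimension with a single join from the fact table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables hold descriptive attributes.
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
CREATE TABLE dim_region  (region_id INTEGER PRIMARY KEY, region_name TEXT);

-- The fact table holds the measures plus one key per dimension.
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    region_id  INTEGER REFERENCES dim_region(region_id),
    quantity   INTEGER,
    revenue    REAL
);

INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Gadget', 'Hardware');
INSERT INTO dim_region  VALUES (1, 'North'), (2, 'South');
INSERT INTO fact_sales  VALUES (1, 1, 10, 100.0), (1, 2, 5, 50.0), (2, 1, 3, 90.0);
""")

# Total quantity and revenue per region: one join from fact to dimension.
rows = conn.execute("""
    SELECT r.region_name, SUM(f.quantity), SUM(f.revenue)
    FROM fact_sales AS f
    JOIN dim_region AS r ON r.region_id = f.region_id
    GROUP BY r.region_name
    ORDER BY r.region_name
""").fetchall()
print(rows)
```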

Snowflake Schema

The snowflake schema extends the star schema by further normalizing dimension tables. In a snowflake schema, dimension tables are divided into multiple levels of detail, resulting in a more complex structure. Although it can improve data integrity and reduce redundancy, it may introduce additional complexity in query execution.
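To illustrate the trade-off, here is a minimal sketch in which a product dimension is normalized so that the category lives in its own table. All table names and rows are hypothetical, and SQLite is used only for convenience. Category names are now stored once, but a report by category needs two joins instead of one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- The category attribute is moved out of the product dimension into its own table.
CREATE TABLE dim_category (category_id INTEGER PRIMARY KEY, category_name TEXT);
CREATE TABLE dim_product (
    product_id   INTEGER PRIMARY KEY,
    product_name TEXT,
    category_id  INTEGER REFERENCES dim_category(category_id)
);
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    quantity   INTEGER
);

INSERT INTO dim_category VALUES (1, 'Hardware'), (2, 'Software');
INSERT INTO dim_product  VALUES (1, 'Widget', 1), (2, 'Gadget', 1), (3, 'App', 2);
INSERT INTO fact_sales   VALUES (1, 10), (2, 3), (3, 7), (3, 1);
""")

# Quantity per category: fact -> product -> category (two joins).
by_category = conn.execute("""
    SELECT c.category_name, SUM(f.quantity)
    FROM fact_sales AS f
    JOIN dim_product  AS p ON p.product_id = f.product_id
    JOIN dim_category AS c ON c.category_id = p.category_id
    GROUP BY c.category_name
    ORDER BY c.category_name
""").fetchall()
print(by_category)
```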

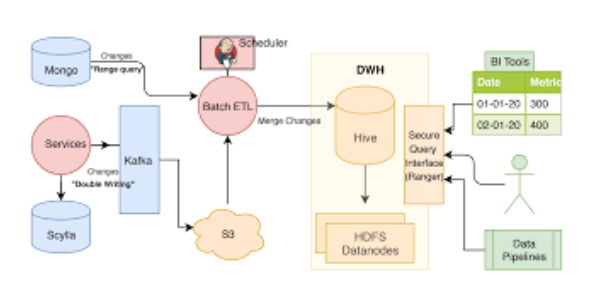

Data Warehousing Architecture

Data warehousing architecture comprises various components that collaborate to enable data integration, storage, and analysis. Let’s explore each component in detail and provide in-depth code examples.

Data Sources

Data can originate from diverse sources, such as relational databases, transactional systems, web services, or external APIs. Let’s consider an example where we extract data from a PostgreSQL database using Python.

python

import psycopg2

# Establish a connection to the PostgreSQL database
conn = psycopg2.connect(
    host="localhost",
    dbname="mydatabase",
    user="myuser",
    password="mypassword",
)

# Extract data from a table, naming the columns explicitly
cursor = conn.cursor()
query = "SELECT customer, product, quantity FROM sales"
cursor.execute(query)
data = cursor.fetchall()

# Release database resources
cursor.close()
conn.close()

Extract, Transform, Load (ETL) Tools

ETL tools streamline the extraction, transformation, and loading processes. Here’s an example using Python’s pandas library to transform the extracted data.

python

import pandas as pd

# Transform data using pandas: total quantity sold per product
df = pd.DataFrame(data, columns=["customer", "product", "quantity"])
transformed_data = df.groupby("product")["quantity"].sum()

Data Warehouse

The data warehouse serves as the central repository for storing structured and organized data. Let’s create a basic sales table in Apache Hive.

sql

-- Create a table for storing sales data
-- (the source statement is cut off; the comma field delimiter is an assumption)
CREATE TABLE sales (
    customer STRING,
    product STRING,
    quantity INT
)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ',';

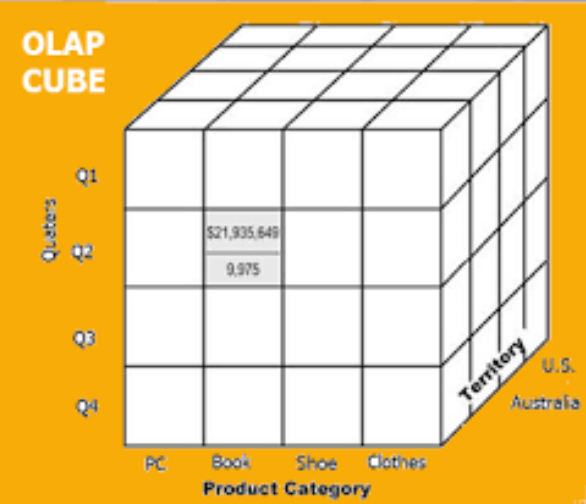

OLAP Cube

OLAP (Online Analytical Processing) cubes provide advanced multidimensional analysis capabilities. Let’s explore the creation of an OLAP cube using the `pycubelib` library in Python.

python

from pycubelib import Cube

# Create an OLAP cube with three dimensions and two measures
cube = Cube()
cube.add_dimension("Time")
cube.add_dimension("Product")
cube.add_dimension("Region")
cube.add_measure("Quantity")
cube.add_measure("Revenue")
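Independently of any particular cube library, the core operation of a cube, aggregating measures over a chosen combination of dimensions, can be sketched in plain Python. The fact rows and the `roll_up` helper below are hypothetical and exist only to show the idea.

```python
from collections import defaultdict

# Hypothetical fact rows: (time, product, region, quantity, revenue)
FACTS = [
    ("2023-Q1", "Widget", "North", 10, 100.0),
    ("2023-Q1", "Gadget", "North", 3, 90.0),
    ("2023-Q2", "Widget", "South", 5, 50.0),
    ("2023-Q2", "Widget", "North", 2, 20.0),
]
DIMENSIONS = ("time", "product", "region")


def roll_up(facts, by):
    """Sum quantity and revenue, grouped by the given dimension names."""
    positions = [DIMENSIONS.index(name) for name in by]
    totals = defaultdict(lambda: [0, 0.0])
    for row in facts:
        key = tuple(row[i] for i in positions)
        totals[key][0] += row[3]  # quantity
        totals[key][1] += row[4]  # revenue
    return {key: tuple(value) for key, value in totals.items()}


# Roll up to one dimension, then to a combination of two.
by_product = roll_up(FACTS, by=["product"])
by_time_region = roll_up(FACTS, by=["time", "region"])
print(by_product)
print(by_time_region)
```

A dedicated OLAP engine typically precomputes or indexes such aggregates, whereas this sketch recomputes them on every call.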

Data Mart

A data mart is a subset of the data warehouse designed to serve a particular department, business function, or user group. It provides data tailored to specific analytical purposes. Let’s create a data mart table for regional sales using SQL.

sql

-- Create a data mart table for regional sales
CREATE TABLE sales_by_region (
    region_id INT,
    region_name VARCHAR(50),
    product_id INT,
    product_name VARCHAR(50),
    total_sales INT,
    total_revenue DECIMAL(10, 2)
);
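A data mart table like this is usually filled by aggregating warehouse tables. The sketch below shows one way to do that with an `INSERT ... SELECT`. The source tables `regions`, `products`, and `sales`, along with their rows, are hypothetical, and SQLite stands in for the warehouse engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical warehouse tables that feed the data mart.
CREATE TABLE regions  (region_id INTEGER PRIMARY KEY, region_name TEXT);
CREATE TABLE products (product_id INTEGER PRIMARY KEY, product_name TEXT);
CREATE TABLE sales    (region_id INTEGER, product_id INTEGER, quantity INTEGER, revenue REAL);
INSERT INTO regions  VALUES (1, 'North'), (2, 'South');
INSERT INTO products VALUES (1, 'Widget'), (2, 'Gadget');
INSERT INTO sales    VALUES (1, 1, 10, 100.0), (1, 1, 2, 20.0), (2, 2, 3, 90.0);

-- Data mart table with the same columns as the definition above.
CREATE TABLE sales_by_region (
    region_id INT, region_name VARCHAR(50),
    product_id INT, product_name VARCHAR(50),
    total_sales INT, total_revenue DECIMAL(10, 2)
);

-- Populate the data mart with one aggregated row per region and product.
INSERT INTO sales_by_region
SELECT r.region_id, r.region_name, p.product_id, p.product_name,
       SUM(s.quantity), SUM(s.revenue)
FROM sales AS s
JOIN regions  AS r ON r.region_id = s.region_id
JOIN products AS p ON p.product_id = s.product_id
GROUP BY r.region_id, r.region_name, p.product_id, p.product_name;
""")

mart = conn.execute(
    "SELECT region_name, product_name, total_sales, total_revenue "
    "FROM sales_by_region ORDER BY region_name"
).fetchall()
print(mart)
```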

Data Integration

Data integration involves combining data from various sources into a unified view within the data warehouse. This process ensures data consistency and facilitates comprehensive analysis. Here’s an example of integrating data from multiple CSV files using Python.

python

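import pandas as pd

# Read and merge multiple CSV files that share the same columns
files = ["file1.csv", "file2.csv", "file3.csv"]
dfs = [pd.read_csv(file) for file in files]

# ignore_index=True gives the merged frame a fresh, unique row index
merged_data = pd.concat(dfs, ignore_index=True)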

Data Quality and Governance

Data quality and governance are crucial aspects of data warehousing. Ensuring data accuracy, completeness, consistency, and adherence to regulatory standards is essential. Data profiling, validation, and cleansing techniques play a significant role in maintaining data quality.
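As a rough illustration of validation and cleansing, the sketch below checks records for completeness, validity, and duplicates before they would be loaded. The records, the rules, and the `validate` helper are assumptions chosen for the example, not a standard.

```python
# Hypothetical sales records with typical quality problems.
RECORDS = [
    {"customer": "alice", "product": "Widget", "quantity": 2},
    {"customer": "", "product": "Gadget", "quantity": 1},       # missing customer
    {"customer": "bob", "product": "Widget", "quantity": -4},   # invalid quantity
    {"customer": "alice", "product": "Widget", "quantity": 2},  # exact duplicate
]


def validate(records):
    """Split records into clean rows and (record, reason) rejects."""
    clean, rejected, seen = [], [], set()
    for record in records:
        key = (record["customer"], record["product"], record["quantity"])
        if not record["customer"] or not record["product"]:
            rejected.append((record, "missing required field"))
        elif not isinstance(record["quantity"], int) or record["quantity"] <= 0:
            rejected.append((record, "quantity must be a positive integer"))
        elif key in seen:
            rejected.append((record, "duplicate record"))
        else:
            seen.add(key)
            clean.append(record)
    return clean, rejected


clean, rejected = validate(RECORDS)
print(len(clean), "clean;", [reason for _, reason in rejected])
```

Keeping the rejected rows together with a reason, instead of silently dropping them, gives governance processes an audit trail to review.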

Real-Life Code Examples

ETL Process with Apache Spark

Apache Spark is a powerful framework for big data processing. Here’s an example of an ETL process using Spark’s DataFrame API:

python

from pyspark.sql import SparkSession
from pyspark.sql import functions as F

# Create a SparkSession
spark = SparkSession.builder.appName("ETLExample").getOrCreate()

# Extract data from a CSV file
df = spark.read.csv("data.csv", header=True, inferSchema=True)

# Transform data: total quantity per product, with an explicit column name
transformed_df = df.groupBy("product").agg(F.sum("quantity").alias("total_quantity"))

# Load data into the data warehouse (the PostgreSQL JDBC driver must be on the classpath)
transformed_df.write.mode("overwrite").jdbc(
    url="jdbc:postgresql://localhost/mydatabase",
    table="sales",
    properties={
        "user": "myuser",
        "password": "mypassword",
        "driver": "org.postgresql.Driver",
    },
)

Advanced Analysis with OLAP Cube

Utilize the OLAP cube to perform advanced analysis using Python’s pycubelib library:

python

from pycubelib import Cube

# Create an OLAP cube
cube = Cube()
cube.add_dimension("Time")
cube.add_dimension("Product")
cube.add_measure("Quantity")

# Query the cube for total quantity by product
result = cube.query([
    {"dimension": "Product", "hierarchy": "default", "level": "default"},
    {"measure": "Quantity", "function": "SUM"},
])

print(result)

Conclusion

Data warehousing is a foundational pillar of effective data management and analysis. In this post, we explored its key concepts, including ETL processes, dimensional modelling (star and snowflake schemas), and the importance of data integration, quality, and governance. We also walked through the architecture of a data warehouse, covering data sources, ETL tools, the warehouse itself, OLAP cubes, data marts, and data integration techniques, with code examples showing how each can be implemented.

By utilizing the concepts and techniques discussed in this blog, organizations can effectively manage and analyze their data assets, enabling informed decision-making and gaining a competitive edge in today’s data-driven landscape.

Remember, data warehousing is a vast and evolving field, and there are numerous advanced techniques, tools, and optimizations beyond the scope of this blog. It is essential to continuously explore and stay updated with the latest advancements in data warehousing to maximize its potential.

Data warehousing empowers organizations to unlock valuable insights from their data by providing a centralized, structured, and optimized environment for analysis and reporting. With a strong foundation in ETL processes, dimensional modelling, data integration, and data governance, combined with the right tools and technologies, businesses can make informed decisions, identify trends, and gain a competitive advantage.

So, dive deeper into the world of data warehousing, explore its vast possibilities, and leverage its capabilities to transform your organization’s data into a strategic asset.

Happy data warehousing!
