In the world of big data and analytics, efficient orchestration of data pipelines has become a cornerstone for organizations that want to extract actionable insights from large datasets. Managing these workflows by hand is error-prone and hard to scale. In response, a variety of data orchestration tools, with Apache Airflow at the forefront, have emerged to streamline and automate these processes. In this blog, we will explore data pipeline orchestration in depth, shed light on Apache Airflow’s capabilities, look at alternatives, and outline best practices, real-world use cases, and future trends. So let us get started.

The Essence of Data Pipeline Orchestration

At its core, data pipeline orchestration is about choreographing the movement and transformation of data across different stages, from its origin through processing to its final destination. In a rapidly evolving digital landscape, where data fuels decision-making, orchestrating these workflows becomes indispensable. Without proper coordination, errors, delays, and inefficiencies become far more likely.

Key Components and Challenges

Key Components

Source: The point of origin for raw data.

Processing: Where data undergoes transformations and computations.

Destination: The endpoint where processed data is stored or analyzed.
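
The three components above can be sketched in plain Python. This is only a minimal illustration and is not tied to any orchestration tool; the function names (extract, transform, load) and the sample records are assumptions made up for the example.

```python
from typing import Iterable


def extract() -> list[dict]:
    """Source: return raw records (hard-coded here; normally a file, API, or database)."""
    return [
        {"user": "alice", "amount": "10.50"},
        {"user": "bob", "amount": "3.25"},
    ]


def transform(rows: Iterable[dict]) -> list[dict]:
    """Processing: convert the amount field from text to a number."""
    return [{"user": r["user"], "amount": float(r["amount"])} for r in rows]


def load(rows: Iterable[dict], destination: list) -> int:
    """Destination: append processed rows to a store and report how many were written."""
    rows = list(rows)
    destination.extend(rows)
    return len(rows)


def run_pipeline(destination: list) -> int:
    # Each stage consumes the previous stage's output, in a fixed order.
    return load(transform(extract()), destination)


warehouse: list = []  # stand-in for a real data warehouse
written = run_pipeline(warehouse)
```

An orchestration tool takes over the job of run_pipeline: deciding when each stage runs, in what order, and what happens when one of them fails.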

Challenges of Manual Management

Error-Prone: Manual coordination introduces the risk of human errors.

Time-Consuming: As data volumes grow, the time spent running and checking each step by hand grows with them.

Scalability: Every new source or pipeline adds coordination work, so manual processes do not scale.

Benefits of Orchestration Tools

Orchestration tools alleviate these challenges by automating the coordination of tasks. They bring a slew of benefits, including enhanced visibility into workflows, efficient scheduling and execution of tasks, and real-time monitoring capabilities.

Apache Airflow in Action

Unraveling Apache Airflow’s Architecture

Apache Airflow stands out as a powerful open-source platform designed to tackle the intricacies of workflow orchestration. At its heart is the concept of Directed Acyclic Graphs (DAGs), which define the sequence and dependencies of tasks.
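
To make the DAG idea concrete, here is a small sketch that uses only Python's standard library (graphlib, available from Python 3.9), not Airflow itself. The task names are hypothetical. It shows how a set of dependencies determines a valid execution order, and why a cycle makes such an order impossible.

```python
from graphlib import CycleError, TopologicalSorter

# Map each task to the set of tasks it depends on (its upstream tasks).
dependencies = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "report": {"clean", "aggregate"},
}

# static_order() yields tasks so that every task appears after its dependencies.
execution_order = list(TopologicalSorter(dependencies).static_order())


def has_cycle(graph: dict) -> bool:
    """Return True if the graph cannot be ordered because it contains a cycle."""
    try:
        list(TopologicalSorter(graph).static_order())
    except CycleError:
        return True
    return False
```

For this graph the only valid order is extract, clean, aggregate, report. A graph in which two tasks depend on each other, such as {"a": {"b"}, "b": {"a"}}, has no valid order, which is why orchestrators require the graph to be acyclic.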

Navigating Airflow’s Web UI

Airflow’s web-based UI serves as the control center for DAG management. From visualizing workflows to inspecting task logs, manually triggering DAG runs, and pausing DAGs, the UI provides an intuitive interface for both data engineers and data scientists.

Example: Crafting a DAG in Airflow

Let us construct a DAG that performs a daily data processing task using a Python function.

python

from datetime import datetime, timedelta

from airflow import DAG
# Airflow 2.x import path; in Airflow 1.10 the module was airflow.operators.python_operator
from airflow.operators.python import PythonOperator

default_args = {
    'owner': 'data_engineer',
    'depends_on_past': False,
    'start_date': datetime(2023, 1, 1),
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

dag = DAG(
    'example_dag',
    default_args=default_args,
    description='An example DAG',
    # Run once a day (newer Airflow releases name this argument `schedule`)
    schedule_interval=timedelta(days=1),
)


def data_processing_function(**kwargs):
    # Your data processing logic here
    pass


task = PythonOperator(
    task_id='data_processing_task',
    python_callable=data_processing_function,
    # Airflow 2.x passes the task context to **kwargs automatically,
    # so the old provide_context=True argument is no longer needed
    dag=dag,
)

In this example, we define a DAG named ‘example_dag’ that runs daily, with a single task named ‘data_processing_task’ calling the data_processing_function.

Alternatives to Apache Airflow

While Apache Airflow is a juggernaut in the orchestration space, exploring alternative tools offers a more nuanced understanding of what suits specific use cases. Two notable alternatives are Apache NiFi and Luigi.

Apache NiFi: Streamlining Data Flows

Apache NiFi focuses on automating data flows between systems. Its user-friendly interface simplifies the design of data flows, making it particularly suitable for scenarios involving diverse data sources.

Luigi: A Pythonic Approach to Workflow Management

Luigi takes a Pythonic approach, allowing users to define workflows using Python classes. Its simplicity and flexibility make it an attractive choice for certain data pipeline scenarios, especially those with a more programmatic touch.

Example: Defining a Luigi Workflow

Let us create a Luigi workflow that performs a simple data transformation.

python

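import luigi


class DataTransformationTask(luigi.Task):
    def output(self):
        # Hypothetical output path; Luigi treats the task as complete once this file exists
        return luigi.LocalTarget('transformed_data.txt')

    def run(self):
        # Your data transformation logic here
        with self.output().open('w') as f:
            f.write('transformed data placeholder')


class MyWorkflow(luigi.WrapperTask):
    def requires(self):
        return DataTransformationTask()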

In this example, we define a Luigi task (DataTransformationTask) and a workflow (MyWorkflow) that orchestrates the task.
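
Luigi decides what to run by checking whether each task's output already exists: by default, a task with outputs counts as complete once all of them exist. The sketch below imitates that idea with the standard library only. It is not Luigi's implementation, and the SketchTask class and build function are hypothetical names invented for this illustration.

```python
import os
import tempfile


class SketchTask:
    """Toy stand-in for a task: it is complete once its output file exists."""

    def __init__(self, name, workdir, upstream=()):
        self.name = name
        self.path = os.path.join(workdir, name + ".out")
        self.upstream = list(upstream)

    def complete(self):
        return os.path.exists(self.path)

    def run(self):
        with open(self.path, "w") as f:
            f.write(self.name)


def build(task, executed):
    """Run upstream tasks first, skipping any task whose output already exists."""
    if task.complete():
        return
    for dep in task.upstream:
        build(dep, executed)
    task.run()
    executed.append(task.name)


workdir = tempfile.mkdtemp()
transform = SketchTask("transform", workdir)
workflow = SketchTask("workflow", workdir, upstream=[transform])

first_run, second_run = [], []
build(workflow, first_run)   # runs transform, then workflow
build(workflow, second_run)  # nothing to do: outputs already exist
```

The second call does no work because both output files are already present. The same property is what makes it safe to rerun a Luigi workflow after a partial failure: only the missing pieces are rebuilt.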

Choosing the Right Tool

Selecting the appropriate tool depends on factors like team expertise, organizational requirements, and the nature of the data workflows. While Airflow’s DAG-based approach suits complex workflows, NiFi’s visual interface might be preferable for scenarios involving multiple data sources.

Best Practices for Data Pipeline Orchestration

Nurturing Efficient Workflows

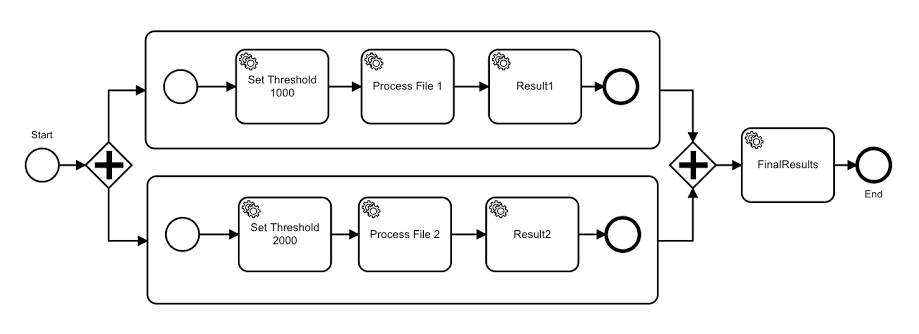

Parallelization: Break down workflows into smaller, parallelizable tasks.

Task Concurrency: Adjust settings to control the number of tasks executed simultaneously.
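
As a rough illustration of both points, the sketch below splits work into independent partitions and caps how many run at once. It uses Python's standard concurrent.futures module rather than any orchestrator; process_partition and the partition list are hypothetical placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def process_partition(partition_id: int) -> int:
    """Stand-in for an independent unit of work, e.g. one file or one date."""
    time.sleep(0.05)  # simulate I/O-bound work
    return partition_id * 2


partitions = list(range(8))

# max_workers caps how many partitions are processed at the same time.
with ThreadPoolExecutor(max_workers=4) as pool:
    # map() returns results in input order, regardless of completion order.
    results = list(pool.map(process_partition, partitions))
```

Orchestration tools expose the same trade-off through their own settings. In Airflow these include the global parallelism option, pools, and per-DAG limits; the exact names vary between versions, so check the documentation for the release in use.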

Managing Dependencies

Explicit Dependencies: Clearly define task dependencies in DAGs.

Trigger Rules: Use trigger rules to specify task behavior based on dependency status.
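
The following sketch shows what a trigger rule decides. The rule names are borrowed from Airflow, where all_success is the default, but the function itself is a simplified assumption rather than Airflow's logic: it ignores skipped tasks and only evaluates once every upstream task has finished, whereas Airflow can fire rules such as one_failed earlier.

```python
def should_run(trigger_rule: str, upstream_states: list[str]) -> bool:
    """Decide whether a task runs once all upstream tasks have finished.

    upstream_states holds "success" or "failed" for each upstream task.
    """
    succeeded = [state == "success" for state in upstream_states]
    if trigger_rule == "all_success":
        return all(succeeded)
    if trigger_rule == "all_failed":
        return not any(succeeded)
    if trigger_rule == "one_success":
        return any(succeeded)
    if trigger_rule == "one_failed":
        return not all(succeeded)
    if trigger_rule == "all_done":
        return True  # run regardless of how the upstream tasks ended
    raise ValueError(f"unknown trigger rule: {trigger_rule}")
```

A cleanup step is a typical candidate for all_done, since it should run whether or not the upstream work succeeded, while an alerting step fits one_failed.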

Handling Failures

Retry Policies: Configure tasks to automatically attempt reruns in case of failures.

Error Handling: Implement mechanisms to gracefully handle failures and notify stakeholders.
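
A retry policy with a failure notification can be sketched in a few lines of plain Python. The parameter names retries and retry_delay mirror the default_args in the Airflow example earlier; run_with_retries, flaky_task, and the on_failure callback are hypothetical names for this illustration and are not part of any library.

```python
import time


def run_with_retries(task, retries=1, retry_delay=0.0, on_failure=None):
    """Call task(); on an exception, wait and try again up to `retries` more times."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if attempt >= retries:
                if on_failure is not None:
                    on_failure(exc)  # e.g. notify stakeholders
                raise
            attempt += 1
            time.sleep(retry_delay)


calls = {"count": 0}


def flaky_task():
    """Fails on the first call and succeeds on the second."""
    calls["count"] += 1
    if calls["count"] < 2:
        raise RuntimeError("transient error")
    return "ok"


result = run_with_retries(flaky_task, retries=1, retry_delay=0.0)
```

Retries only help with transient failures such as a network timeout. A task that fails deterministically will exhaust its retries, so the final failure still needs to reach someone, which is the role of the on_failure callback here.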

Monitoring and Debugging

Logging: Leverage logging within tasks for monitoring and debugging.

Airflow UI: Regularly monitor the Airflow web UI for workflow status and issue investigation.
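
One way to get consistent logging without repeating it in every task is a small decorator. This is a sketch built on Python's standard logging module; the logged_task decorator and the count_rows function are hypothetical examples, not part of Airflow or Luigi.

```python
import logging
import time
from functools import wraps

logger = logging.getLogger("pipeline")


def logged_task(func):
    """Log the start, duration, and any failure of the wrapped task."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        logger.info("task %s started", func.__name__)
        started = time.monotonic()
        try:
            result = func(*args, **kwargs)
        except Exception:
            # logger.exception records the message together with the traceback.
            logger.exception("task %s failed", func.__name__)
            raise
        elapsed = time.monotonic() - started
        logger.info("task %s finished in %.3fs", func.__name__, elapsed)
        return result
    return wrapper


@logged_task
def count_rows(rows):
    return len(rows)


logging.basicConfig(level=logging.INFO)
row_count = count_rows([1, 2, 3])
```

Messages written through the standard logging module inside an Airflow task typically end up in that task's log, where they can be read from the web UI.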

Exploring Use Cases

Unveiling Orchestrated Success

Use Case 1: ETL Automation

A major e-commerce company utilized Apache Airflow to automate their ETL processes. The orchestrated workflows seamlessly extracted data from various sources, applied transformations, and loaded cleansed data into a centralized warehouse, resulting in a more streamlined and timely processing pipeline.

Use Case 2: Batch Processing in Finance

A financial institution opted for Apache NiFi to handle batch processing of financial transactions. NiFi’s visual interface facilitated the design of intricate data flows, ensuring accurate and efficient processing of financial data on a daily basis.

Use Case 3: Machine Learning Pipelines with Luigi

A research organization adopted Luigi for orchestrating machine learning pipelines. Luigi’s programmatic approach allowed the team to define and manage workflows for data preprocessing, model training, and result evaluation, improving the reproducibility of their machine learning experiments.

Example: Machine Learning Workflow with Luigi

python

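import luigi


class DataPreprocessingTask(luigi.Task):
    def output(self):
        # Hypothetical output path; Luigi uses it to decide whether the task is complete
        return luigi.LocalTarget('preprocessed.txt')

    def run(self):
        # Data preprocessing logic for machine learning
        with self.output().open('w') as f:
            f.write('preprocessed data placeholder')


class ModelTrainingTask(luigi.Task):
    def requires(self):
        return DataPreprocessingTask()

    def output(self):
        return luigi.LocalTarget('model.txt')  # hypothetical path

    def run(self):
        # Model training logic
        with self.output().open('w') as f:
            f.write('model placeholder')


class EvaluationTask(luigi.Task):
    def requires(self):
        return ModelTrainingTask()

    def output(self):
        return luigi.LocalTarget('evaluation.txt')  # hypothetical path

    def run(self):
        # Evaluation logic
        with self.output().open('w') as f:
            f.write('evaluation placeholder')


class MachineLearningWorkflow(luigi.WrapperTask):
    def requires(self):
        return EvaluationTask()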

In this example, we define tasks for data preprocessing, model training, and evaluation, creating a machine learning workflow with Luigi.

Future Trends in Data Pipeline Orchestration

Navigating Tomorrow’s Landscape

Kubernetes Integration

The integration of orchestration tools with Kubernetes is gaining traction, providing enhanced scalability and resource utilization.

Serverless Computing

The rise of serverless computing is reshaping orchestration, allowing organizations to optimize costs and focus on building workflows rather than managing infrastructure.

Machine Learning Orchestration

With machine learning becoming ubiquitous, orchestration tools such as Apache Airflow are evolving to better support the deployment and management of machine learning models in production.

Conclusion

In conclusion, the orchestration of data pipelines is not just a necessity; it is a strategic imperative. Apache Airflow, along with its alternatives, offers a powerful arsenal for organizations seeking to conquer the complexities of modern data workflows. By following best practices, learning from real-world use cases, and staying attuned to future trends, organizations can forge resilient, efficient, and future-ready data infrastructures that pave the way for data-driven success in an ever-evolving landscape.
