As data volumes grow across every sector, organizations need more capable strategies for managing and processing that data. Multi-cloud data pipelines, which move and process data across more than one cloud provider, have become a central part of big data and cloud computing practice.

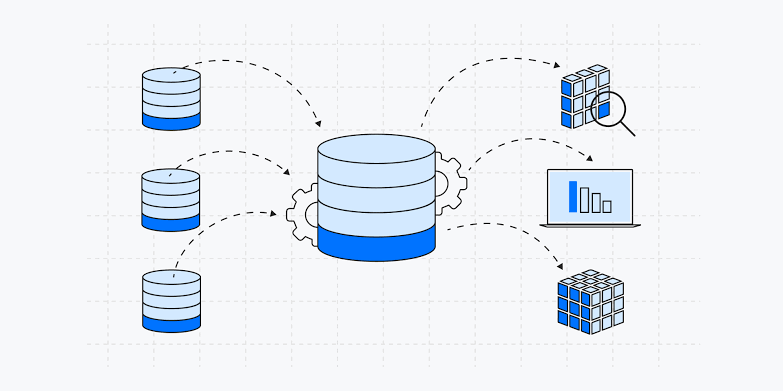

Businesses and organizations are increasingly adopting diverse cloud services from multiple providers to enhance their operational flexibility and data handling capabilities. However, this shift towards a multi-cloud strategy introduces a set of unique challenges, particularly in the context of building and maintaining efficient data pipelines. These pipelines, which are crucial for the automated movement, processing, and analysis of data, must now be designed to seamlessly integrate and function across various cloud platforms, each with its own set of APIs, services, and data storage solutions.

In this post, we look at the complexities of constructing data pipelines in multi-cloud environments. We explore the challenges that arise in such settings, discuss architectural considerations, highlight key technologies and tools, and provide practical solutions and code examples to address these challenges.

What are Multi-Cloud Data Pipelines?

Data pipelines in a multi-cloud environment are sophisticated systems designed to transport and transform data across different cloud services. They involve a series of steps, including data collection, processing, storage, and analysis, often utilizing various tools and platforms. The key difference in a multi-cloud scenario is the need for pipelines to be adaptable and compatible with different cloud providers’ APIs, services, and data management protocols. This adaptability ensures a seamless flow of data, regardless of its origin or destination.
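
One common way to get this adaptability is to hide each provider behind a small shared interface, so the pipeline code never talks to a provider API directly. The sketch below is a minimal illustration of that idea, not a production design: `ObjectStore`, `InMemoryStore`, and `copy_object` are hypothetical names, and the in-memory class stands in for real adapters that would wrap a provider SDK.

```python
from typing import Dict, Protocol


class ObjectStore(Protocol):
    """Minimal interface each cloud-specific adapter is assumed to implement."""

    def read(self, key: str) -> bytes: ...

    def write(self, key: str, data: bytes) -> None: ...


class InMemoryStore:
    """Stand-in for a real adapter (for example, one wrapping an S3 or GCS client)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._objects: Dict[str, bytes] = {}

    def read(self, key: str) -> bytes:
        return self._objects[key]

    def write(self, key: str, data: bytes) -> None:
        self._objects[key] = data


def copy_object(source: ObjectStore, destination: ObjectStore, key: str) -> int:
    """Copy one object between stores and return the number of bytes moved."""
    data = source.read(key)
    destination.write(key, data)
    return len(data)


# Two in-memory stores play the role of two different clouds.
cloud_a = InMemoryStore("cloud-a")
cloud_b = InMemoryStore("cloud-b")
cloud_a.write("events/2024-01-01.json", b'{"event": "signup"}')
bytes_moved = copy_object(cloud_a, cloud_b, "events/2024-01-01.json")
```

Because `copy_object` depends only on the interface, swapping one provider for another means writing a new adapter rather than changing the pipeline logic.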

Challenges in Building Multi-Cloud Data Pipelines

Constructing these pipelines comes with unique challenges:

Data Integration: Harmonizing disparate data formats and structures from various clouds is a daunting task. Ensuring data consistency and integrity requires robust ETL (Extract, Transform, Load) processes.

Latency and Performance: Data transfer between clouds can introduce latency. Efficient pipeline design minimizes these delays, for example by processing data close to where it is stored and moving only the results between clouds.

Security and Compliance: Navigating the varied security protocols and compliance standards of different clouds is essential for protecting sensitive data and adhering to legal requirements.

Vendor Lock-in and Portability: Avoiding dependency on a single cloud provider’s tools and ensuring the ability to move data and applications between clouds is crucial for operational flexibility.
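
To make the data integration challenge concrete, here is a minimal sketch of the "transform" step of an ETL process that maps records from two sources onto one schema. Both source layouts are assumptions made up for illustration: source A uses camelCase keys and epoch timestamps, while source B uses snake_case keys, string amounts, and ISO 8601 timestamps with an offset.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def normalize_source_a(record: Dict[str, Any]) -> Dict[str, Any]:
    """Source A (hypothetical): camelCase keys, timestamp in epoch seconds."""
    event_time = datetime.fromtimestamp(record["ts"], tz=timezone.utc)
    return {
        "user_id": str(record["userId"]),
        "amount": float(record["amount"]),
        "event_time": event_time.isoformat(),
    }


def normalize_source_b(record: Dict[str, Any]) -> Dict[str, Any]:
    """Source B (hypothetical): snake_case keys, string amount, ISO 8601 time."""
    event_time = datetime.fromisoformat(record["event_time"])
    return {
        "user_id": str(record["user_id"]),
        "amount": float(record["amount"]),
        # Convert to UTC so timestamps from both sources are comparable.
        "event_time": event_time.astimezone(timezone.utc).isoformat(),
    }


record_a = {"userId": 42, "amount": 19.99, "ts": 1704067200}
record_b = {
    "user_id": "42",
    "amount": "19.99",
    "event_time": "2024-01-01T01:00:00+01:00",
}

# Both records describe the same event and end up in the same shape.
unified = [normalize_source_a(record_a), normalize_source_b(record_b)]
```

Normalizing types and time zones at the boundary keeps every downstream step working against a single schema, whichever cloud the record came from.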

Architectural Considerations

Architecting a multi-cloud data pipeline requires careful planning:

Designing for Scalability: To handle data growth, architectures must be scalable. This often involves cloud-native features like elastic resource allocation and auto-scaling groups.

Ensuring Reliability and Fault Tolerance: Techniques like data mirroring, redundancy, and automated failover processes ensure high availability and data integrity.

Multi-Cloud Management: Infrastructure-as-code tools such as Terraform facilitate consistent management across clouds. For example, a single Terraform configuration can declare storage resources in both AWS and Google Cloud, keeping their setup consistent and repeatable:

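```hcl
# AWS: S3 bucket for pipeline data.
# New S3 buckets are private by default, so no ACL is set here; the inline
# `acl` argument is deprecated in version 4 and later of the AWS provider.
resource "aws_s3_bucket" "data_bucket" {
  bucket = "my-data-bucket"
}

# Google Cloud: Cloud Storage bucket for pipeline data.
resource "google_storage_bucket" "gcp_data_bucket" {
  name     = "my-gcp-data-bucket"
  location = "US"
}
```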
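
The reliability point above (redundancy and automated failover) can also be sketched at the application level. The following is a minimal illustration under stated assumptions, not a definitive implementation: `call_with_failover` is a hypothetical helper, and the two writer functions simulate a primary target that is down and a secondary target in another cloud that is healthy.

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(
    targets: Sequence[Callable[[], T]],
    retries_per_target: int = 3,
    base_delay_seconds: float = 0.1,
) -> T:
    """Try each target in order, retrying with exponential backoff before failing over."""
    last_error: Optional[Exception] = None
    for target in targets:
        for attempt in range(retries_per_target):
            try:
                return target()
            except Exception as error:  # real code would catch provider-specific errors
                last_error = error
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                time.sleep(base_delay_seconds * (2 ** attempt))
    raise RuntimeError("all targets failed") from last_error


attempts = {"primary": 0, "secondary": 0}


def write_to_primary() -> str:
    """Simulated write to a primary bucket that is currently unreachable."""
    attempts["primary"] += 1
    raise ConnectionError("primary region unavailable")


def write_to_secondary() -> str:
    """Simulated write to a mirrored bucket in a second cloud."""
    attempts["secondary"] += 1
    return "written to secondary"


result = call_with_failover(
    [write_to_primary, write_to_secondary],
    base_delay_seconds=0.0,  # no real waiting in this demonstration
)
```

Here the primary is tried three times before the call fails over to the secondary, which succeeds on its first attempt.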

Key Technologies and Tools

Tools like Apache NiFi and Apache Airflow are popular for building multi-cloud data pipelines:

Apache NiFi Example:

Apache NiFi simplifies data flow between systems. Below is a skeleton of a custom NiFi processor that takes a FlowFile from the incoming queue, rewrites its content, and routes it to a success relationship:

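```java
import java.io.IOException;
import java.io.OutputStream;
import java.util.Collections;
import java.util.Set;

import org.apache.nifi.flowfile.FlowFile;
import org.apache.nifi.processor.AbstractProcessor;
import org.apache.nifi.processor.ProcessContext;
import org.apache.nifi.processor.ProcessSession;
import org.apache.nifi.processor.Relationship;
import org.apache.nifi.processor.exception.ProcessException;
import org.apache.nifi.processor.io.OutputStreamCallback;

public class CustomProcessor extends AbstractProcessor {

    // Relationship that successfully processed FlowFiles are routed to
    static final Relationship SUCCESS = new Relationship.Builder()
            .name("success")
            .description("FlowFiles that were processed successfully")
            .build();

    @Override
    public Set<Relationship> getRelationships() {
        return Collections.singleton(SUCCESS);
    }

    @Override
    public void onTrigger(ProcessContext context, ProcessSession session) throws ProcessException {
        FlowFile flowFile = session.get();
        if (flowFile == null) {
            return; // nothing queued for this processor
        }

        // The FlowFile's new content is whatever gets written to `out`
        flowFile = session.write(flowFile, new OutputStreamCallback() {
            @Override
            public void process(OutputStream out) throws IOException {
                // Implement your processing logic here
            }
        });

        session.transfer(flowFile, SUCCESS);
    }
}
```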

Integration and Orchestration: Apache Airflow can orchestrate complex workflows. A simple DAG (Directed Acyclic Graph) in Airflow might look like this:

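```python
from datetime import datetime

from airflow import DAG
# Airflow 2.4 or later; older releases use
# airflow.operators.dummy_operator.DummyOperator instead.
from airflow.operators.empty import EmptyOperator

default_args = {
    "owner": "airflow",
    "start_date": datetime(2024, 1, 1),
}

# `schedule` replaces the deprecated `schedule_interval` argument in Airflow 2.4+
dag = DAG("multi_cloud_pipeline", default_args=default_args, schedule="@daily")

# Placeholder tasks marking the start and end of the pipeline
start = EmptyOperator(task_id="start", dag=dag)
end = EmptyOperator(task_id="end", dag=dag)

# `end` runs only after `start` has completed
start >> end
```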
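
To see what the "directed acyclic graph" part means without installing Airflow, the sketch below uses Python's standard-library `graphlib` module (Python 3.9 or later) to order a small set of tasks by their dependencies. The task names are hypothetical, and this only illustrates the ordering idea; it says nothing about how Airflow schedules tasks internally.

```python
from graphlib import CycleError, TopologicalSorter

# Each key maps a task to the set of tasks it depends on (hypothetical names).
dependencies = {
    "extract_from_aws": set(),
    "extract_from_gcp": set(),
    "transform": {"extract_from_aws", "extract_from_gcp"},
    "load_to_warehouse": {"transform"},
}

# A valid execution order: every task appears after all of its dependencies.
execution_order = list(TopologicalSorter(dependencies).static_order())

# A cycle makes the graph impossible to order, which is why pipelines must be acyclic.
cyclic = {"a": {"b"}, "b": {"a"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    cycle_detected = False
except CycleError:
    cycle_detected = True
```

The two extract tasks have no dependency on each other, so an orchestrator is free to run them in parallel, one per cloud, before the transform step starts.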

Implementing Best Practices in Security and Compliance

Ensuring the security and compliance of data pipelines involves:

Data Encryption: Use encryption standards like AES for data at rest and TLS for data in transit. Cloud providers offer tools like AWS KMS or Google Cloud KMS for key management.

Identity and Access Management (IAM): Properly configuring IAM roles and policies is critical. For instance, in AWS, IAM policies define permissions for actions on specific resources.

Regular Audits and Compliance Checks: Regular audits using tools like AWS Config or Azure Policy ensure adherence to compliance standards.
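
As an illustration of the IAM point, the sketch below builds a least-privilege AWS IAM policy document that only allows reading objects under one prefix of one bucket. The helper names and the `raw` prefix are assumptions for this example; the bucket name is reused from the Terraform snippet earlier. Treat it as a starting point to adapt, not a vetted policy.

```python
import json
from typing import Any, Dict


def read_only_bucket_policy(bucket_name: str, prefix: str) -> Dict[str, Any]:
    """Build an IAM policy document allowing reads under one prefix of one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucketPrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket_name}"],
                # Restrict listing to keys under the given prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/{prefix}/*"],
            },
        ],
    }


def has_wildcard_action(policy: Dict[str, Any]) -> bool:
    """Return True if any statement grants an action containing a wildcard."""
    return any(
        "*" in action
        for statement in policy["Statement"]
        for action in statement["Action"]
    )


policy = read_only_bucket_policy("my-data-bucket", "raw")
policy_json = json.dumps(policy, indent=2)
```

A check like `has_wildcard_action` can run in CI as a lightweight guard against overly broad permissions slipping into pipeline roles.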

Monitoring and Optimization

Effective monitoring and optimization practices include:

Setting up Monitoring: Implement monitoring using solutions like Amazon CloudWatch, Google Cloud's operations suite (Cloud Monitoring and Cloud Logging), or third-party tools to track pipeline performance and data quality.

Performance Tuning: Optimize data processing by selecting efficient data storage formats (e.g., Parquet) and implementing data partitioning strategies.

Cost Management: Utilize cost management tools provided by cloud providers to monitor usage and optimize costs. For example, AWS Cost Explorer helps in analyzing and identifying cost-saving opportunities.
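
The partitioning strategy mentioned under performance tuning often takes the form of date-based, Hive-style directory names, which let query engines skip partitions that fall outside a filter. The sketch below builds such a path; `partition_path` is a hypothetical helper, and the bucket and dataset names are reused from the Spark example in this post.

```python
from datetime import date


def partition_path(base_uri: str, dataset: str, day: date) -> str:
    """Return a Hive-style partition path ending in year=YYYY/month=MM/day=DD/."""
    return (
        f"{base_uri.rstrip('/')}/{dataset}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
    )


path = partition_path("gs://my-gcs-bucket", "transformed-data", date(2024, 1, 15))
print(path)
```

When writing with Spark, the same layout can be produced by calling `partitionBy("year", "month", "day")` on the DataFrame writer, assuming those columns exist in the data.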

Advanced Data Transformation Techniques

In multi-cloud data pipelines, advanced data transformation techniques are often required to ensure data compatibility and maximize its utility across different cloud platforms. These transformations can range from simple format changes to complex aggregations and analytics.

Data Transformation Example using Apache Spark:

Apache Spark is widely used for large-scale data processing. Below is an example of using Spark to perform data transformation:

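```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import col

# Initialize a Spark session
spark = SparkSession.builder.appName("MultiCloudDataTransformation").getOrCreate()

# Load data into a DataFrame.
# Note: the s3:// scheme works on Amazon EMR; open-source Spark typically
# needs s3a:// together with the hadoop-aws module.
df = spark.read.csv("s3://my-bucket/input-data.csv", header=True, inferSchema=True)

# Perform data transformation: derive a new column by doubling an existing numeric column
transformed_df = df.withColumn("new_column", col("existing_column") * 2)

# Write transformed data to another cloud's storage.
# Note: gs:// paths require the Cloud Storage connector for Hadoop on the cluster.
transformed_df.write.parquet("gs://my-gcs-bucket/transformed-data/")
```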

This example demonstrates reading data from an AWS S3 bucket, performing a simple transformation, and then writing the transformed data to a Google Cloud Storage bucket, illustrating a typical use case in a multi-cloud environment.

Real-World Applications Across Industries

Real-world applications of multi-cloud data pipelines demonstrate their practicality and efficiency:

E-commerce Integration: An e-commerce company integrated customer data from various cloud sources into a central analytics platform, enhancing customer insights and enabling personalized marketing strategies.

Healthcare Data Management: A healthcare provider implemented a secure, compliant multi-cloud pipeline to manage patient data, ensuring privacy and meeting various regional healthcare regulations.

Conclusion

Building and managing data pipelines in multi-cloud environments, though complex, is an essential task for modern businesses. By embracing the right strategies, tools, and practices, organizations can achieve efficient, secure, and scalable data management across multiple cloud platforms. As cloud technologies continue to evolve, staying informed and adaptable is key to navigating this dynamic landscape successfully.
