In today’s fast-paced digital landscape, the ability to process and analyze data in real time has become a crucial factor in business success. Real-time data processing allows organizations to make immediate, data-driven decisions and gain a competitive edge across industries. In this blog, we will look at the fundamentals of real-time data processing and how efficient data pipelines can streamline it. We will also cover the key components and walk through code examples for better understanding.

What is Real-time Data Processing?

Real-time data processing involves handling and analyzing data as soon as it is generated. Unlike batch processing, which collects data and processes it at predefined intervals, real-time processing delivers results within moments of each event. This is especially critical in scenarios where quick decision-making is paramount, such as financial transactions, monitoring systems, and online user interactions.

The significance of real-time data processing lies in its ability to offer up-to-the-minute information, enabling businesses to respond promptly to changing conditions. This agility enhances customer experiences, optimizes operations, and facilitates proactive decision-making.

Comparison with Batch Processing

In batch processing, data is collected over a period and processed in chunks. While this approach is suitable for certain scenarios, real-time processing offers a more immediate and continuous analysis of data. The comparison is not always black and white, and the choice between batch and real-time processing depends on the specific needs of the business. However, the shift towards real-time processing is evident in industries where timely insights are critical.
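To make the contrast concrete, here is a minimal sketch in plain Python, with no streaming framework involved. The sample values and the function names `batch_average` and `running_averages` are made up for illustration: the batch version waits for the full collection, while the streaming version emits an updated result after every event.

```python
from typing import Iterable, Iterator, List


def batch_average(events: List[float]) -> float:
    """Batch style: wait until all events are collected, then compute once."""
    return sum(events) / len(events)


def running_averages(events: Iterable[float]) -> Iterator[float]:
    """Streaming style: update the result as each event arrives."""
    total = 0.0
    count = 0
    for value in events:
        total += value
        count += 1
        yield total / count


readings = [10.0, 20.0, 30.0, 40.0]  # illustrative values only

print(batch_average(readings))           # one answer, after all data is in
print(list(running_averages(readings)))  # one answer per incoming event
```

The final streaming value matches the batch result; the difference is that intermediate answers are available while data is still arriving.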

Use Cases

Real-time data processing finds application in various industries. For instance:

Finance: Detecting fraudulent transactions in real time.

Healthcare: Monitoring patient vitals and triggering alerts for immediate attention.

E-commerce: Personalizing user experiences based on real-time behavior.

IoT: Analyzing sensor data for instant decision-making in smart environments.
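As a rough illustration of the finance use case, the sketch below checks each transaction the moment it arrives against that card’s running average. The rule (flag anything above three times the average so far), the function name `flag_suspicious`, and the sample data are all hypothetical; production fraud detection relies on far richer signals.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

Transaction = Tuple[str, float]  # (card_id, amount)


def flag_suspicious(transactions: Iterable[Transaction],
                    factor: float = 3.0) -> Iterator[Transaction]:
    """Yield transactions whose amount exceeds factor x the card's running average."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for card, amount in transactions:
        # Only judge a card once it has some history to compare against.
        if counts[card] > 0 and amount > factor * (totals[card] / counts[card]):
            yield (card, amount)
        totals[card] += amount
        counts[card] += 1


stream = [
    ("card-1", 20.0),
    ("card-1", 25.0),
    ("card-2", 500.0),  # first transaction for this card, so no baseline yet
    ("card-1", 400.0),  # far above card-1's average so far
]

print(list(flag_suspicious(stream)))
```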

Key Components of Real-time Data Processing

Data Producers

Data producers are the sources that generate real-time data. These can be sensors, applications, user interactions, or any system producing continuous streams of information. Examples of this include IoT devices emitting sensor data, application logs, or social media feeds.
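A data producer can be sketched as a generator that emits one encoded event at a time. The field names (`sensor_id`, `temperature_c`, `ts`) and the JSON encoding are assumptions chosen for this example, not a required format.

```python
import json
import time
from typing import Iterable, Iterator


def sensor_events(sensor_id: str, temperatures: Iterable[float]) -> Iterator[bytes]:
    """Yield one JSON-encoded event per temperature reading."""
    for temperature in temperatures:
        event = {
            "sensor_id": sensor_id,
            "temperature_c": temperature,
            "ts": time.time(),  # creation time, useful later for measuring latency
        }
        yield json.dumps(event).encode("utf-8")


for payload in sensor_events("sensor-42", [21.5, 21.7, 22.0]):
    print(payload)
```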

Message Brokers

Message brokers act as intermediaries facilitating communication between data producers and consumers. Apache Kafka is a widely used distributed streaming platform that excels in managing the flow of real-time data. It allows seamless communication between different components of a data processing pipeline.

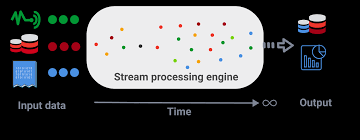
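The idea of a broker sitting between producers and consumers can be illustrated with a toy in-memory version. This is a teaching sketch only: the `InMemoryBroker` class is hypothetical and leaves out everything that makes a real broker such as Kafka useful in production (persistence, replication, networking).

```python
from collections import defaultdict
from typing import Dict, List


class InMemoryBroker:
    """Toy broker: each topic is an append-only list of messages."""

    def __init__(self) -> None:
        self._topics: Dict[str, List[str]] = defaultdict(list)

    def publish(self, topic: str, message: str) -> int:
        """Append a message and return its offset within the topic."""
        log = self._topics[topic]
        log.append(message)
        return len(log) - 1

    def read(self, topic: str, offset: int) -> List[str]:
        """Return every message at or after the given offset."""
        return self._topics[topic][offset:]


broker = InMemoryBroker()
broker.publish("orders", "order-1")
broker.publish("orders", "order-2")

# Two independent consumers read the same topic at their own pace.
print(broker.read("orders", 0))  # a consumer starting from the beginning
print(broker.read("orders", 1))  # a consumer that already handled offset 0
```

Because producers only append and consumers only track an offset, neither side needs to know about the other.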

Stream Processing Engines

Stream processing engines are tools designed for the real-time analysis of streaming data. They process data as it flows through the system rather than after it has been stored. Examples include Apache Flink, Apache Storm, and Spark Streaming. These engines let organizations derive insights from data in motion.
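One operation these engines commonly provide is windowed aggregation. The sketch below imitates a tumbling (fixed-size, non-overlapping) window in plain Python. It assumes events arrive in timestamp order with integer timestamps in seconds; the function name `tumbling_window_counts` and the sample events are invented for this example. Real engines also handle late and out-of-order data, which this does not.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event type)


def tumbling_window_counts(events: Iterable[Event],
                           window_seconds: int) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start, counts per event type) each time a window closes."""
    current_start = None
    counts: Counter = Counter()
    for timestamp, kind in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_start is not None and window_start != current_start:
            # The event belongs to a new window, so emit the finished one.
            yield current_start, dict(counts)
            counts = Counter()
        current_start = window_start
        counts[kind] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window


events = [(0, "click"), (3, "view"), (9, "click"),
          (10, "click"), (14, "view"), (21, "click")]

for start, window in tumbling_window_counts(events, window_seconds=10):
    print(start, window)
```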

Data Consumers

Data consumers are the endpoints that utilize the processed data. This can include databases, analytics systems, dashboards, or other downstream applications. The efficiency of real-time data processing is measured not only by the speed of analysis but also by the timely delivery of relevant information to consumers.
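As a small sketch of a downstream consumer, the code below writes processed results into a database that a dashboard could query. It uses an in-memory SQLite database from the Python standard library; the table name `page_views` and the sample rows are placeholders for this example.

```python
import sqlite3
from typing import Iterable, Tuple


def write_to_sink(conn: sqlite3.Connection, rows: Iterable[Tuple[str, int]]) -> None:
    """Insert processed (page, views) results and commit them."""
    conn.executemany("INSERT INTO page_views (page, views) VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory database, discarded on exit
conn.execute("CREATE TABLE page_views (page TEXT, views INTEGER)")

write_to_sink(conn, [("/home", 3), ("/pricing", 1)])

# A dashboard or report would run queries like this one.
print(conn.execute("SELECT page, views FROM page_views ORDER BY views DESC").fetchall())
```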

Challenges in Real-time Data Processing

Real-time data processing comes with its own set of challenges that organizations need to address for seamless operation.

Latency Issues

Latency, or the delay between data generation and its availability for analysis, is a critical concern. High latency can impact the relevance of insights, especially in time-sensitive applications. Minimizing latency requires optimizing every component of the data processing pipeline.
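One simple way to track latency is to stamp each event when it is created and compare that with the time it finishes processing. The sketch below assumes both timestamps are available in integer milliseconds; the sample numbers are invented.

```python
import statistics
from typing import Iterable, List, Tuple


def latencies_ms(events: Iterable[Tuple[int, int]]) -> List[int]:
    """Return processed_at - created_at for each (created_at, processed_at) pair."""
    return [processed - created for created, processed in events]


# (created_at_ms, processed_at_ms) pairs; illustrative values only
samples = [(0, 12), (1000, 1020), (2000, 2015), (3000, 3250)]

values = latencies_ms(samples)
print("median:", statistics.median(values), "ms")
print("worst:", max(values), "ms")
```

Looking at the worst case alongside the median matters here: a single slow event (250 ms in this sample) is invisible in the median but may be exactly the one that arrives too late to act on.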

Scalability Concerns

As data volumes increase, the system must scale with them. The processing infrastructure needs to accommodate growing data streams without degradation in performance.
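A common way to spread load is to split a stream into partitions by key, so that different workers can handle different partitions in parallel while all events for one key stay together. The sketch below uses CRC32 as a stable hash purely for illustration; Kafka’s own partitioner uses a different hash function, and the names `partition_for` and `group_by_partition` are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition using a hash that is stable across runs."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def group_by_partition(keys: Iterable[str], num_partitions: int) -> Dict[int, List[str]]:
    """Group keys by the partition they would be routed to."""
    groups = defaultdict(list)
    for key in keys:
        groups[partition_for(key, num_partitions)].append(key)
    return dict(groups)


keys = ["user-1", "user-2", "user-3", "user-1", "user-4"]
print(group_by_partition(keys, num_partitions=3))
```

Python’s built-in `hash()` is deliberately avoided because its result for strings changes between interpreter runs by default, which would route the same key to different partitions after a restart.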

Fault Tolerance and Reliability

In real-time processing, systems must be resilient to failures. Ensuring fault tolerance and reliability is essential to prevent data loss and maintain the integrity of the processing pipeline. Redundancy, monitoring, and failover mechanisms play a key role in achieving high reliability.
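The core of many recovery schemes is a checkpoint: record how far processing has progressed, and after a failure continue from that point instead of starting over. The following sketch simulates one crash and one restart in plain Python. The names `process_from_checkpoint` and `flaky_handler` are made up, and the checkpoint is kept in memory here, whereas a real system would store it durably.

```python
from typing import Callable, Dict, List


def process_from_checkpoint(messages: List[str],
                            checkpoint: Dict[str, int],
                            handler: Callable[[str], None]) -> None:
    """Process messages from the saved offset, recording progress after each one."""
    for offset in range(checkpoint["offset"], len(messages)):
        handler(messages[offset])
        checkpoint["offset"] = offset + 1  # only advanced after success


messages = ["a", "b", "c", "d"]
checkpoint = {"offset": 0}
processed: List[str] = []
state = {"crashed_once": False}


def flaky_handler(message: str) -> None:
    """Fail the first time it sees 'c' to simulate a crash."""
    if message == "c" and not state["crashed_once"]:
        state["crashed_once"] = True
        raise RuntimeError("simulated crash")
    processed.append(message)


try:
    process_from_checkpoint(messages, checkpoint, flaky_handler)
except RuntimeError:
    pass  # a real system would restart the worker here

# After the "restart", processing continues from the checkpoint, not from zero.
process_from_checkpoint(messages, checkpoint, flaky_handler)
print(processed, checkpoint)
```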

Streamlining Data Pipelines with Apache Kafka

Apache Kafka, an open-source distributed event streaming platform, is designed to address the challenges of real-time data processing. Kafka provides a scalable and fault-tolerant solution for handling streams of data. It is characterized by its distributed architecture and publish-subscribe model.

Kafka in Real-time Data Processing

In a typical data processing pipeline using Kafka:

- Producers send data to Kafka topics.

- Consumers subscribe to these topics and process the incoming data.

- Kafka ensures the reliable and fault-tolerant transportation of data between producers and consumers.

Setting Up a Basic Kafka Pipeline (Python example below)

Let us consider a simple Python example for producing messages to a Kafka topic using the kafka-python library (imported as kafka):

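```python
from kafka import KafkaProducer

# Connect to the Kafka cluster (replace the placeholder with your broker address)
producer = KafkaProducer(bootstrap_servers='your_kafka_server')
topic = 'your_topic'

# Send ten UTF-8 encoded messages to the topic
for i in range(10):
    message = f"Message {i}"
    producer.send(topic, value=message.encode('utf-8'))

# Wait for buffered messages to be delivered, then release resources
producer.flush()
producer.close()
```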

In this example, a producer sends ten messages to the specified topic.
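Building on that, a slightly more defensive variant might serialize structured events as JSON, key each message, and wait for the broker to acknowledge it. This is a sketch under assumptions: the `user_id` field, the function names, and the server and topic values are placeholders, and it requires the kafka-python package plus a reachable broker to actually send anything.

```python
import json
from typing import Any, Dict, Iterable


def serialize_event(event: Dict[str, Any]) -> bytes:
    """Encode an event dict as UTF-8 JSON bytes."""
    return json.dumps(event, sort_keys=True).encode("utf-8")


def send_events(bootstrap_servers: str, topic: str,
                events: Iterable[Dict[str, Any]]) -> None:
    """Send events keyed by 'user_id' and wait for each acknowledgement."""
    # Imported here so the helper above works without kafka-python installed.
    from kafka import KafkaProducer

    producer = KafkaProducer(
        bootstrap_servers=bootstrap_servers,
        key_serializer=lambda key: key.encode("utf-8"),
        value_serializer=serialize_event,
        acks="all",  # wait until all in-sync replicas have the record
    )
    try:
        for event in events:
            future = producer.send(topic, key=event["user_id"], value=event)
            future.get(timeout=10)  # raises if the send failed
    finally:
        producer.close()


# Placeholder usage; needs a running broker:
# send_events("your_kafka_server", "your_topic", [{"user_id": "u1", "action": "click"}])
print(serialize_event({"user_id": "u1", "action": "click"}))
```

Waiting on every send keeps the example easy to follow but limits throughput; batching sends and checking the results afterwards is the usual trade-off.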

Integrating Stream Processing Engines

Popular Stream Processing Engines

Several stream processing engines enhance the capabilities of real-time data processing. Apache Flink is one such engine, known for its powerful and expressive APIs.

Apache Flink for Real-time Processing

Apache Flink enables the creation of sophisticated stream processing applications. Here’s a simple example using Apache Flink in Java to convert incoming text messages to uppercase:

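```java
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

// Sample Java code for a simple Flink job
public class SimpleFlinkJob {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // Read lines from a local socket and convert each one to uppercase
        DataStream<String> stream = env
            .socketTextStream("localhost", 9999)
            .map(String::toUpperCase);

        stream.print();
        env.execute("Simple Flink Job");
    }
}
```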

This Flink job reads text messages from a socket and transforms them to uppercase before printing the results.

Ensuring Reliability and Scalability

Fault Tolerance with Kafka (Python Example below)

Kafka provides features for fault tolerance. Consumers can commit their offsets, and in case of failure, they can resume from the last committed offset. Here’s an example:

```python
# Sample Python code for Kafka consumer with fault tolerance
from kafka import KafkaConsumer

consumer = KafkaConsumer(
    'your_topic',
    group_id='your_consumer_group',
    bootstrap_servers='your_kafka_server',
    enable_auto_commit=True,       # commit offsets periodically in the background
    auto_offset_reset='earliest',  # start from the oldest message if no offset is committed
)

# Print each incoming message as it arrives
for message in consumer:
    print(message.value.decode('utf-8'))
```

In this example, the consumer commits offsets automatically at regular intervals. After a failure or restart, it resumes from the last committed offset, so messages received since that commit may be processed again.
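When reprocessing needs tighter control, one option is to turn off automatic commits and commit only after a message has been handled. The sketch below assumes the kafka-python package and a reachable broker; the `handle` function is a hypothetical stand-in for real processing, and the server, topic, and group values are placeholders.

```python
def handle(value: bytes) -> str:
    """Stand-in for real processing: decode the payload and uppercase it."""
    return value.decode("utf-8").upper()


def consume_with_manual_commit(bootstrap_servers: str, topic: str, group_id: str) -> None:
    """Process messages one by one, committing the offset after each success."""
    # Imported here so handle() can be used without kafka-python installed.
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(
        topic,
        group_id=group_id,
        bootstrap_servers=bootstrap_servers,
        enable_auto_commit=False,      # offsets are committed explicitly below
        auto_offset_reset="earliest",
    )
    try:
        for message in consumer:
            print(handle(message.value))
            consumer.commit()  # reached only if handling did not raise
    finally:
        consumer.close()


# Placeholder usage; needs a running broker:
# consume_with_manual_commit("your_kafka_server", "your_topic", "your_consumer_group")
print(handle(b"hello"))
```

Committing after every message is the simplest form of this pattern but adds a round trip per message; committing once per batch is a common compromise. Either way, a crash between handling and committing still leads to that message being delivered again, so handlers should tolerate duplicates.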

Scalability Considerations

Scalability is a crucial aspect of real-time data processing. Kafka’s distributed nature allows it to scale horizontally, handling increased loads by adding more brokers. Ensuring that the stream processing engine can scale with the growing data volume is equally important.
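On the processing side, scaling out usually means dividing a topic’s partitions among more workers. The sketch below shows a simplified round-robin assignment in plain Python; the function name `assign_partitions` and the consumer names are invented, and Kafka’s actual assignment strategies are more involved than this.

```python
from typing import Dict, List, Sequence


def assign_partitions(partitions: Sequence[int],
                      consumers: Sequence[str]) -> Dict[str, List[int]]:
    """Distribute partitions across consumers in round-robin order."""
    assignment: Dict[str, List[int]] = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


partitions = list(range(6))

# With two consumers, each handles three partitions.
print(assign_partitions(partitions, ["c1", "c2"]))

# Adding a third consumer reduces each one's share to two partitions.
print(assign_partitions(partitions, ["c1", "c2", "c3"]))
```

One consequence of this model is that the number of partitions caps useful parallelism: a seventh consumer in this example would have nothing to read.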

Conclusion

Real-time data processing is a game-changer in today’s data-driven world. Understanding its components, overcoming its challenges, and leveraging tools like Apache Kafka and stream processing engines are essential for building efficient data pipelines. By streamlining data pipelines, organizations can extract valuable insights in real time, empowering them to make informed decisions and stay competitive in an ever-evolving landscape. Embracing these technologies is not just a technological advancement but a strategic move towards a more agile and responsive business ecosystem.
