Data preprocessing is a crucial initial phase of the data analysis process. It encompasses several essential steps, including data cleaning, transformation, and normalization. The goal is to prepare raw data so that it is cleaner, more accurate, and better suited to subsequent analysis and modeling. In this blog, we will walk through each of these steps in detail, with practical code examples in Python using the pandas and scikit-learn libraries.

Data Cleaning

Data cleaning involves identifying and rectifying errors, inconsistencies, and inaccuracies in the dataset. This process ensures that the data is reliable and devoid of any anomalies that might adversely affect downstream analyses.

Handling Missing Values

Missing values are a common occurrence in real-world datasets and can result from a variety of factors such as data collection errors or data entry oversights. Addressing missing values is crucial to prevent biased or incomplete analyses. In Python, you can use the pandas library to detect and handle missing values.

python

import pandas as pd

# Load the dataset

data = pd.read_csv('data.csv')

# Identify missing values

missing_values = data.isnull().sum()

# Handle missing values (e.g., fill with mean)

data['column_name'] = data['column_name'].fillna(data['column_name'].mean())
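As a minimal sketch of the same idea on a small in-memory table, the snippet below counts missing values per column, then fills a numeric column with its median and a text column with its most frequent value. The column names `age` and `city` and all values are made up for illustration, and median and mode are shown only as alternatives to the mean.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; column names and values are illustrative only
df = pd.DataFrame({
    "age": [25.0, np.nan, 40.0, 31.0],
    "city": ["Paris", "Lima", None, "Lima"],
})

# Count missing values per column
missing_counts = df.isnull().sum()

# Fill the numeric column with its median (less sensitive to outliers than the mean)
df["age"] = df["age"].fillna(df["age"].median())

# Fill the text column with its most frequent value
df["city"] = df["city"].fillna(df["city"].mode()[0])
```

Assigning the result back to the column, rather than calling `fillna` with `inplace=True` on a selected column, avoids chained-assignment problems in recent pandas versions.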

Removing Duplicates

Duplicate rows can introduce redundancy and distort analysis results. Identifying and removing duplicates ensures that each data point is unique and contributes meaningfully to the analysis.

python

# Remove duplicate rows

data.drop_duplicates(inplace=True)
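Before dropping anything, it often helps to see how many rows are affected. The following sketch, on a made-up table with hypothetical `customer` and `amount` columns, counts exact duplicates, removes them, and also shows how duplicates can be defined on a subset of columns.

```python
import pandas as pd

# Hypothetical toy data; the second and third rows are exact duplicates
df = pd.DataFrame({
    "customer": ["ana", "ben", "ben", "cy"],
    "amount": [10, 20, 20, 30],
})

# Boolean mask marking rows that repeat an earlier row
dup_mask = df.duplicated()
n_duplicates = int(dup_mask.sum())

# Keep the first occurrence of each row and renumber the index
deduped = df.drop_duplicates(keep="first").reset_index(drop=True)

# Treat rows as duplicates when only the chosen columns match
deduped_by_customer = df.drop_duplicates(subset=["customer"], keep="first")
```

Whether two rows that share a customer but differ elsewhere count as duplicates depends on the dataset, so the `subset` choice here is only an assumption for the example.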

Data Transformation

Data transformation involves reshaping or converting data into a more suitable format for analysis. This step often includes encoding categorical variables, scaling numerical features, and even extracting new features from existing ones.

Encoding Categorical Variables

Categorical variables, such as “Gender” or “Country,” need to be encoded into numerical values to be compatible with machine learning algorithms. One-hot encoding is a widely used technique that creates binary columns for each category, representing its presence or absence.

python

# Convert categorical variables to numerical using one-hot encoding

encoded_data = pd.get_dummies(data, columns=['categorical_column'])
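To make the resulting columns concrete, here is a small sketch on invented data (the `country` and `sales` columns are hypothetical). It also shows the `drop_first` option, which some linear models benefit from because it removes one redundant column per variable.

```python
import pandas as pd

# Hypothetical toy data; "country" is the categorical column
df = pd.DataFrame({
    "country": ["FR", "PE", "FR", "JP"],
    "sales": [100, 150, 120, 90],
})

# One binary column per category; dtype=int gives 0/1 instead of True/False
encoded = pd.get_dummies(df, columns=["country"], dtype=int)

# drop_first=True drops the first category of each encoded variable
encoded_reduced = pd.get_dummies(df, columns=["country"], drop_first=True, dtype=int)
```

The new columns are named after the original column and the category, for example `country_FR`.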

Scaling Numerical Features

Numerical features can have varying scales, which might lead to biased analyses or models that are sensitive to the scale of input data. Scaling these features helps ensure that they are on a similar scale.

python

from sklearn.preprocessing import StandardScaler

# Initialize scaler

scaler = StandardScaler()

# Scale numerical features

scaled_features = scaler.fit_transform(data[['feature1', 'feature2']])
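When the scaled features feed a model that is evaluated on held-out data, a common practice is to fit the scaler on the training rows only and reuse it on the test rows. The sketch below illustrates this with randomly generated data; the feature meanings, the split size, and the random seeds are assumptions made for the example.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Hypothetical feature matrix: two columns on very different scales
rng = np.random.default_rng(0)
X = np.column_stack([
    rng.normal(50_000, 15_000, size=100),  # an income-like feature
    rng.normal(12, 3, size=100),           # a years-of-education-like feature
])

X_train, X_test = train_test_split(X, test_size=0.2, random_state=0)

scaler = StandardScaler()
# Learn the mean and standard deviation from the training rows only
X_train_scaled = scaler.fit_transform(X_train)
# Reuse those training statistics on the held-out rows
X_test_scaled = scaler.transform(X_test)
```

Fitting on the full dataset would let information from the test rows leak into the preprocessing step.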

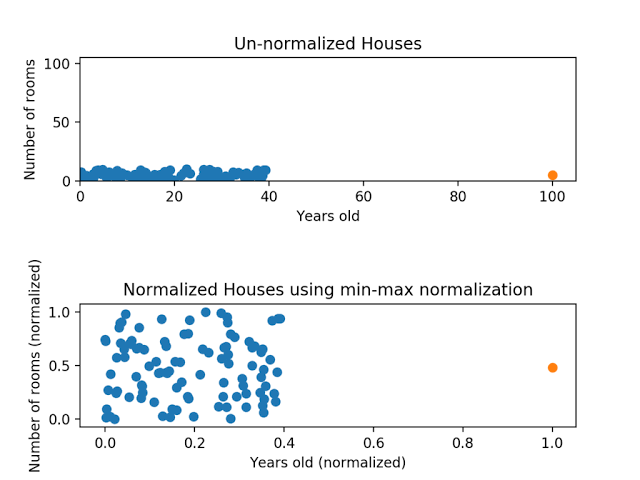

Data Normalization

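Data normalization rescales features to a common scale. Min-Max normalization maps values to a fixed range, typically between 0 and 1, while Z-Score normalization centers them at 0 with a standard deviation of 1. This step is especially important for algorithms that rely on distance measures, such as K-means clustering, and it also tends to help gradient-based models such as neural networks train more stably.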

Min-Max Normalization

Min-Max normalization scales the data so that it falls within a specified range, often [0, 1]. This ensures that all features have a consistent scale and are not dominated by those with larger values.

python

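# Min-Max normalization (numeric columns only; text columns cannot be subtracted)

numeric_data = data.select_dtypes(include='number')

normalized_data = (numeric_data - numeric_data.min()) / (numeric_data.max() - numeric_data.min())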
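The manual formula above has a scikit-learn counterpart, `MinMaxScaler`. The sketch below applies both to a made-up table (the `height_cm` and `weight_kg` columns are hypothetical) to show that they agree. The fitted scaler has the practical advantage that it can be reused on new data.

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical toy data
df = pd.DataFrame({
    "height_cm": [150.0, 160.0, 170.0, 190.0],
    "weight_kg": [50.0, 60.0, 80.0, 100.0],
})

# Manual min-max formula, applied column by column
manual = (df - df.min()) / (df.max() - df.min())

# Same rescaling with scikit-learn
scaler = MinMaxScaler(feature_range=(0, 1))
scaled = pd.DataFrame(scaler.fit_transform(df), columns=df.columns, index=df.index)

# Largest absolute difference between the two results
max_difference = float((manual - scaled).abs().max().max())
```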

Z-Score Normalization

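Z-Score normalization, also known as standardization or Standard Score normalization, rescales the data to have a mean of 0 and a standard deviation of 1. It is the same kind of transformation that StandardScaler applies, and it is particularly useful for methods that are sensitive to feature scale or that assume roughly Gaussian-distributed inputs.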

python

# Z-Score normalization (numeric columns only)

numeric_data = data.select_dtypes(include='number')

normalized_features = (numeric_data - numeric_data.mean()) / numeric_data.std()
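One detail to be aware of: pandas `std()` defaults to the sample standard deviation (`ddof=1`), while scikit-learn's `StandardScaler` uses the population standard deviation (`ddof=0`), so the two give slightly different z-scores. The following sketch demonstrates this on a made-up column named `x`.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical toy column with mean 5 and population standard deviation 2
df = pd.DataFrame({"x": [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]})

# pandas default: sample standard deviation (ddof=1)
z_sample = (df - df.mean()) / df.std()

# ddof=0 gives the population standard deviation, matching StandardScaler
z_population = (df - df.mean()) / df.std(ddof=0)

z_sklearn = StandardScaler().fit_transform(df)

# Difference between the ddof=0 result and scikit-learn (expected to be negligible)
max_diff = float(np.abs(z_population.to_numpy() - z_sklearn).max())
```

For large datasets the difference is small, but it matters when results from the two libraries need to match exactly.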

Enhancing Data Analysis through Preprocessing

Data preprocessing significantly contributes to the success and reliability of data analysis. It ensures that the data is well-conditioned, allowing subsequent analyses and modeling to yield more accurate and meaningful results. Let’s explore how each preprocessing step improves the quality of data analysis with practical examples.

Handling Missing Values

Consider a dataset containing information about customer purchases, including product name, price, and quantity. Some entries in the “price” column are missing.

By filling missing values with the mean or median of the column, you keep those rows in the analysis instead of dropping them, so the results better reflect overall purchasing behavior. Left unaddressed, missing values can bias your analysis and lead to inaccurate insights. Keep in mind that imputation is itself an approximation: the median is usually the safer choice when prices are skewed by a few expensive items.

python

import pandas as pd

# Load the dataset

data = pd.read_csv('customer_purchases.csv')

# Calculate mean of the 'price' column

mean_price = data['price'].mean()

# Fill missing values in 'price' with the mean

data['price'] = data['price'].fillna(mean_price)

Now you can proceed with your analysis using the cleaned data.
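To see how the choice of fill value can matter, here is a small sketch on invented purchase data (product names, prices, and the `price_was_missing` flag column are all hypothetical). It compares mean and median imputation when one price is much larger than the others, and records which values were originally missing.

```python
import numpy as np
import pandas as pd

# Hypothetical purchases; names and prices are illustrative only
purchases = pd.DataFrame({
    "product": ["pen", "book", "lamp", "desk", "mug"],
    "price": [2.0, np.nan, 30.0, 200.0, np.nan],
    "quantity": [10, 2, 1, 1, 4],
})

# Record which prices were missing before filling them
purchases["price_was_missing"] = purchases["price"].isna()

mean_filled = purchases["price"].fillna(purchases["price"].mean())
median_filled = purchases["price"].fillna(purchases["price"].median())

# Side-by-side view: the mean is pulled up by the 200.0 price, the median is not
comparison = pd.DataFrame({"mean_filled": mean_filled, "median_filled": median_filled})
```

Keeping the indicator column lets later analysis check whether the imputed rows behave differently from the observed ones.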

Scaling Numerical Features

Imagine you are analyzing a dataset that includes both the income of individuals and the number of years of education they have completed. These features have vastly different scales.

If you fail to scale these features appropriately, the income, which typically has larger values, may disproportionately influence the analysis. Scaling these features using techniques like StandardScaler ensures that both income and education contribute meaningfully to the analysis, preventing one from overshadowing the other.

python

from sklearn.preprocessing import StandardScaler

import pandas as pd

# Load the dataset

data = pd.read_csv('income_education.csv')

# Initialize scaler

scaler = StandardScaler()

# Scale 'income' and 'education' features

scaled_features = scaler.fit_transform(data[['income', 'education']])

# Create a new DataFrame with scaled features, keeping the original row index

scaled_data = pd.DataFrame(scaled_features, columns=['scaled_income', 'scaled_education'], index=data.index)

Now you can proceed with your analysis using the scaled data.
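The effect of scale on distance-based comparisons can be illustrated with a tiny made-up example. In the sketch below, the three rows and the helper `distance` are assumptions for illustration. In raw units the income difference dominates, while after standardization the education difference carries comparable weight.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical people: [income, years of education]
X = np.array([
    [30_000.0, 10.0],
    [31_000.0, 20.0],
    [60_000.0, 10.0],
])

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(a - b))

# Raw units: the 10-year education gap between persons 0 and 1 barely registers
raw_01 = distance(X[0], X[1])
raw_02 = distance(X[0], X[2])

# After standardization both features contribute on a comparable scale
X_scaled = StandardScaler().fit_transform(X)
scaled_01 = distance(X_scaled[0], X_scaled[1])
scaled_02 = distance(X_scaled[0], X_scaled[2])
```

Before scaling, person 2 looks far more different from person 0 than person 1 does. After scaling, the two distances are of similar size.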

Data Normalization

Suppose you are working on a dataset containing medical measurements, such as blood pressure and heart rate, for patients. These measurements may have different units and scales.

Applying data normalization techniques like Min-Max or Z-Score ensures that these measurements are treated equally in your analysis. This is particularly crucial when using algorithms that rely on distance calculations, as normalizing the data prevents any single measurement from dominating the analysis due to its scale.

python

import pandas as pd

# Load the dataset

data = pd.read_csv('medical_measurements.csv')

# Apply Min-Max normalization to 'blood_pressure' and 'heart_rate' columns

data['normalized_blood_pressure'] = (data['blood_pressure'] - data['blood_pressure'].min()) / (data['blood_pressure'].max() - data['blood_pressure'].min())

data['normalized_heart_rate'] = (data['heart_rate'] - data['heart_rate'].min()) / (data['heart_rate'].max() - data['heart_rate'].min())

Now you can proceed with your analysis using the normalized data.
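Since the same formula is repeated for each column, it can be wrapped in a small helper. The sketch below is one possible way to do that; the function name `min_max_normalize`, the `device_id` column, and the choice to map a constant column to 0.0 (to avoid dividing by zero) are assumptions for illustration.

```python
import pandas as pd

def min_max_normalize(series: pd.Series) -> pd.Series:
    """Rescale a numeric Series to [0, 1]; a constant Series maps to 0.0."""
    value_range = series.max() - series.min()
    if value_range == 0:
        # Avoid division by zero when every value is identical
        return pd.Series(0.0, index=series.index, name=series.name)
    return (series - series.min()) / value_range

# Hypothetical measurements; values are illustrative only
measurements = pd.DataFrame({
    "blood_pressure": [110, 120, 130, 150],
    "heart_rate": [60, 75, 90, 90],
    "device_id": [7, 7, 7, 7],
})

# Apply the helper to every column
normalized = measurements.apply(min_max_normalize)
```

Without the guard, a column whose values are all identical would turn into missing values after normalization.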

Conclusion

Data preprocessing is an indispensable phase that lays the groundwork for effective and insightful data analysis. By addressing missing values, removing duplicates, encoding categorical variables, scaling features, and normalizing data, you help ensure that your analysis is based on accurate and appropriately scaled information. The examples provided in this blog demonstrate how each preprocessing step contributes to the quality of analysis, leading to more reliable insights and informed decision-making. A solid grasp of data preprocessing lets data analysts and scientists get more out of their datasets.
