In this digital age, the deluge of data from a myriad of sources has redefined the boundaries of what’s possible. To extract valuable insights from vast datasets, specialized tools and frameworks are essential. In this blog post, we will dive into two pivotal technologies that have revolutionized the landscape of big data processing: Apache Hadoop and Apache Spark.

A Brief Introduction to Big Data Processing

The 21st century has witnessed an unparalleled data explosion, fueled by the proliferation of connected devices, social media interactions, and online transactions. Traditional data processing tools, designed for relatively modest volumes, are ill-equipped to handle the scale, complexity, and real-time nature of this data. This shift has given rise to big data processing, a paradigm that uses distributed tools and methods to store and analyze datasets too large for a single machine.

Apache Hadoop: Transforming Data Processing

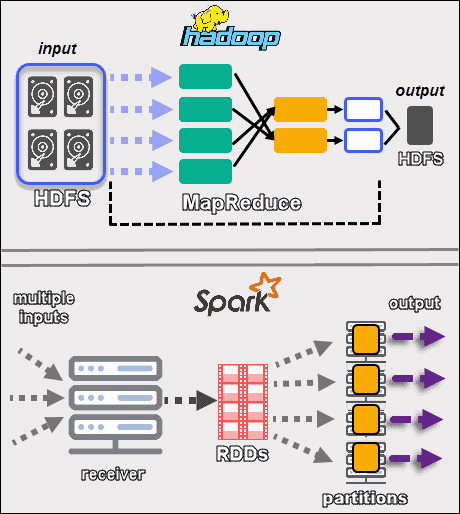

Apache Hadoop is often heralded as the trailblazer of big data processing. At its core lies the MapReduce programming model. The concept is simple yet powerful: divide a large problem into smaller tasks that can run concurrently on a distributed cluster of commodity hardware. In the map phase, each task processes one chunk of the input independently; in the reduce phase, the intermediate results are grouped by key and combined. This approach suits data-intensive jobs that can be split into independent pieces, such as aggregations, sorting, and filtering.
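A minimal single-machine sketch of the divide-and-combine idea, in Python: the input (here, made-up response sizes from a web log) is split into chunks, each chunk is summarized by an independent task, and the partial results are merged. Threads stand in for cluster nodes and all function names are hypothetical; Hadoop itself runs tasks as separate processes spread across machines.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_chunks(records, num_chunks):
    """Divide the input into roughly equal, independent chunks (like input splits)."""
    size = max(1, -(-len(records) // num_chunks))  # ceiling division, at least 1
    return [records[i:i + size] for i in range(0, len(records), size)]


def summarize(chunk):
    """Per-chunk task: (count, total, maximum), computed without looking at other chunks."""
    return len(chunk), sum(chunk), max(chunk)


def combine(a, b):
    """Merge two partial summaries into one."""
    return a[0] + b[0], a[1] + b[1], max(a[2], b[2])


def parallel_summary(values, num_workers=4):
    """Split the input, summarize each chunk concurrently, then combine the partial results."""
    if not values:
        return 0, 0, None
    chunks = split_into_chunks(values, num_workers)
    # Threads stand in for cluster nodes; no chunk depends on another.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(summarize, chunks))
    return reduce(combine, partials)


if __name__ == "__main__":
    response_sizes = [512, 0, 128, 2048, 64]  # made-up sample data
    print(parallel_summary(response_sizes, num_workers=2))
```

The combine step works only because count, sum, and maximum can be merged from partial results in any order; that same property is what lets MapReduce spread such aggregations over many machines.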

Consider a practical example to elucidate Hadoop’s capabilities. Imagine you have a colossal log file containing web server access data. Your goal is to count the number of times each IP address accesses the server. Hadoop’s MapReduce model excels in handling such scenarios:

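```java
// IP address counting with Hadoop MapReduce: mapper, reducer, and job driver
import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class IPAddressCount {

    // Mapper: emits (ipAddress, 1) for every log line
    public static class IPAddressMapper extends Mapper<LongWritable, Text, Text, IntWritable> {
        private static final IntWritable ONE = new IntWritable(1);
        private final Text ipAddress = new Text();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // The client IP is the first whitespace-separated field of the line
            String[] fields = value.toString().trim().split("\\s+");
            if (fields.length > 0 && !fields[0].isEmpty()) {
                ipAddress.set(fields[0]);
                context.write(ipAddress, ONE);
            }
        }
    }

    // Reducer: sums the counts emitted for each IP address
    public static class IPAddressReducer extends Reducer<Text, IntWritable, Text, IntWritable> {
        private final IntWritable total = new IntWritable();

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            int count = 0;
            for (IntWritable value : values) {
                count += value.get();
            }
            total.set(count);
            context.write(key, total);
        }
    }

    // Driver: configures and submits the job (args[0] = input path, args[1] = output path)
    public static void main(String[] args) throws Exception {
        Job job = Job.getInstance(new Configuration(), "ip address count");
        job.setJarByClass(IPAddressCount.class);
        job.setMapperClass(IPAddressMapper.class);
        job.setReducerClass(IPAddressReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```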
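Between the mapper and the reducer, Hadoop shuffles and sorts the map output so that every count for a given IP address reaches the same reduce() call. The plain-Python sketch below mimics that data flow in one process on a few made-up log lines; it is only an illustration, and `ip_mapper`, `ip_reducer`, and `run_job` are hypothetical names, not Hadoop APIs.

```python
from itertools import groupby
from operator import itemgetter


def ip_mapper(line):
    """Like IPAddressMapper: emit (ip, 1), taking the first whitespace-separated field."""
    fields = line.split()
    if fields:
        yield fields[0], 1


def ip_reducer(ip, counts):
    """Like IPAddressReducer: add up every 1 emitted for one IP address."""
    return ip, sum(counts)


def run_job(lines):
    """Run map, shuffle/sort, and reduce in a single process."""
    # Map phase: each line is handled independently.
    mapped = [pair for line in lines for pair in ip_mapper(line)]
    # Shuffle/sort phase: sorting by key places all pairs for one IP next to each
    # other, so each reduce call sees every value for its key.
    mapped.sort(key=itemgetter(0))
    # Reduce phase: one call per distinct key.
    return dict(
        ip_reducer(ip, (count for _, count in group))
        for ip, group in groupby(mapped, key=itemgetter(0))
    )


if __name__ == "__main__":
    sample_log = [  # made-up lines in Apache common log format
        '10.0.0.1 - - [01/Jan/2024:00:00:01 +0000] "GET / HTTP/1.1" 200 512',
        '10.0.0.2 - - [01/Jan/2024:00:00:02 +0000] "GET /a HTTP/1.1" 404 0',
        '10.0.0.1 - - [01/Jan/2024:00:00:03 +0000] "GET /b HTTP/1.1" 200 128',
    ]
    print(run_job(sample_log))
```

Because summing is associative and commutative, Hadoop can also run the reducer logic as a combiner on each mapper's local output (registered with `job.setCombinerClass(...)`), which shrinks the amount of data sent over the network during the shuffle.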

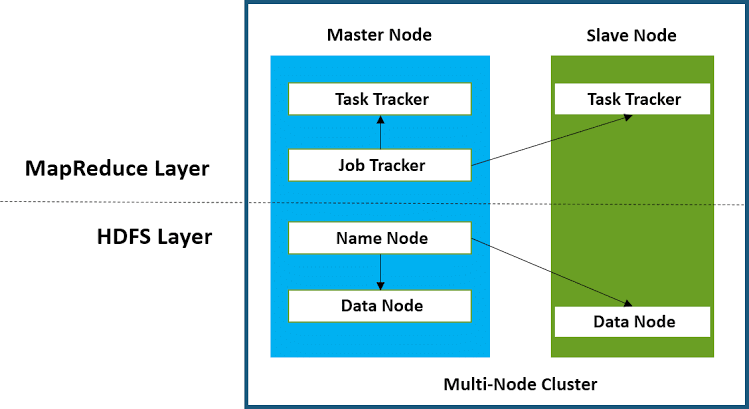

Hadoop: Distributed Data Processing

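Central to Hadoop’s capabilities is its distributed file system, the Hadoop Distributed File System (HDFS). Files are split into large blocks (128 MB by default in Hadoop 2.x and later), and each block is replicated across multiple nodes in the cluster (three copies by default) so that data survives the failure of a node. Because blocks are spread across many machines, Hadoop can schedule map tasks on the nodes that already hold the data, which enables parallel processing and efficient access even on very large datasets.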
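The storage cost of block replication comes down to simple arithmetic. The sketch below assumes the stock defaults from `hdfs-default.xml` (a `dfs.blocksize` of 128 MB and a `dfs.replication` of 3); many clusters override both, and `hdfs_footprint` is a hypothetical helper, not part of Hadoop.

```python
import math

# Assumed defaults from hdfs-default.xml; many clusters override them.
DEFAULT_BLOCK_SIZE_MB = 128  # dfs.blocksize
DEFAULT_REPLICATION = 3      # dfs.replication


def hdfs_footprint(file_size_mb, block_size_mb=DEFAULT_BLOCK_SIZE_MB,
                   replication=DEFAULT_REPLICATION):
    """Return (blocks, block replicas, raw MB of disk) for one file stored in HDFS."""
    blocks = math.ceil(file_size_mb / block_size_mb)
    replicas = blocks * replication
    # A final, partially filled block only uses as much disk as the data it holds,
    # so raw usage is file size x replication rather than blocks x block size.
    raw_mb = file_size_mb * replication
    return blocks, replicas, raw_mb


if __name__ == "__main__":
    print(hdfs_footprint(1000))
```

For example, a 1,000 MB file becomes 8 blocks (seven full blocks and one partial block), stored as 24 block replicas that use about 3,000 MB of raw disk, and any single node can fail without a block being lost.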

Setting up a Hadoop Cluster: Behind the Scenes

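The process of setting up a Hadoop cluster involves several steps, including editing the core configuration files (such as core-site.xml, hdfs-site.xml, and yarn-site.xml), formatting the file system, and starting the essential services. Here’s a glimpse of what the setup entails: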

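```bash
# Format the HDFS NameNode (once, on a fresh install: this erases existing HDFS metadata)
hdfs namenode -format

# Start HDFS and YARN (the scripts live in $HADOOP_HOME/sbin)
start-dfs.sh
start-yarn.sh
```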
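Configuring the core files usually means editing XML files such as `core-site.xml` and `hdfs-site.xml` under `$HADOOP_HOME/etc/hadoop/`, each a `<configuration>` element holding `<property>` name/value pairs. As a sketch, the Python below generates the two properties used in Apache's single-node (pseudo-distributed) setup guide. The values (`hdfs://localhost:9000` and a replication factor of 1) suit a one-machine test install, not a production cluster, and `hadoop_config` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def hadoop_config(properties):
    """Build a Hadoop-style <configuration> document from a {name: value} dict."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    return ET.tostring(root, encoding="unicode")


# Values from the single-node (pseudo-distributed) guide; one-machine test setups only.
CORE_SITE = {"fs.defaultFS": "hdfs://localhost:9000"}  # where clients find the NameNode
HDFS_SITE = {"dfs.replication": 1}                     # one copy: there is only one DataNode


if __name__ == "__main__":
    # Print the contents; save them as etc/hadoop/core-site.xml and etc/hadoop/hdfs-site.xml
    print(hadoop_config(CORE_SITE))
    print(hadoop_config(HDFS_SITE))
```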

Spark: In-Memory Data Processing

While Hadoop marked a significant leap forward, it has limitations. MapReduce writes intermediate results to disk between the map and reduce phases, and a multi-step pipeline must write each job’s output to HDFS before the next job can read it back. For iterative algorithms that pass over the same data many times, this repeated disk I/O becomes a performance bottleneck. Enter Apache Spark, a framework designed to address these challenges and unlock new horizons in data processing.

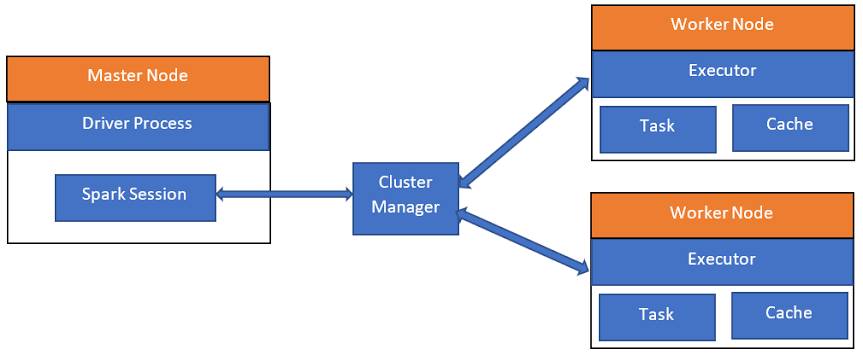

Resilient Distributed Datasets (RDDs): Igniting Spark’s Fire

At the core of Spark’s architecture is the Resilient Distributed Dataset (RDD): an immutable collection of records partitioned across the nodes of a cluster. Rather than replicating data for fault tolerance, Spark records the lineage of transformations that produced each RDD, so a lost partition can be recomputed from its source. Spark can also keep RDDs in memory between operations, significantly reducing the need to read from and write to disk.
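Lineage-based fault tolerance can be illustrated with a toy class. `ToyRDD` below is hypothetical and runs on one machine: transformations such as map and filter only record what to do, and an action such as collect replays the recorded chain from the source. Real Spark tracks lineage per partition, so it recomputes only the partitions that were lost, and it adds in-memory persistence through `cache()` and `persist()`, which this toy omits.

```python
class ToyRDD:
    """A toy, single-machine stand-in for an RDD (not Spark's implementation).

    Transformations are lazy: they only record a function and a parent,
    forming a lineage chain. Data is produced when an action runs, so any
    result can be rebuilt by replaying the lineage from the source.
    """

    def __init__(self, compute, parent=None, name="source"):
        self._compute = compute  # function: parent's records -> this dataset's records
        self._parent = parent
        self.name = name

    @classmethod
    def from_list(cls, data):
        return cls(lambda _: list(data))

    def map(self, f):
        return ToyRDD(lambda rows: [f(r) for r in rows], parent=self, name="map")

    def filter(self, pred):
        return ToyRDD(lambda rows: [r for r in rows if pred(r)], parent=self, name="filter")

    def lineage(self):
        """Names of the steps needed to (re)compute this dataset, oldest first."""
        steps, node = [], self
        while node is not None:
            steps.append(node.name)
            node = node._parent
        return list(reversed(steps))

    def collect(self):
        """Action: evaluate the whole lineage and return the records."""
        parent_rows = self._parent.collect() if self._parent is not None else None
        return self._compute(parent_rows)
```

For instance, `ToyRDD.from_list(range(10)).map(lambda x: x * x).filter(lambda x: x % 2 == 0)` returns immediately without computing anything; only `collect()` walks the lineage (`source`, `map`, `filter`) and produces `[0, 4, 16, 36, 64]`.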

Let’s illustrate this with a sentiment analysis task. Imagine you have a vast collection of social media posts and want to gauge the sentiment of each post using a pre-trained model. Spark spreads the scoring across the cluster’s workers, and keeping data in memory becomes valuable as soon as the results are reused in further steps:

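```python
from pyspark import SparkContext


def analyze_sentiment(post):
    """Hypothetical placeholder for the pre-trained model.

    Returns "positive", "negative" or "neutral". A trivial keyword rule stands in
    here so the example runs; replace it with real model inference.
    """
    text = post.lower()
    if any(word in text for word in ("love", "great", "happy")):
        return "positive"
    if any(word in text for word in ("hate", "awful", "sad")):
        return "negative"
    return "neutral"


# Initialize SparkContext in local mode, using all available cores
sc = SparkContext("local[*]", "SentimentAnalysisApp")

# Load social media data, one post per line
posts_rdd = sc.textFile("social_media_posts.txt")

# Perform sentiment analysis on each post (partitions are processed in parallel)
sentiment_rdd = posts_rdd.map(analyze_sentiment)

# Count positive, negative, and neutral sentiments; the counts are returned to the driver
sentiment_counts = sentiment_rdd.countByValue()

# Display results
for sentiment, count in sentiment_counts.items():
    print(f"Sentiment: {sentiment}, Count: {count}")

# Stop SparkContext
sc.stop()
```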

Comparing Hadoop and Spark

While Hadoop and Spark are both stalwarts in the realm of big data processing, they cater to distinct use cases and exhibit unique strengths.

Hadoop’s MapReduce: The Batch Processing Workhorse

Hadoop’s MapReduce paradigm shines in scenarios where massive amounts of data need to be processed in a batch-oriented fashion. It excels at tasks like log analysis, ETL (Extract, Transform, Load) operations, and generating reports from historical data. The key to Hadoop’s power lies in its ability to distribute and parallelize computations, enabling the processing of vast datasets efficiently.

Spark’s In-Memory Magic: Real-time and Iterative Processing

Spark, on the other hand, offers a paradigm shift by introducing in-memory data processing. An RDD that will be reused can be kept in memory with cache() or persist(), which makes a large difference for iterative algorithms such as machine learning and graph processing: the data is read from disk once and reused on every pass, instead of being written to and read back from disk between each job in a chain of MapReduce jobs. This can lead to significantly improved performance.
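The effect of reuse on iterative work can be illustrated without a cluster. The sketch below (all names hypothetical) runs the same gradient-descent loop twice: once re-reading its input file on every iteration, as a chain of MapReduce jobs would, and once loading the data a single time and keeping it in memory, as a cached RDD would. It only counts disk reads; real speedups depend on data size, the cluster, and whether the data fits in memory.

```python
import os
import tempfile


class CountingStore:
    """A dataset saved to a temporary file that counts how often it is read from disk."""

    def __init__(self, values):
        fd, self.path = tempfile.mkstemp(suffix=".txt")
        with os.fdopen(fd, "w") as f:
            f.write("\n".join(str(v) for v in values))
        self.reads = 0

    def load(self):
        self.reads += 1
        with open(self.path) as f:
            return [float(line) for line in f if line.strip()]

    def close(self):
        os.remove(self.path)


def fit_mean(get_data, iterations=30, lr=0.5):
    """Gradient descent on 0.5 * mean((x - m)**2); m converges to the mean of the data."""
    m = 0.0
    for _ in range(iterations):
        data = get_data()
        gradient = sum(m - x for x in data) / len(data)
        m -= lr * gradient
    return m


def reread_every_iteration(store, iterations=30):
    # MapReduce-style: each iteration is a separate job that reads its input from disk.
    return fit_mean(store.load, iterations)


def load_once_keep_in_memory(store, iterations=30):
    # Spark-style: read once, keep the records in memory, reuse them in every iteration.
    data = store.load()
    return fit_mean(lambda: data, iterations)


if __name__ == "__main__":
    store = CountingStore([1, 2, 3, 4, 5, 6])
    print(reread_every_iteration(store), "after", store.reads, "reads")
    store.reads = 0
    print(load_once_keep_in_memory(store), "after", store.reads, "read")
    store.close()
```

Both variants converge to the same estimate; the difference is that the first touches the disk 30 times and the second only once.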

Real-World Applications of Big Data Processing

The impact of big data processing is felt across diverse industries, reshaping how organizations operate, innovate, and make informed decisions.

E-Commerce: Retail giants harness big data processing to gain insights into consumer behavior, predict trends, and enhance personalized shopping experiences. Analyzing clickstream data, purchase histories, and user interactions enables businesses to tailor recommendations and marketing strategies effectively.

Healthcare: In the healthcare sector, big data processing plays a pivotal role in disease prediction, diagnosis, and treatment. By analyzing patient records, medical imaging data, and genomic sequences, healthcare providers can identify patterns, optimize treatment plans, and even predict potential disease outbreaks.

Finance: The financial industry relies heavily on big data processing to manage risk, detect fraud, and optimize investment strategies. Real-time analysis of market data, coupled with historical trends, empowers traders and analysts to make informed decisions and capitalize on opportunities.

Conclusion

The digital age has brought an unprecedented abundance of data. Apache Hadoop and Apache Spark stand as pillars of modern data processing, addressing the challenges posed by massive datasets and unlocking new dimensions of analysis and insight.

By understanding the nuances of these technologies, individuals and organizations can extract meaningful patterns, uncover hidden correlations, and make data-driven decisions that drive innovation and foster growth. Whether it’s harnessing the parallel processing prowess of Hadoop or embracing the in-memory magic of Spark, the tools and principles of big data processing are indispensable in navigating the complex and exciting landscape of data-driven exploration.
