Modern cloud-native applications are usually designed as a set of microservices, which makes communication between those services, and exposing them to external clients, a critical concern. Two technologies have emerged to address it: Service Mesh and API Gateway.

Although they have some similarities, they are quite different tools that target different communication problems in a distributed software system. So what’s the difference, when should you use each, and how can you use them together? DevOps teams need clear answers to these questions to successfully manage and operate microservices-based applications.

Read this article by Talent500 experts to understand how the two work, their technical details, and their value from a DevOps perspective. Let’s begin:

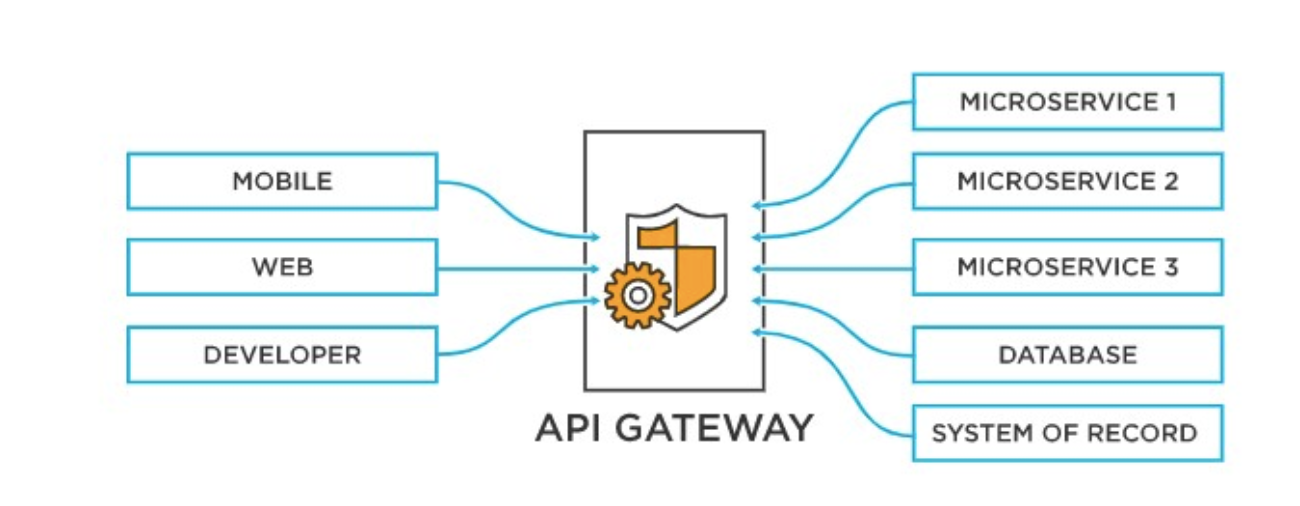

API Gateway


An API Gateway is a reverse proxy that serves as the single entry point for all external clients (e.g. mobile apps, web applications) that need to access the services in your microservices architecture. Before API Gateways, Enterprise Service Buses (ESBs) were the de facto standard for handling communication between services in service-oriented architectures. The gateway is central to managing and securing how your APIs are exposed to external clients. Its main responsibilities are:

Request Routing

Request routing is the process of selecting the right destination service for a given client request. The API Gateway routes each request to the appropriate backend service according to predefined routing rules, which may be based on URL paths, HTTP methods, headers, other request attributes, or even custom logic. This routing decouples clients from the backend: they don’t need to know anything about the actual services they’re consuming.
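To make the idea concrete, here is a minimal Python sketch of routing by path prefix and HTTP method. The route table, service names, and backend URLs are hypothetical; real gateways load such rules from their own configuration format.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Route:
    prefix: str         # URL path prefix to match, e.g. "/orders"
    methods: frozenset  # HTTP methods this route accepts
    backend: str        # hypothetical upstream address

# Hypothetical routing table.
ROUTES = [
    Route("/orders", frozenset({"GET", "POST"}), "http://orders-svc:8080"),
    Route("/users", frozenset({"GET"}), "http://users-svc:8080"),
    Route("/", frozenset({"GET"}), "http://frontend-svc:8080"),
]

def route(method: str, path: str, routes=ROUTES) -> Optional[str]:
    """Return the backend of the longest matching prefix, or None if nothing matches."""
    candidates = [
        r for r in routes
        # Match the exact prefix or a sub-path, so "/orders" does not match "/ordersX".
        if (path == r.prefix or path.startswith(r.prefix.rstrip("/") + "/"))
        and method in r.methods
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda r: len(r.prefix)).backend
```

Longest-prefix matching lets a catch-all `/` route coexist with more specific ones. In this sketch, a method that a specific route does not accept can fall through to a broader route that does; real gateways usually let you reject such requests with 404 or 405 instead.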

Authentication and Authorization

Authentication and authorization are among the most important policies an API Gateway enforces before client requests reach the backend services. The gateway should support validation of API keys, JSON Web Tokens (JWT), OAuth credentials, or other authentication mechanisms. It may also apply role-based access control (RBAC) policies to ensure that only authorized clients can access a particular service or resource.
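As an illustration, the sketch below verifies an HS256-signed JWT using only the Python standard library and then applies a simple RBAC check. The `role` claim, the permission table, and the helper names are assumptions made for this example; production gateways more often validate asymmetric signatures (e.g. RS256) against keys published by an identity provider, using a vetted JWT library.

```python
import base64
import hashlib
import hmac
import json
import time

def _b64url_encode(data: bytes) -> str:
    # JWT segments are base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()

def _b64url_decode(segment: str) -> bytes:
    # Restore the padding stripped during encoding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))

def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create an HS256 token (used here only to produce example input)."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(sig)}"

def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the token's claims, or raise ValueError if it must be rejected."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # e.g. refuse "alg": "none"
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if "exp" in claims and claims["exp"] < time.time():
        raise ValueError("token expired")
    return claims

# Hypothetical RBAC table: role -> set of (HTTP method, path prefix) it may use.
PERMISSIONS = {
    "admin": {("GET", "/orders"), ("DELETE", "/orders")},
    "customer": {("GET", "/orders")},
}

def authorize(claims: dict, method: str, path: str) -> bool:
    """Allow the request only if the caller's role grants this method on this path."""
    grants = PERMISSIONS.get(claims.get("role"), set())
    return any(method == m and path.startswith(prefix) for m, prefix in grants)
```

Authentication (`verify_hs256`) answers "who is calling?", while authorization (`authorize`) answers "may this caller do this?"; keeping them separate mirrors how gateways usually configure the two policies.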

Traffic Control

Backend services must not be overwhelmed by excessive client requests. An API Gateway should be able to apply rate limiting, throttling, and circuit breaking to control traffic and prevent outages or service degradation under high load.
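Rate limiting is commonly implemented with a token bucket. The following is a minimal, single-process Python sketch, assuming one bucket per client ID; the class names are illustrative, and a gateway running several replicas would need shared state to enforce a global limit.

```python
import time

class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # a gateway would typically answer HTTP 429 Too Many Requests

class RateLimiter:
    """Keeps an independent bucket for each client (e.g. per API key)."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, client_id: str) -> bool:
        bucket = self.buckets.get(client_id)
        if bucket is None:
            bucket = self.buckets[client_id] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()
```

The injectable `clock` makes the limiter easy to test with simulated time; the bucket's `capacity` sets the allowed burst while `rate` sets the sustained throughput.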

Protocol Translation

Clients and backend services often use different communication protocols (e.g. HTTP, gRPC, WebSockets). An API Gateway can act as a translator that converts requests and responses between protocols, which allows for a more flexible and diverse technology stack.

Monitoring and Analytics

API Gateways usually come with out-of-the-box monitoring and analytics that give DevOps teams valuable insights into API usage, performance (e.g. latency, error rates), and existing issues or bottlenecks.
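Latency and error-rate figures like these are aggregates over request records. This hypothetical Python sketch computes, per route, the request count, the share of 5xx responses, and the nearest-rank p95 latency; real gateways usually export raw metrics to a monitoring system that performs this aggregation.

```python
import math
from dataclasses import dataclass

@dataclass
class RequestRecord:
    route: str
    status: int
    latency_ms: float

def percentile(values, p):
    """Nearest-rank percentile (p between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(records):
    """Per-route request count, 5xx error rate, and p95 latency."""
    by_route = {}
    for r in records:
        by_route.setdefault(r.route, []).append(r)
    summary = {}
    for route, recs in by_route.items():
        errors = sum(1 for r in recs if r.status >= 500)  # count server-side failures
        summary[route] = {
            "count": len(recs),
            "error_rate": errors / len(recs),
            "p95_ms": percentile([r.latency_ms for r in recs], 95),
        }
    return summary
```

Percentiles such as p95 are generally more informative than averages for latency, because a small number of slow requests can hide behind a healthy-looking mean.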

Caching

Frequently accessed resources, especially those whose data changes rarely, can benefit significantly from caching. API Gateways can cache responses and apply different strategies to decide when a cached response may be served. This reduces the load on backend services and gives clients faster responses.
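A time-to-live (TTL) cache is one of the simplest caching strategies. The sketch below assumes that only `GET` responses are safe to cache; the names `TTLCache` and `cached_fetch` are illustrative, not any particular gateway's API.

```python
import time

class TTLCache:
    """Response cache in which each entry expires `ttl` seconds after it is stored."""

    def __init__(self, ttl: float, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # stale: evict and report a miss
            return None
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock() + self.ttl, value)

def cached_fetch(cache: TTLCache, method: str, path: str, fetch):
    """Serve GETs from the cache when possible; other methods always reach the backend."""
    if method != "GET":
        return fetch(method, path)
    key = (method, path)
    hit = cache.get(key)
    if hit is not None:
        return hit
    response = fetch(method, path)
    cache.put(key, response)
    return response
```

The TTL is the strategy knob here: a longer TTL offloads more traffic from the backend but lets clients see older data, which is why rarely changing resources are the best candidates.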

Transformation and Aggregation

Sometimes a single client request needs data from multiple backend services. An API Gateway can handle this by calling the different services and aggregating their responses into a single response for the client. This simplifies the client-side implementation and reduces the effort needed to build custom orchestration logic.
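Aggregation works best when the backends are called concurrently. The sketch below uses `asyncio.gather` to fan out to three hypothetical services and merge the results, degrading gracefully when a non-essential service fails. The service functions only simulate network calls.

```python
import asyncio

# Hypothetical backend calls; a real gateway would make HTTP or gRPC requests here.
async def fetch_user(user_id: int) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"id": user_id, "name": "Alice"}

async def fetch_orders(user_id: int) -> list:
    await asyncio.sleep(0.01)
    return [{"order_id": 1, "total": 30}, {"order_id": 2, "total": 12}]

async def fetch_recommendations(user_id: int) -> list:
    await asyncio.sleep(0.01)
    raise ConnectionError("recommendation service unavailable")  # simulated outage

async def user_dashboard(user_id: int) -> dict:
    """Query three services concurrently and merge their results into one response."""
    user, orders, recs = await asyncio.gather(
        fetch_user(user_id),
        fetch_orders(user_id),
        fetch_recommendations(user_id),
        return_exceptions=True,  # collect failures instead of aborting on the first one
    )
    if isinstance(user, Exception):
        raise user  # the profile is essential, so its failure fails the request
    orders = [] if isinstance(orders, Exception) else orders
    recs = [] if isinstance(recs, Exception) else recs  # optional data: degrade gracefully
    return {
        "user": user,
        "orders": orders,
        "order_total": sum(o["total"] for o in orders),
        "recommendations": recs,
    }
```

Calling `asyncio.run(user_dashboard(42))` returns one combined payload even though the recommendation call failed, so the client makes a single request instead of three.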

In short, the API Gateway is the gatekeeper that exposes your microservices to external clients, providing security, traffic management, observability, and a uniform interface for the consumers of your APIs.

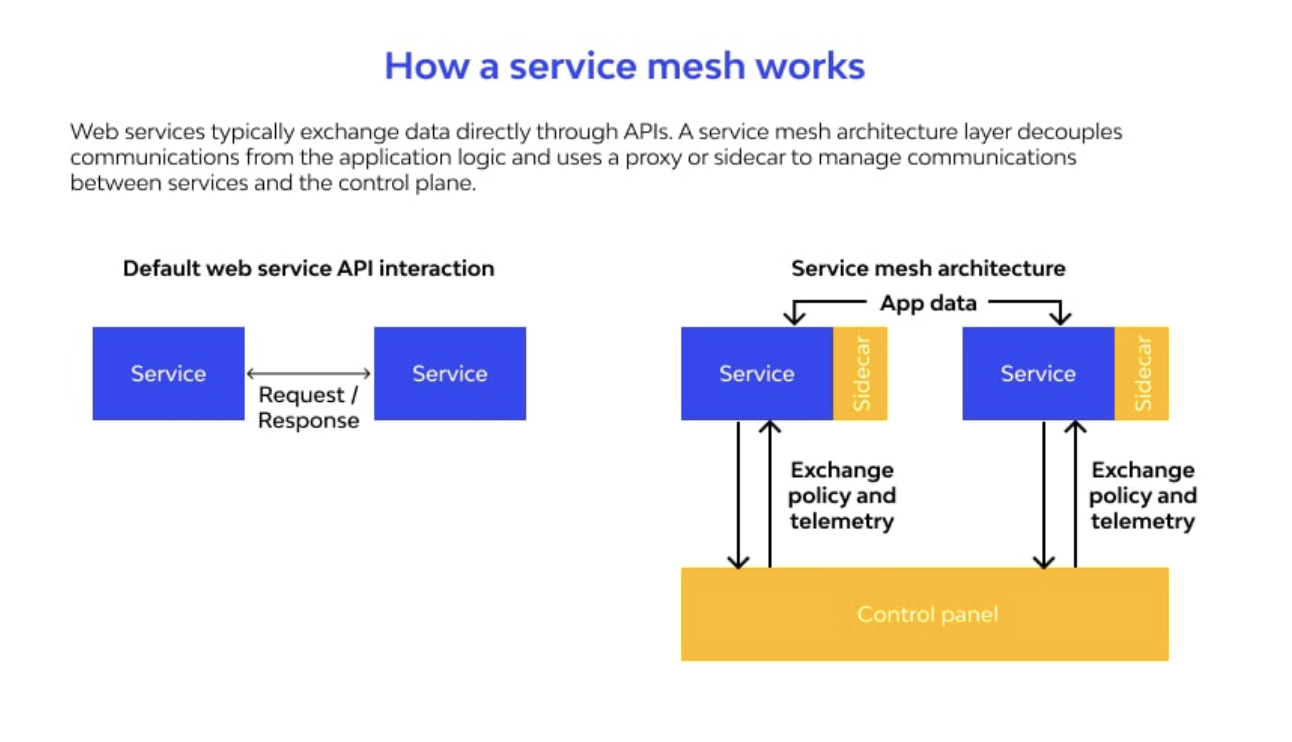

Service Mesh Control Plane


While the API Gateway handles client-to-service communication, a Service Mesh is an infrastructure layer that manages service-to-service communication within your microservices architecture. It addresses the problems inherent in communication between distributed services, providing secure, observable, and reliable inter-service communication with dynamic service discovery.

A Service Mesh is usually implemented as a set of lightweight proxies (sidecars) deployed alongside each microservice instance; the sidecars intercept and handle the traffic between services. The service mesh control plane manages the configuration of these sidecar proxies, including load balancing, circuit breaking, timeouts, authentication, authorization, and deployment strategies.

Service Discovery

In a microservices environment, services are created, removed, and scaled up and down dynamically. A Service Mesh provides automatic service discovery, which allows services to locate and communicate with each other without knowing the actual network addresses of the services they consume. The mesh’s data plane (the sidecar proxies) handles the actual data transfer between services, enforces security policies, and manages service-to-service communication.
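Conceptually, service discovery boils down to a registry that maps a logical service name to its current instances. This in-memory Python sketch is hypothetical; in practice the mesh control plane usually learns endpoints from the platform (such as Kubernetes) and pushes them to the proxies.

```python
import threading

class ServiceRegistry:
    """Maps logical service names to the addresses of their live instances."""

    def __init__(self):
        self._lock = threading.Lock()  # instances may (de)register concurrently
        self._instances = {}  # service name -> set of "host:port"

    def register(self, service: str, address: str) -> None:
        with self._lock:
            self._instances.setdefault(service, set()).add(address)

    def deregister(self, service: str, address: str) -> None:
        with self._lock:
            self._instances.get(service, set()).discard(address)

    def resolve(self, service: str) -> list:
        """Return a sorted snapshot of the current addresses for `service`."""
        with self._lock:
            return sorted(self._instances.get(service, set()))
```

Callers only ever use the name (`"payments"`), so instances can come and go as the service scales without any change to the calling code.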

Load Balancing

A Service Mesh can distribute incoming traffic across multiple instances of a microservice to achieve high availability and fault isolation. It may also apply advanced load-balancing algorithms based on metrics such as response times or resource utilization to improve performance. How the mesh is configured also affects resource consumption in the cluster, because every sidecar proxy uses CPU and memory; careful configuration can reduce that overhead.
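Here is a sketch of two load-balancing strategies: plain round robin, and picking the instance with the lowest exponentially weighted moving average (EWMA) of observed latency. The class names and the `alpha` smoothing value are assumptions for illustration, not a specific mesh’s implementation.

```python
import itertools

class RoundRobin:
    """Cycle through instances in a fixed order."""

    def __init__(self, instances):
        self._cycle = itertools.cycle(instances)

    def pick(self):
        return next(self._cycle)

class LeastLatency:
    """Pick the instance with the lowest EWMA latency; untried instances go first."""

    def __init__(self, instances, alpha: float = 0.3):
        self.alpha = alpha  # weight given to the newest observation
        self.ewma = {i: None for i in instances}  # None = no observation yet

    def pick(self):
        # (False, ...) sorts before (True, ...), so unobserved instances are probed first.
        return min(self.ewma, key=lambda i: (self.ewma[i] is not None, self.ewma[i] or 0.0))

    def observe(self, instance, latency_ms: float) -> None:
        old = self.ewma[instance]
        self.ewma[instance] = (
            latency_ms if old is None else self.alpha * latency_ms + (1 - self.alpha) * old
        )
```

Round robin spreads load evenly but ignores instance health; the latency-aware variant steers traffic away from an instance as soon as its responses slow down, while the EWMA smooths out one-off spikes.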

Encryption and Authentication

Securing microservices communication is a vital feature, especially when services are running on different hosts or clusters. Service Mesh can provide secure communication by enabling mutual Transport Layer Security (mTLS) and service-to-service authentication and authorization.

Observability

One of the biggest advantages of a Service Mesh is detailed insight into service-to-service communication patterns. It can provide metrics, logs, and distributed tracing information, allowing DevOps teams to monitor the health and performance of individual services and to spot potential issues or bottlenecks.
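Distributed tracing works by propagating a trace context with every request. The sketch below follows the W3C Trace Context `traceparent` header format (`version-traceid-parentid-flags`): each hop keeps the trace ID and creates a new span ID. The function name is hypothetical, and in many meshes the application itself must copy these headers from inbound to outbound requests for the traces to connect.

```python
import re
import secrets

# version "00", 32-hex trace id, 16-hex parent (span) id, 2-hex flags
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")

def child_traceparent(incoming=None):
    """Return (traceparent for the outgoing call, parent span id or None)."""
    m = TRACEPARENT_RE.match(incoming or "")
    if m and m.group(1) != "0" * 32 and m.group(2) != "0" * 16:  # all-zero ids are invalid
        trace_id, parent_id, flags = m.groups()
    else:
        # No valid context: start a new trace, marked as sampled ("01").
        trace_id, parent_id, flags = secrets.token_hex(16), None, "01"
    span_id = secrets.token_hex(8)  # new span for this hop
    return f"00-{trace_id}-{span_id}-{flags}", parent_id
```

Because every hop shares the same trace ID and records its parent span, a tracing backend can reassemble the full call tree of a request as it crosses services.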

Traffic Management

Like an API Gateway, a Service Mesh can apply circuit breaking, retries, and timeouts to improve the resilience and reliability of service-to-service communication. It may also support advanced traffic management such as canary releases, blue/green deployments, and traffic mirroring for testing and experimentation.
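Canary releases rely on weighted traffic splitting. A minimal sketch, assuming the split is keyed on a request or user ID: hashing the ID gives each caller a stable bucket from 0 to 99, so roughly `canary_percent` percent of callers consistently land on the new version. The version labels are hypothetical; meshes typically express the same idea declaratively as route weights.

```python
import hashlib

def pick_version(request_id: str, canary_percent: int) -> str:
    """Deterministically route about `canary_percent`% of IDs to the canary version."""
    digest = hashlib.sha256(request_id.encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return "v2-canary" if bucket < canary_percent else "v1-stable"
```

Hashing instead of picking randomly keeps each user on one version across requests, which makes canary metrics easier to interpret; raising `canary_percent` step by step gradually shifts traffic to the new release.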

Policy Enforcement

Service Mesh provides a centralized control plane for defining and enforcing policies related to security, traffic management and other aspects of your microservices. Those policies can be applied uniformly across all services, which simplifies management and ensures adherence to organizational standards.
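To illustrate centralized policy, the sketch below defines once which caller identities may reach each service and denies everything else by default. The identities use a SPIFFE-like format purely as an assumption; in a real mesh, the caller’s identity would come from its mTLS certificate, and every sidecar would evaluate the same policy.

```python
from fnmatch import fnmatchcase

# Hypothetical mesh-wide policy: destination service -> caller identities allowed to reach it.
POLICY = {
    "payments": {"spiffe://example.org/ns/shop/sa/checkout"},
    "inventory": {"spiffe://example.org/ns/shop/sa/*"},  # any service account in "shop"
}

def is_allowed(source_identity: str, destination: str, policy=POLICY) -> bool:
    """Deny by default; allow only callers matching an entry for the destination."""
    return any(fnmatchcase(source_identity, pattern) for pattern in policy.get(destination, ()))
```

Defining the rules in one place and evaluating them at every hop is what lets a mesh apply the same standard uniformly, instead of each service reimplementing its own access checks.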

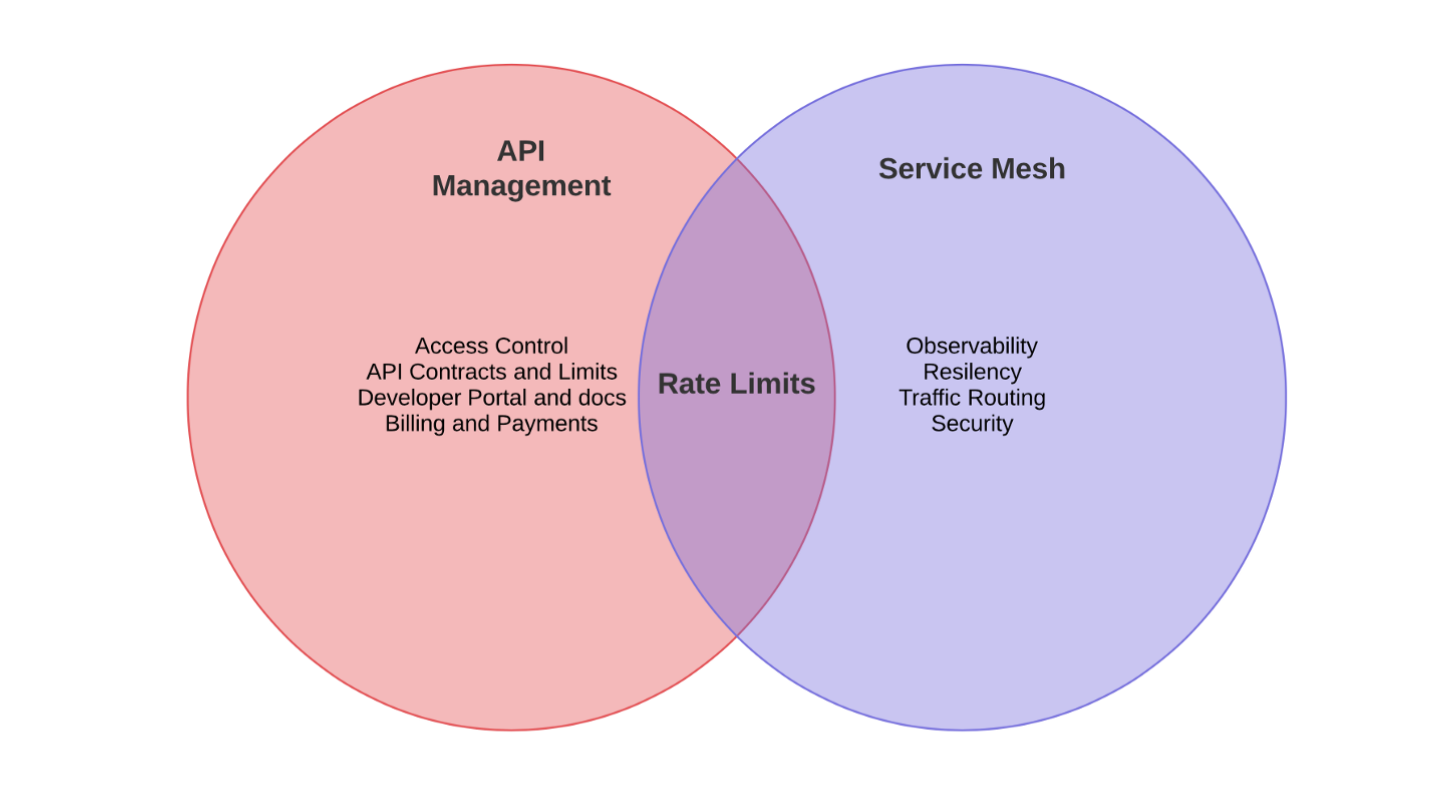

Using API Gateway and Service Mesh Together for Traffic Management

In modern, enterprise-level microservices architectures it is common, and often advisable, to use both an API Gateway and a Service Mesh. Together they form a combined solution for managing external and internal communication that uses the strengths of each technology and ensures end-to-end security, observability, and traffic management across your whole application ecosystem.

Service mesh implementations vary (some, for example, replace sidecars with a sidecarless, eBPF-based data plane), but the division of labor stays the same. The API Gateway acts as the entry point for the external clients that consume your microservices: it handles authentication, manages traffic, and routes requests to the appropriate microservices. The Service Mesh manages internal communication between microservices, ensuring secure, observable, and reliable service-to-service interaction.

The API Gateway forwards requests into the Service Mesh, which delivers them to the appropriate microservices, so the gateway effectively mediates between external clients and the service-to-service communication layer. This brings several advantages:

End-to-End Security: The API Gateway enforces security policies and authentication for external clients, while the Service Mesh secures service-to-service communication with mTLS and service-level authorization.

Comprehensive Observability: Combining the observability features of the API Gateway and the Service Mesh gives you complete insight into the entire application ecosystem, from external client traffic to service-to-service communication patterns.

Traffic Management and Resilience: The API Gateway takes care of traffic and resilience for external client requests. Meanwhile, the Service Mesh ensures reliable communication between internal services.

Separation of Concerns: By splitting external and internal communication, we achieve a more modular and maintainable architecture. This makes microservice development, deployment, and management simpler.

Scalability and Performance: The API Gateway can also improve the application’s scalability and performance through caching, load balancing, and other techniques.

Wrap Up


From a DevOps perspective, combining an API Gateway with a Service Mesh simplifies the deployment, management, and monitoring of microservices. This approach enables faster delivery, improved reliability, and better observability. DevOps teams benefit from centralized control, policy enforcement, and consistent implementation of security and traffic management. It also fosters collaboration between development and operations by providing shared visibility into the application lifecycle. The modular setup allows for independent upgrades or replacements of components without disrupting the entire system.

Experts at Talent500 find that using the two in combination helps DevOps teams achieve these benefits across the entire application ecosystem.

Looking for a high TC job? Stop searching and start applying on Talent500 now!
