Introduction

Apache Spark is written in Scala. PySpark is the open-source Python API for Apache Spark. It can efficiently handle very large datasets, which makes it particularly useful for big data processing.

This tutorial aims to provide an overview of how to get started with PySpark in 2024.

PySpark has a gentle learning curve if you have a basic understanding of Python and its libraries, such as Pandas. By the end of this guide, you will be able to write your first lines of code in PySpark.

What is PySpark?

PySpark is the Python interface to Spark, a distributed computing framework built on RDDs (resilient distributed datasets). It divides the workload of a task among multiple processors, which execute their shares of the application in parallel.

PySpark works with clusters, where multiple computers act as nodes, each handling a small portion of the dataset. Because the nodes work in parallel, large datasets can be loaded and processed much faster than on a single machine.

PySpark is a popular choice for big data processing due to its scalability and its ability to handle terabytes of data efficiently, which would be impractical on a single standard machine.
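To make the idea of nodes each handling a portion of the data concrete, here is a minimal plain-Python sketch. It is not Spark code: the worker threads merely stand in for cluster nodes, and the helper names (`split_into_partitions`, `process_partition`, `distributed_sum_of_squares`) are invented for this illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(records, num_partitions):
    """Split a list into roughly equal, contiguous partitions."""
    size, remainder = divmod(len(records), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `remainder` partitions take one extra record.
        end = start + size + (1 if i < remainder else 0)
        partitions.append(records[start:end])
        start = end
    return partitions


def process_partition(partition):
    """Work done by one 'node': sum the squares of its own records."""
    return sum(x * x for x in partition)


def distributed_sum_of_squares(records, num_partitions=4):
    partitions = split_into_partitions(records, num_partitions)
    # Each worker thread stands in for a node handling one partition.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_results = list(pool.map(process_partition, partitions))
    # The "driver" combines the partial results into the final answer.
    return sum(partial_results)


total = distributed_sum_of_squares(list(range(1, 11)))
print(total)  # 385
```

The shape is the same one Spark applies at much larger scale: split the data, let each worker process its own slice, then combine the partial results.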

Features of PySpark

Some of the powerful features of PySpark are as follows:

1. Spark Architecture

The Apache Spark architecture follows a master-worker (master-slave) model that is built on the following three components:

- A driver program that runs on the master node and coordinates the work of the cluster.

- RDDs, distributed collections of data that are partitioned across the worker nodes (slave nodes).

- A processing engine that executes tasks on those partitions.

The Spark architecture is able to execute big data tasks without compromising on performance through in-memory computation. It keeps intermediate data in memory and processes large datasets in parallel across multiple nodes.

Spark provides built-in components to speed up tasks like data streaming, building machine learning workflows, and running data queries using SQL.

2. Distributed Computing Technology

As previously discussed, PySpark uses distributed computing: work is split across many nodes and executed in parallel, which is what makes it fast on large datasets.

RDD, or Resilient Distributed Dataset, is the fundamental data structure in Spark. It supports a wide range of object types and user-defined classes.

RDDs are immutable distributed collections of objects that are partitioned and allocated to different nodes in a Spark cluster. An RDD can be created either from a collection in the driver program or from a dataset in external storage, such as a shared file system.

3. Faster Data Processing

PySpark provides significantly faster data processing than alternatives such as Hadoop MapReduce, and it can outperform Pandas when loading datasets that are too large for a single machine.

While Apache Spark is a part of the Hadoop ecosystem, it is often preferred over Hadoop MapReduce because of its near-real-time data processing capabilities, whereas MapReduce is better suited to batch processing.

4. Data Recovery

RDDs, as the word "resilient" implies, are fault tolerant. This allows PySpark to recover data in the event of runtime failures.

PySpark partitions data across multiple nodes, so when a node fails only the partitions it held are lost, and Spark can rebuild them by re-running the transformations that produced them. If a task fails or gets interrupted in the middle, PySpark automatically retries it.

Furthermore, PySpark supports checkpointing, which saves RDDs to reliable storage. Streaming applications can save such checkpoints at regular intervals.
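Spark's recovery of lost RDD partitions is usually described in terms of lineage: the record of transformations that produced the data. The following plain-Python sketch illustrates that idea only. `LineageDataset` and its methods are hypothetical names made up for this example and are not part of the PySpark API.

```python
class LineageDataset:
    """Toy dataset that remembers its source and the steps applied to it."""

    def __init__(self, source_partitions, steps=()):
        self._source = source_partitions  # stands in for durable input data
        self._steps = tuple(steps)        # the lineage: functions applied in order
        self._cache = {}                  # computed partitions held "in memory"

    def map(self, fn):
        # A transformation never modifies the dataset. It returns a new
        # dataset whose lineage has one more step.
        return LineageDataset(self._source, self._steps + (fn,))

    def compute_partition(self, index):
        if index not in self._cache:
            rows = self._source[index]
            for fn in self._steps:
                rows = [fn(row) for row in rows]
            self._cache[index] = rows
        return self._cache[index]

    def lose_partition(self, index):
        """Simulate a node failure that wipes one computed partition."""
        self._cache.pop(index, None)


source = [[1, 2], [3, 4], [5, 6]]
dataset = LineageDataset(source).map(lambda x: x * 2).map(lambda x: x + 1)

before = dataset.compute_partition(1)  # [7, 9]
dataset.lose_partition(1)              # the "node" holding partition 1 fails
after = dataset.compute_partition(1)   # rebuilt from the source and the lineage
print(before == after)                 # True
```

Only the lost partition is recomputed. The other partitions and the original source data are left untouched, which is why recovery can be quick.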

5. Real-time Data Handling

One of PySpark's most powerful features is its real-time data handling, which allows it to process data as soon as it arrives on the Apache Spark platform and to produce results as events happen.

PySpark uses a micro-batching system in which small chunks of data are processed in a continuous stream of batches.

Hadoop, on the other hand, employs batch processing, in which large amounts of data are collected and processed together. This can be inefficient when results are needed as the data arrives.
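The micro-batching idea can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions, not Spark's implementation: batches here are cut by record count, whereas Spark's streaming engine typically cuts them by time interval. The names `micro_batches` and `count_errors` are invented for the example.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield consecutive lists of up to `batch_size` records from a stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def count_errors(batch):
    """The per-batch computation: count log lines that mention an error."""
    return sum(1 for line in batch if "ERROR" in line)


log_lines = [
    "INFO start",
    "ERROR disk full",
    "INFO retry",
    "ERROR timeout",
    "INFO done",
]

# Each small batch is processed as soon as it is complete, so results
# become available while the stream is still being read.
errors_per_batch = [count_errors(batch) for batch in micro_batches(log_lines, 2)]
print(errors_per_batch)  # [1, 1, 0]
```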

Applications of PySpark

- PySpark is widely used by companies such as Amazon, eBay, IBM, Hive, and Shopify to analyze large datasets (petabytes of data) in real time, which would be impractical on a single machine.

- PySpark is used to train models on large datasets in complex machine learning workflows. It has a comprehensive API that helps to build scalable ML algorithms.

- Every day, huge volumes of data are produced. Big tech companies use PySpark to build scalable and resilient data processing systems.

- PySpark is used to perform day-to-day tasks such as data analysis, data transformation, manipulation, and ETL processes quickly.

Apache Spark vs PySpark: Key Differences

| Apache Spark | PySpark |
| --- | --- |
| Apache Spark is written in Scala. | PySpark is written in Python. |
| It is an extensive data processing framework. | It is a Python API for Spark that provides a user-friendly interface for engineers. |
| It has a steep learning curve. | It is easy to use and get started with. |
| It requires familiarity with Scala. | It requires a basic knowledge of Python. |
| It works as a processing engine for big data in parallel systems. | It works as an API model for Spark to collaborate and work with Python. |
| Spark is primarily used to handle complex and large-scale data analysis. | PySpark is used for quick data analysis and manipulation tasks. |
| It has a large and active community of users. | It has a relatively smaller community. |

Components of PySpark

PySpark runs on a distributed data processing system. It runs parallel jobs with the help of its components to execute and speed up the data processing. The Spark architecture includes:

1. Spark Core

Spark Core is the backbone of Spark's architecture, and all the other components are built on top of it. It provides the APIs that define RDDs and underpins the DataFrame API. It is in charge of critical processes like memory and storage management, task scheduling, and fault recovery.

2. Spark SQL

Spark SQL enables data engineers to work with structured and semi-structured data via SQL (Structured Query Language). It is widely used for data manipulation in ETL (Extract, Transform, and Load) operations and for analyzing historical data. Spark SQL allows you to access and query data from sources such as MySQL tables (over JDBC), Hive tables, and CSV, JSON, and Parquet files.

3. Spark Streaming

Spark provides scalable and fault-tolerant live data streaming for large amounts of data. Spark Streaming uses micro-batching, which processes incoming data in small batches, for example to analyze a live stream of log files from a web server.

One simple way to feed it is over a TCP socket, from which Spark reads and processes the incoming data.

4. Spark ML

Spark has a built-in machine learning library called MLlib, which includes common ML algorithms for regression, classification, clustering, and collaborative filtering. MLlib also provides tools for building ML pipelines.

In Spark, your data is spread across multiple nodes in a cluster, which makes it hard to analyze with single-machine ML libraries. MLlib's algorithms, such as K-means and bisecting K-means (a hierarchical clustering method), are designed to run on this distributed data and identify patterns and correlations between data points.

5. GraphX

With the GraphX library, Spark can also be used to analyze graph data. A graph consists of edges and vertices. In essence, every data point is represented by a vertex, and an edge is a line that connects any two vertices.

GraphX provides graph-based operations and algorithms, such as PageRank and connected components, for finding relationships between these data points. Note that GraphX itself has no Python API, so Python users typically use the separate GraphFrames package instead.
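To make the vertex-and-edge model concrete, here is a small plain-Python sketch that stores a graph as a list of vertices and a list of edges, then groups the vertices into connected components. It does not use GraphX or any Spark API. The sample names and the helper functions are hypothetical and chosen only for illustration.

```python
from collections import deque

vertices = ["Ann", "Bob", "Cid", "Dee", "Eve"]
edges = [("Ann", "Bob"), ("Bob", "Cid"), ("Dee", "Eve")]


def build_adjacency(vertices, edges):
    """Map every vertex to the set of vertices it shares an edge with."""
    adjacency = {v: set() for v in vertices}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    return adjacency


def connected_components(vertices, edges):
    """Group vertices that can reach each other, using breadth-first search."""
    adjacency = build_adjacency(vertices, edges)
    seen, components = set(), []
    for start in vertices:
        if start in seen:
            continue
        queue, component = deque([start]), []
        seen.add(start)
        while queue:
            vertex = queue.popleft()
            component.append(vertex)
            for neighbor in sorted(adjacency[vertex]):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(sorted(component))
    return components


components = connected_components(vertices, edges)
print(components)  # [['Ann', 'Bob', 'Cid'], ['Dee', 'Eve']]
```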

PySpark Installation: Step-by-Step

In this tutorial, we will run PySpark on a Windows machine. There are several ways to install PySpark. The first option is to manually install and configure Apache Spark on your operating system. This requires some prerequisites, like downloading Python and Java. You will also need to set up some environment variables because Spark, which is written in Scala, runs on the Java Virtual Machine (JVM). For someone who is just starting out, this process can be a little intimidating. For a comprehensive guide, you can follow this tutorial on the official Apache Spark website.

For now, we will use conda to set up a virtual environment for PySpark and run our code in Jupyter notebooks.

Installation

- pip is a Python package installer that allows you to install, upgrade, and manage software packages from the web. If you have not already installed pip, you can get it from here.

- Using the command prompt, install PySpark:

      pip install pyspark

- The Anaconda distribution provides popular data science libraries like NumPy, Pandas, and Scikit-learn, with others such as TensorFlow available through its package manager. With Anaconda, you can build multiple isolated environments for your Python applications. Download it here.

- Anaconda has a package manager called conda. To begin, we will set up a virtual environment for PySpark using conda:

      conda create --name pyspark_env python=3.10 -y
      # activate the pyspark environment:
      conda activate pyspark_env

Jupyter notebooks provide an interactive environment for writing and visualizing code locally. Let us install Jupyter as well.

    conda install -c conda-forge jupyter

Finally, we can open a new Jupyter notebook from the terminal:

    jupyter notebook

Initialize a Spark Session

Before you can write any code in Spark, you first need to start a SparkSession. This is the first step in any Spark application.

To initialize a SparkSession in PySpark, use the following code in your Jupyter notebook:

    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("pyspark_app").getOrCreate()

We have just created a spark object that can be used in our PySpark application to run data queries. This variable is the entry point to Spark for the rest of the session.

Running your first command

After initializing the SparkSession, we will import a few helpful functions from the pyspark.sql.functions module:

    from pyspark.sql.functions import col, when

This will help us perform data transformation operations on our dataframe columns.

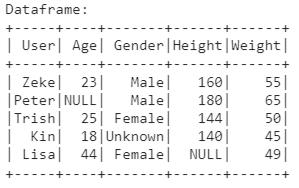

1. Creating a dataframe

First, let's create a simple list that contains basic information like the name, age, gender, height, and weight of some patients.

    data = [
        ("Zeke", 23, "Male", 160, 55),
        ("Peter", None, "Male", 180, 65),
        ("Trish", 25, "Female", 144, 50),
        ("Kin", 18, "Unknown", 140, 45),
        ("Lisa", 44, "Female", None, 49),
    ]

As you can see, there are some missing and unknown values, which will be addressed later.

We will convert this list into a PySpark dataframe, which is more structured and allows for data manipulation and analysis.

    df = spark.createDataFrame(data, ["User", "Age", "Gender", "Height", "Weight"])
    print("Dataframe:")
    df.show()

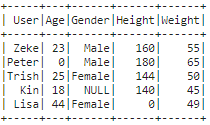

2. Data Cleaning

To handle missing and inconsistent values in our dataframe, we will use:

- fillna(): to replace missing values in the numeric columns with 0.

- withColumn(): to replace 'Unknown' values in the Gender column with null.

    df_clean = df.fillna({"Age": 0, "Height": 0, "Weight": 0}).withColumn(
        "Gender", when(col("Gender") == "Unknown", None).otherwise(col("Gender"))
    )
    df_clean.show()

3. Data Transformation

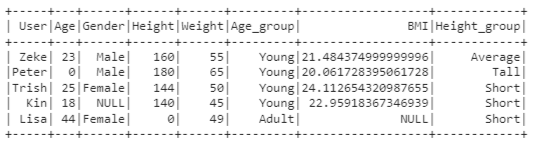

Let us gain some insight into our data by adding some new columns through data manipulation and transformation:

    # Age category
    df_transform = df_clean.withColumn("Age", col("Age").cast("int")).withColumn(
        "Age_group", when(col("Age") < 30, "Young").otherwise("Adult")
    )

The 'Age_group' column labels patients as "Young" or "Adult" based on their age.

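    # BMI (left as null when the height is 0, i.e. when it was missing)
    df_transform = df_transform.withColumn(
        "BMI",
        when(col("Height") > 0, col("Weight") / ((col("Height") / 100) ** 2)),
    )

The BMI column holds the Body Mass Index for each record, computed as the weight in kilograms divided by the square of the height in meters. Records whose height was missing (and filled with 0) get a null BMI instead of a division by zero.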
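As a quick sanity check on the BMI arithmetic, the same formula can be worked through in plain Python for one patient. The `bmi` helper below is a hypothetical function written for this check, not part of PySpark. It assumes, as in the data above, that weight is in kilograms and height is in centimeters, and that a height of 0 is the placeholder for a missing value.

```python
def bmi(weight_kg, height_cm):
    """Body Mass Index: weight in kg divided by the square of height in meters."""
    if not height_cm:
        # A missing (None) or zero height has no meaningful BMI.
        return None
    height_m = height_cm / 100
    return weight_kg / height_m ** 2


# Zeke: 55 kg and 160 cm, so 55 / (1.60 * 1.60) = 55 / 2.56
zeke_bmi = bmi(55, 160)
print(round(zeke_bmi, 2))  # 21.48

# Lisa's height was missing and filled with 0, so no BMI can be computed.
lisa_bmi = bmi(49, 0)
print(lisa_bmi)  # None
```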

    # Height category
    df_transform = df_transform.withColumn(
        "Height_group",
        when(col("Height") < 160, "Short")
        .when((col("Height") >= 160) & (col("Height") < 170), "Average")
        .otherwise("Tall"),
    )

Based on height ranges, the patients are categorized into ‘Short’, ‘Average’, or ‘Tall’ groups in the ‘Height_group’ column.
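A chain of when() conditions is checked in order, and the first one that matches decides the label. The plain-Python function below mirrors that logic so the expected groups can be checked by hand. `height_group` is a hypothetical helper for this illustration, and the heights are the ones in the cleaned data, where Lisa's missing height has been filled with 0.

```python
def height_group(height_cm):
    """Mirror of the when/otherwise chain: the first matching condition wins."""
    if height_cm < 160:
        return "Short"
    if 160 <= height_cm < 170:
        return "Average"
    return "Tall"


heights = {"Zeke": 160, "Peter": 180, "Trish": 144, "Kin": 140, "Lisa": 0}
groups = {name: height_group(height) for name, height in heights.items()}
print(groups)
# {'Zeke': 'Average', 'Peter': 'Tall', 'Trish': 'Short', 'Kin': 'Short', 'Lisa': 'Short'}
```

Lisa ends up in the 'Short' group only because her missing height was replaced with 0. That is worth keeping in mind whenever placeholder values feed into later transformations.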

    df_transform.show()

The new dataframe provides additional insights into the patient data.

This was a brief tutorial on how to perform data analysis with PySpark.

PySpark is used to build end-to-end machine learning and data pipeline projects. If you want to learn more about PySpark, you can start by reading the official documentation here.
