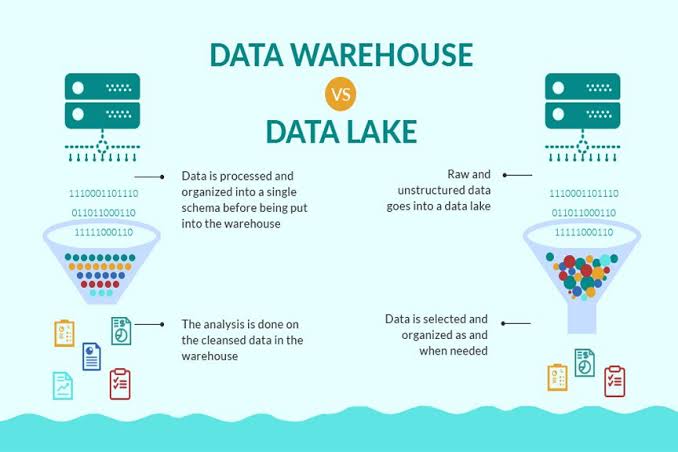

In the rapidly evolving landscape of data management, choosing the right storage solution is crucial for businesses seeking to harness the power of their data effectively. Two prominent contenders in this space are Data Lakes and Data Warehouses. Not sure how to choose between them? In this blog, we dive into both and explore their characteristics, use cases, integration challenges, security measures, and cost considerations.

An Overview of Data Lakes

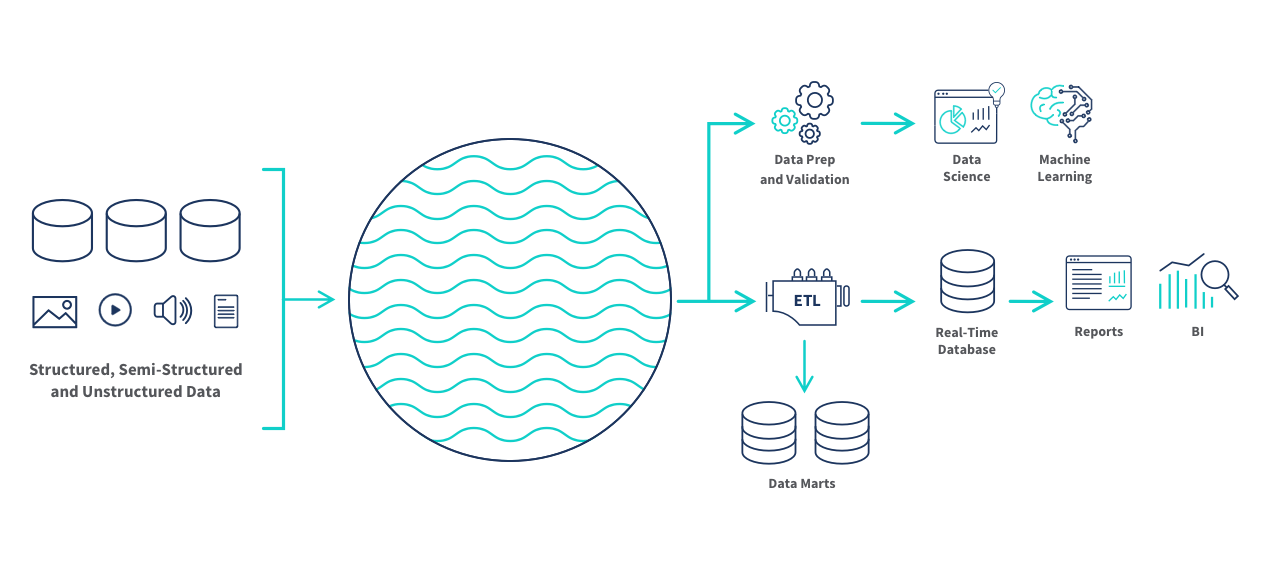

A Data Lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. Unlike traditional databases, Data Lakes accommodate raw, unprocessed data, making them a versatile storage solution for big data and analytics. The primary purpose of a Data Lake is to provide a single source of truth for all types of data.

Key Characteristics and Features

Scalability: Data Lakes can scale horizontally, accommodating vast amounts of data seamlessly.

Flexibility: They support various data types, from structured to semi-structured and unstructured data.

Cost-Effective Storage: Data Lakes offer cost-effective storage solutions, particularly for large volumes of raw data.
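
The flexibility described above is often called schema-on-read: data lands in its original form, and structure is applied only when someone reads it. Here is a minimal sketch of that idea using only the Python standard library. A local temporary folder stands in for the Data Lake, and the source names, file names, and helper functions (`land_raw`, `read_with_schema`) are made up for illustration.

```python
import csv
import io
import json
import tempfile
from pathlib import Path


def land_raw(lake_root: Path, source: str, filename: str, payload: str) -> Path:
    """Store a payload exactly as received under <lake_root>/raw/<source>/."""
    target_dir = lake_root / "raw" / source
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / filename
    target.write_text(payload, encoding="utf-8")
    return target


def read_with_schema(path: Path) -> list[dict]:
    """Interpret a raw file at read time, based on its extension."""
    text = path.read_text(encoding="utf-8")
    if path.suffix == ".json":
        # Semi-structured: one JSON object per line
        return [json.loads(line) for line in text.splitlines() if line.strip()]
    if path.suffix == ".csv":
        # Structured: the header row supplies the column names
        return list(csv.DictReader(io.StringIO(text)))
    # Unstructured text: wrap each line so downstream code sees records
    return [{"line": line} for line in text.splitlines()]


# A temporary folder plays the role of the lake in this sketch
lake = Path(tempfile.mkdtemp(prefix="lake_"))
events = land_raw(lake, "clickstream", "events.json",
                  '{"user": "u1", "action": "play"}\n{"user": "u2", "action": "pause"}\n')
orders = land_raw(lake, "orders", "orders.csv", "order_id,amount\n1,9.99\n2,4.50\n")
app_log = land_raw(lake, "app", "server.log", "INFO started\nERROR disk full\n")

event_records = read_with_schema(events)
order_records = read_with_schema(orders)
log_records = read_with_schema(app_log)
print(event_records, order_records, log_records, sep="\n")
```

Nothing is validated or converted when the files are written, which is what keeps ingestion cheap. The trade-off is that every reader has to know how to interpret each format.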

Examples:

Companies like Netflix and Uber utilize Data Lakes to store and analyze massive datasets, including user logs, customer interactions, and operational data.

Pros and Cons:

Pros: Versatility, scalability, cost-effectiveness.

Cons: Complexity in data governance, potential for data sprawl.

An Overview of Data Warehouses

A Data Warehouse, on the other hand, is a relational database optimized for analysis and reporting. It focuses on storing structured data and is designed for query and analysis rather than transaction processing. The primary purpose of a Data Warehouse is to provide a consolidated view of historical data from different sources.

Key Characteristics and Features

Structured Data Support: Data Warehouses excel in handling structured data, providing a consistent and organized format.

Query Performance: They are optimized for complex queries and reporting, making them ideal for business intelligence.

Data Aggregation: Data Warehouses often involve the aggregation of data for faster query results.
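
To make these characteristics concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a Data Warehouse. It is not a real warehouse engine, and the `sale_id` column and the `sales_by_category` summary table are assumptions added for the example. It shows a schema declared up front, a malformed row rejected at load time, and a pre-aggregated table built for reporting.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Schema-on-write: types and constraints are declared before any data arrives
conn.execute("""
    CREATE TABLE sales_data (
        sale_id INTEGER PRIMARY KEY,
        product_category TEXT NOT NULL,
        sales_amount REAL NOT NULL CHECK (sales_amount >= 0)
    )
""")

rows = [(1, "books", 12.5), (2, "books", 7.5), (3, "games", 30.0)]
conn.executemany("INSERT INTO sales_data VALUES (?, ?, ?)", rows)

# A row that violates the schema is rejected at load time
try:
    conn.execute("INSERT INTO sales_data VALUES (4, 'games', -5.0)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Pre-aggregate once so reports read a small summary table
conn.execute("""
    CREATE TABLE sales_by_category AS
    SELECT product_category, SUM(sales_amount) AS total_sales
    FROM sales_data
    GROUP BY product_category
""")
summary = dict(conn.execute("SELECT product_category, total_sales FROM sales_by_category"))
print(rejected, summary)
```

The summary table goes stale as new sales arrive, so in practice it would need to be refreshed on a schedule.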

Examples:

Companies like Amazon and Walmart use Data Warehouses to store and analyze transactional data, customer information, and sales data for strategic decision-making.

Pros and Cons:

Pros: Fast query performance, optimized for structured data analysis.

Cons: Scaling to very large datasets can be difficult or costly, and unstructured data is not handled efficiently.

Use Cases and Scenarios:

Data Lake Scenarios

Data Lakes are ideal for scenarios requiring the storage of raw, unstructured data. Consider a use case where a company collects and stores log files from various applications for future analysis.

```python
# Example Python code for storing log files in a Data Lake
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("DataLakeExample").getOrCreate()

# Read log files (each line becomes a row in a single "value" column)
logs = spark.read.text("s3://datalake/logs/*.log")

# Store raw log files in Data Lake
logs.write.parquet("s3://datalake/raw_logs")
```

Data Warehouse Scenarios

Data Warehouses shine when there’s a need for structured data analysis, such as running complex SQL queries for business intelligence purposes.

```sql
-- Example SQL query for aggregating sales data in a Data Warehouse
SELECT
    product_category,
    SUM(sales_amount) AS total_sales
FROM
    sales_data
GROUP BY
    product_category;
```

Integration Challenges

Challenges of Integrating Data Lakes:

Integrating Data Lakes into existing infrastructures can be challenging due to their flexibility. Ensuring data quality, metadata management, and governance becomes crucial.

```python
# Example Python code for integrating Data Lake with a data processing pipeline
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("DataLakeIntegration").getOrCreate()

# Read raw data from Data Lake
raw_data = spark.read.parquet("s3://datalake/raw_data")

# Apply transformations
processed_data = raw_data.filter("column_a > 0")

# Write processed data to Data Warehouse
# (the URL is a placeholder; the PostgreSQL JDBC driver must be on Spark's classpath)
processed_data.write.jdbc(
    url="jdbc:postgresql://your-database-url",
    table="processed_data",
    mode="overwrite",
)
```
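
Data quality and metadata management can start small. The sketch below, in plain Python, checks incoming records against a simple contract and then builds a catalog entry describing what was loaded. The required fields, the `event_id` field, and the helper names (`validate`, `catalog_entry`) are assumptions made for this example, not part of any particular tool.

```python
import json
from datetime import datetime, timezone

REQUIRED_FIELDS = {"event_id", "column_a"}  # hypothetical data contract


def validate(record: dict) -> list[str]:
    """Return a list of data-quality problems; empty means the record passes."""
    problems = [f"missing {field}" for field in sorted(REQUIRED_FIELDS - set(record))]
    value = record.get("column_a")
    if value is not None and not isinstance(value, (int, float)):
        problems.append("column_a is not numeric")
    return problems


def catalog_entry(dataset: str, records: list[dict], rejected: int) -> dict:
    """Build a metadata entry describing what was loaded."""
    fields = sorted({key for record in records for key in record})
    return {
        "dataset": dataset,
        "fields": fields,
        "row_count": len(records),
        "rejected_count": rejected,
        "loaded_at": datetime.now(timezone.utc).isoformat(),
    }


incoming = [
    {"event_id": 1, "column_a": 5},
    {"event_id": 2, "column_a": "oops"},  # wrong type
    {"column_a": 3},                      # missing event_id
]
good = [record for record in incoming if not validate(record)]
bad = [record for record in incoming if validate(record)]
entry = catalog_entry("raw_data", good, rejected=len(bad))
print(json.dumps(entry, indent=2))
```

Keeping the rejected count in the catalog entry gives governance teams a quick signal when an upstream source starts sending bad data.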

Data Warehouse Integration Strategies

Data Warehouses often integrate seamlessly with existing business intelligence tools and frameworks, but challenges can arise when handling diverse data sources.

```sql
-- Example SQL query for joining data from different sources in a Data Warehouse
SELECT
    customers.customer_name,
    orders.order_date,
    order_details.product_name
FROM
    customers
JOIN
    orders ON customers.customer_id = orders.customer_id
JOIN
    order_details ON orders.order_id = order_details.order_id;
```
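
Before a join like this can run, the diverse sources have to be brought into one schema. Here is a rough sketch of that loading step, with `sqlite3` standing in for the warehouse. The sample CSV export, the JSON feed, and its field names (`id`, `customer`, `date`) are invented for illustration.

```python
import csv
import io
import json
import sqlite3

# Two sources with different formats and naming conventions (sample data)
crm_csv = "customer_id,customer_name\n1,Ada\n2,Grace\n"
shop_json = ('[{"id": 10, "customer": 1, "date": "2024-01-05"},'
             ' {"id": 11, "customer": 2, "date": "2024-01-06"}]')

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT)")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT)")

# Source 1: CSV export, values arrive as strings and are cast on load
for row in csv.DictReader(io.StringIO(crm_csv)):
    conn.execute("INSERT INTO customers VALUES (?, ?)",
                 (int(row["customer_id"]), row["customer_name"]))

# Source 2: JSON feed with different field names, renamed to the warehouse schema
for item in json.loads(shop_json):
    conn.execute("INSERT INTO orders VALUES (?, ?, ?)",
                 (item["id"], item["customer"], item["date"]))

joined = conn.execute("""
    SELECT customers.customer_name, orders.order_date
    FROM customers
    JOIN orders ON customers.customer_id = orders.customer_id
    ORDER BY orders.order_id
""").fetchall()
print(joined)
```

The renaming and casting done in the two loops is where most integration effort tends to go: each new source needs its own mapping onto the shared schema.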

Security and Governance

Security Measures in Data Lakes:

Data Lakes require robust security measures to protect sensitive information. Access controls, encryption, and auditing are essential components.

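```python
# Illustrative sketch of access-control policies for a Data Lake.
# Note: Spark has no built-in call for applying access controls; in practice
# these rules are enforced by the storage or catalog layer (for example, IAM
# policies on the bucket). The is_allowed helper below is hypothetical.

# Define access control policies: action -> groups allowed to perform it
access_control_list = {
    "read": ["analyst_group"],
    "write": ["data_engineer_group"],
}


def is_allowed(group, action):
    """Return True if the group may perform the action on the Data Lake."""
    return group in access_control_list.get(action, [])


# Check a request before touching the Data Lake
print(is_allowed("analyst_group", "read"))   # True
print(is_allowed("analyst_group", "write"))  # False
```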
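
Auditing, the third component mentioned above, can be sketched in a similar spirit. One common technique is a hash-chained log, where each entry's hash covers the previous entry so that later edits become detectable. The example below is a simplified illustration using only the standard library. The user names and the `append_event` and `verify` helpers are hypothetical, and a production system would normally rely on the audit logging offered by its storage platform.

```python
import hashlib
import json


def append_event(log: list[dict], user: str, action: str, path: str) -> dict:
    """Append an access event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"user": user, "action": action, "path": path, "prev_hash": prev_hash}
    digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()
    entry = {**body, "hash": digest}
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or removed entry breaks the chain."""
    prev_hash = "0" * 64
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_event(audit_log, "analyst_1", "read", "s3://datalake/raw_logs")
append_event(audit_log, "engineer_1", "write", "s3://datalake/raw_logs")
intact = verify(audit_log)

audit_log[0]["user"] = "someone_else"  # simulate tampering with an old entry
tampered_detected = not verify(audit_log)
print(intact, tampered_detected)
```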

Governance and Compliance in Data Warehouses

Data Warehouses focus on maintaining data integrity and ensuring compliance with industry regulations. Version control and audit trails are common governance practices.

```sql
-- Example SQL for creating an audit trail in a Data Warehouse
-- (MySQL-style trigger syntax; trigger support varies between engines)
CREATE TABLE audit_trail (
    operation_type VARCHAR(20),
    table_name VARCHAR(50),
    changed_at TIMESTAMP,
    user_name VARCHAR(255)
);

-- Trigger to log changes to the audit trail
CREATE TRIGGER log_changes
AFTER INSERT ON sales_data
FOR EACH ROW
INSERT INTO audit_trail (operation_type, table_name, changed_at, user_name)
VALUES ('INSERT', 'sales_data', CURRENT_TIMESTAMP, CURRENT_USER);
```
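
To try the audit-trail idea without a warehouse at hand, the same pattern can be run against SQLite from Python. This is only a sketch: SQLite requires the trigger body to be wrapped in `BEGIN ... END` and has no `CURRENT_USER`, so the user column is left out here, and the `changed_at` column name is an assumption.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales_data (
        product_category TEXT,
        sales_amount REAL
    );

    CREATE TABLE audit_trail (
        operation_type TEXT,
        table_name TEXT,
        changed_at TEXT
    );

    -- SQLite wraps the trigger body in BEGIN ... END
    CREATE TRIGGER log_changes
    AFTER INSERT ON sales_data
    FOR EACH ROW
    BEGIN
        INSERT INTO audit_trail (operation_type, table_name, changed_at)
        VALUES ('INSERT', 'sales_data', CURRENT_TIMESTAMP);
    END;
""")

# Each insert into sales_data should leave one row in audit_trail
conn.execute("INSERT INTO sales_data VALUES ('books', 12.5)")
conn.execute("INSERT INTO sales_data VALUES ('games', 30.0)")

audit_rows = conn.execute("SELECT operation_type, table_name FROM audit_trail").fetchall()
print(audit_rows)
```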

Cost Considerations

Cost Structure for Data Lakes:

Data Lakes offer cost-effective storage solutions, but costs can accumulate with data processing and retrieval. Optimizing storage and utilizing serverless computing can help manage expenses.

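```python
# Example Python code for optimizing storage costs in a Data Lake
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("DataLakeCostOptimization").getOrCreate()

# Read the raw data that needs compacting
raw_data = spark.read.parquet("s3://datalake/raw_data")

# Compact data before storage: coalesce(1) merges everything into a single
# partition, so one file is written (use a larger number for big datasets)
compacted_data = raw_data.coalesce(1)

# Write compacted data to Data Lake
compacted_data.write.parquet("s3://datalake/optimized_data")
```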
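
The effect of compaction and compression can be seen on a small scale without Spark. The sketch below merges many small log files into one gzip-compressed file and compares the sizes. The file names, the sample log line, and the `compact_logs` helper are made up for this example, and real savings depend heavily on the data.

```python
import gzip
import tempfile
from pathlib import Path


def compact_logs(source_dir: Path, target: Path) -> int:
    """Merge every *.log file in source_dir into one gzip file; return line count."""
    lines_written = 0
    with gzip.open(target, "wt", encoding="utf-8") as out:
        for log_file in sorted(source_dir.glob("*.log")):
            with log_file.open(encoding="utf-8") as src:
                for line in src:
                    out.write(line)
                    lines_written += 1
    return lines_written


# Create 20 small sample log files in a temporary folder
workdir = Path(tempfile.mkdtemp(prefix="lake_cost_"))
raw_dir = workdir / "raw_logs"
raw_dir.mkdir()
for i in range(20):
    (raw_dir / f"app_{i:02d}.log").write_text(
        "INFO request handled in 12 ms\n" * 50, encoding="utf-8")

compacted = workdir / "compacted.log.gz"
total_lines = compact_logs(raw_dir, compacted)

raw_bytes = sum(f.stat().st_size for f in raw_dir.glob("*.log"))
compacted_bytes = compacted.stat().st_size
print(f"{total_lines} lines: {raw_bytes} bytes raw, {compacted_bytes} bytes compacted")
```

Fewer, larger files also mean fewer storage requests per query, which matters on object stores that bill per request.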

Comparative Analysis of Data Warehouse Costs

Data Warehouses may incur higher costs for storage and query processing. Utilizing data compression and partitioning can help optimize costs.

```sql
-- Example SQL query for optimizing storage in a Data Warehouse
-- (illustrative syntax; partitioning and storage clauses differ between engines)
CREATE TABLE sales_data_partitioned
PARTITION BY (year, month)
AS
SELECT
    *
FROM
    sales_data;

-- Example SQL query for using columnar storage in a Data Warehouse
CREATE TABLE sales_data_columnar
WITH (storage_format = 'columnar')
AS
SELECT
    *
FROM
    sales_data;
```
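
Why does partitioning reduce cost? A query that filters on the partition columns only has to scan the matching partitions. The following sketch imitates that behaviour with a `year=/month=` folder layout and plain CSV files. The layout, the sample rows, and the helper names (`write_partitioned`, `total_sales`) are assumptions for illustration, not how any specific warehouse stores data internally.

```python
import csv
import tempfile
from pathlib import Path


def write_partitioned(root: Path, rows: list[dict]) -> None:
    """Append each row to <root>/year=YYYY/month=MM/part.csv."""
    for row in rows:
        part_dir = root / f"year={row['year']}" / f"month={row['month']:02d}"
        part_dir.mkdir(parents=True, exist_ok=True)
        part_file = part_dir / "part.csv"
        is_new = not part_file.exists()
        with part_file.open("a", newline="", encoding="utf-8") as fh:
            writer = csv.DictWriter(fh, fieldnames=["product_category", "sales_amount"])
            if is_new:
                writer.writeheader()
            writer.writerow({"product_category": row["product_category"],
                             "sales_amount": row["sales_amount"]})


def total_sales(root: Path, year: int, month: int) -> tuple[float, int]:
    """Sum sales for one month, opening only that month's partition."""
    files_read = 0
    total = 0.0
    for part_file in (root / f"year={year}" / f"month={month:02d}").glob("*.csv"):
        files_read += 1
        with part_file.open(newline="", encoding="utf-8") as fh:
            total += sum(float(r["sales_amount"]) for r in csv.DictReader(fh))
    return total, files_read


root = Path(tempfile.mkdtemp(prefix="sales_"))
write_partitioned(root, [
    {"year": 2023, "month": 12, "product_category": "books", "sales_amount": 10.0},
    {"year": 2024, "month": 1, "product_category": "books", "sales_amount": 12.5},
    {"year": 2024, "month": 1, "product_category": "games", "sales_amount": 30.0},
    {"year": 2024, "month": 2, "product_category": "games", "sales_amount": 5.0},
])
january_total, files_opened = total_sales(root, 2024, 1)
print(january_total, files_opened)
```

Three partition files exist, but the January query opens only one of them. Where queries are billed by the amount of data scanned, that is where the savings come from.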

Conclusion

In the ever-expanding realm of data storage, the choice between Data Lakes and Data Warehouses is pivotal. Each has its strengths and weaknesses and caters to specific business needs. Understanding the nuances of these solutions, from their use cases to integration challenges, security measures, and cost considerations, empowers organizations to make informed decisions aligned with their data management strategies. As technology continues to evolve, so will the landscape of data storage, making it imperative for businesses to stay abreast of developments and adopt the solutions that best serve their requirements.
