A Pod in Kubernetes is a group of one or more containers that run on a node and share that node's resources (CPU, RAM). How large a pod should be, however, is a frequent topic of discussion and dispute. Kubernetes uses a system of requests and limits to define how much of each resource a pod's containers get.

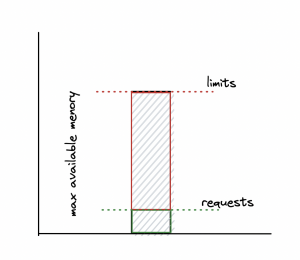

What is a request? The software we buy comes with minimum requirements for the computer that runs it. Similarly, a request specifies the minimum resources a pod's containers need, and the pod is only placed on a node that can provide them. What is a limit? A limit caps the resources a container may use: a container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is killed and, depending on its restart policy, restarted. In other words, you should size your Kubernetes pods for what they actually need, and no bigger.

There is quite a bit to it. This article takes a close look at requests and limits and discusses how to set these parameters well.

What happens when you set requests and limits on your pod?

When you configure a Pod, you can specify how much of each resource a container needs. CPU and memory (RAM) are the most commonly specified resources, although others exist.

When you specify resource requests for the containers in a Pod, the kube-scheduler uses this information to decide which node to place the Pod on. When you set a resource limit for a container, the kubelet enforces it, so the running container cannot use more of that resource than the limit you set. The kubelet also reserves at least the requested amount of that resource specifically for the container.

Requests and limits

Each container in a pod can have two kinds of resource settings: requests and limits. A request specifies the minimum amount of a resource the container needs. If your application needs at least 256 MB of RAM to work well, that is the value to put in the request. Kubernetes then places the container only on a node that still has 256 MB of unreserved memory, so that amount is always set aside for it. The container may use more if the node has memory to spare and no limit stops it.

A limit, on the other hand, sets the maximum amount of a resource the container can use. Your application may need at least 256 MB of memory, but you may also want to make sure it never uses more than 1 GB in total. In that case the request is 256 MB and the limit is 1 GB. The application always has at least 256 MB available and can grow up to 1 GB, but no further. If it tries to go beyond its limit, Kubernetes either slows it down (CPU is throttled) or stops it (the container is killed for using too much memory).
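Two API rules follow from this relationship, and the sketch below encodes them using the 256 MB / 1 GB example. First, a request larger than its limit is rejected. Second, if you set a limit but omit the request, Kubernetes uses the limit as the request, unless an admission-time mechanism such as a LimitRange supplies a default. The function name `effective_resources` and the byte constants are illustrative only. They are not part of any Kubernetes client library.

```python
from typing import Optional, Tuple

MB = 10**6  # decimal megabyte, matching the "M" suffix
GB = 10**9


def effective_resources(
    request: Optional[int], limit: Optional[int]
) -> Tuple[Optional[int], Optional[int]]:
    """Return the (request, limit) pair Kubernetes would work with, in bytes.

    Simplified sketch of two API rules:
    * a limit without a request makes the request default to the limit;
    * a request larger than its limit is invalid.
    """
    if request is None and limit is not None:
        request = limit  # omitted request defaults to the limit
    if request is not None and limit is not None and request > limit:
        raise ValueError(f"request {request} must be <= limit {limit}")
    return request, limit


print(effective_resources(256 * MB, 1 * GB))  # at least 256 MB, at most 1 GB
print(effective_resources(None, 1 * GB))      # request defaults to the 1 GB limit
```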

Resource Types

CPU and memory (RAM) are the resource types most commonly specified in requests and limits. For example:

```yaml
resources:
  requests:
    cpu: "50m"
    memory: "120M"
  limits:
    cpu: "50m"
    memory: "150M"
```

Together, CPU and memory are called compute resources, or simply resources. Compute resources are measurable quantities that can be requested, allocated, and consumed. They are distinct from API resources, which are objects such as Pods and Services that you read and modify through the Kubernetes API server.

Resource requests and limits of Pod and container

For each container, you can specify resource limits and requests, including the following:

- spec.containers[].resources.limits.cpu

- spec.containers[].resources.limits.memory

- spec.containers[].resources.limits.hugepages-<size>

- spec.containers[].resources.requests.cpu

- spec.containers[].resources.requests.memory

- spec.containers[].resources.requests.hugepages-<size>

You can define requests and limits only for individual containers. It is still useful to think about a Pod's overall requests and limits, because a Pod can contain several containers. For a given resource type, the Pod's request (or limit) is the sum of that resource's requests (or limits) across all the containers in the Pod.
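The summing rule is easy to express in code. This is a minimal sketch that assumes quantities have already been converted to millicores and bytes. It adds up each resource type across a Pod's containers. The container names `web` and `sidecar` and their values are hypothetical. The sketch deliberately ignores init containers and Pod overhead, which Kubernetes also factors into a Pod's effective request.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MiB = 2**20


@dataclass
class Container:
    """Per-container settings: CPU in millicores, memory in bytes."""
    name: str
    requests: Dict[str, int] = field(default_factory=dict)
    limits: Dict[str, int] = field(default_factory=dict)


def pod_totals(containers: List[Container], kind: str) -> Dict[str, int]:
    """Sum 'requests' or 'limits' per resource type across all containers."""
    totals: Dict[str, int] = {}
    for container in containers:
        for resource, amount in getattr(container, kind).items():
            totals[resource] = totals.get(resource, 0) + amount
    return totals


# Hypothetical two-container Pod.
pod = [
    Container("web", requests={"cpu": 250, "memory": 64 * MiB},
              limits={"cpu": 500, "memory": 256 * MiB}),
    Container("sidecar", requests={"cpu": 100, "memory": 32 * MiB},
              limits={"cpu": 200, "memory": 64 * MiB}),
]
print("Pod requests:", pod_totals(pod, "requests"))
print("Pod limits:  ", pod_totals(pod, "limits"))
```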

Resource units in Kubernetes

CPU Units

Imagine you have a single-core machine and want to run two containers or pods on it. Ideally, you would divide the CPU equally between them. But how do you divide a single core? To answer that, you need to understand Kubernetes CPU units.

In Kubernetes, one CPU unit corresponds to one physical or virtual core, and each CPU is divided into 1000 millicores, so requests can be fractional. Setting spec.containers[].resources.requests.cpu to 0.5 requests half as much CPU time as a request of 1.0 CPU (one full core). On the single-core machine above, each of the two containers could request 0.5 CPU.

For CPU resource units, the expression 0.1 is the same as the expression 100m, which can be read as “one hundred millicpu.” People sometimes say “one hundred millicores,” which is the same thing.
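A small conversion function makes the equivalence concrete. This is a simplified sketch, and the name `cpu_to_millicores` is our own. It accepts only plain decimal values and the `m` suffix, which covers the forms discussed here, and it uses `Decimal` to avoid floating-point rounding.

```python
from decimal import Decimal


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as "2", "0.5" or "250m" to millicores.

    Simplified: only plain decimals and the "m" (milli) suffix are handled.
    """
    q = quantity.strip()
    if q.endswith("m"):
        return int(q[:-1])           # already in millicores
    return int(Decimal(q) * 1000)    # whole CPUs -> millicores


for q in ["1", "0.5", "500m", "0.1", "100m"]:
    print(f"{q:>5} -> {cpu_to_millicores(q)}m")

# Splitting one core evenly between two containers:
print(cpu_to_millicores("1") // 2, "millicores each")
```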

Memory Units

Memory limits and requests are measured in bytes. You can express memory as a plain integer or as a fixed-point number with one of these suffixes: E, P, T, G, M, k. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki. For instance, the following represent roughly the same value:

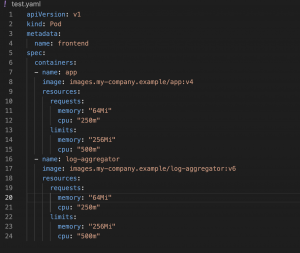
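To see why such values line up, here is a minimal parser sketch that applies the decimal and binary suffix multipliers. `memory_to_bytes` is a hypothetical helper, not a Kubernetes API. The sample quantities are the ones the Kubernetes documentation uses for this comparison. Pay attention to the lowercase `m`: it means milli, so `400m` of memory would be 0.4 bytes. That is almost always a typo for `400Mi` or `400M`.

```python
import re
from decimal import Decimal

# Multipliers for memory suffixes: decimal (SI) and power-of-two (IEC).
SUFFIXES = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "m": Decimal("0.001"),  # milli: legal, but rarely what you want for memory
}
_QUANTITY = re.compile(r"([0-9]+(?:\.[0-9]+)?(?:e[0-9]+)?)([A-Za-z]*)")


def memory_to_bytes(quantity: str) -> Decimal:
    """Convert a memory quantity such as "129M", "123Mi" or "129e6" to bytes.

    Simplified: no signs, and only a lowercase "e" is treated as an exponent.
    """
    match = _QUANTITY.fullmatch(quantity.strip())
    if not match or match.group(2) not in SUFFIXES:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return Decimal(number) * SUFFIXES[suffix]


for q in ["128974848", "129e6", "129M", "128974848000m", "123Mi"]:
    print(f"{q:>14} = {int(memory_to_bytes(q)):,} bytes")
```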

Container resources example

Consider a Pod that holds two containers. Each container requests 0.25 CPU and 64 MiB (2^26 = 67,108,864 bytes) of memory, and each has a limit of 0.5 CPU and 256 MiB of memory. Summed over both containers, the Pod requests 0.5 CPU and 128 MiB of memory, and it can use up to 1 CPU and 512 MiB.

What happens behind the scenes when you set requests and limits?

When you create a Pod, the Kubernetes scheduler chooses a node for it to run on. Each node has a maximum capacity for each resource type, which is the amount of CPU and memory it can provide to Pods. The scheduler ensures that, for each resource type, the sum of the resource requests of the scheduled containers is less than the node's capacity. Even if actual memory or CPU usage on a node is low, the scheduler will not place a Pod there if this capacity check fails. This protects against a resource shortage on the node when usage later rises, for example during a daily peak in request rate.
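The capacity check can be sketched as a first-fit loop. This is a deliberate simplification. The real kube-scheduler filters candidate nodes and then scores them, rather than taking the first one that fits. `Node`, `fits` and `schedule` are illustrative names. What the sketch does capture is that only requests are compared with each node's allocatable capacity; actual usage never enters the check.

```python
from dataclasses import dataclass
from typing import List, Optional

MiB = 2**20
GiB = 2**30


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int    # millicores available to Pods
    allocatable_mem: int      # bytes available to Pods
    requested_cpu_m: int = 0  # sum of CPU requests of Pods already placed here
    requested_mem: int = 0    # sum of memory requests of Pods already placed here


def fits(node: Node, cpu_m: int, mem: int) -> bool:
    """True if the Pod's requests fit in what the node has not yet promised."""
    return (node.requested_cpu_m + cpu_m <= node.allocatable_cpu_m
            and node.requested_mem + mem <= node.allocatable_mem)


def schedule(nodes: List[Node], cpu_m: int, mem: int) -> Optional[str]:
    """Place the Pod on the first node that fits; None means it stays Pending."""
    for node in nodes:
        if fits(node, cpu_m, mem):
            node.requested_cpu_m += cpu_m
            node.requested_mem += mem
            return node.name
    return None


nodes = [
    # node-a may be nearly idle, but 1800m of its 2000m is already requested.
    Node("node-a", 2000, 4 * GiB, requested_cpu_m=1800, requested_mem=1 * GiB),
    Node("node-b", 4000, 8 * GiB, requested_cpu_m=1000, requested_mem=2 * GiB),
]
print(schedule(nodes, 500, 256 * MiB))   # skips node-a, lands on node-b
print(schedule(nodes, 4000, 256 * MiB))  # no node has 4000m unrequested
```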

The Linux container runtime normally sets up kernel cgroups to apply and enforce the limits you specified.

A CPU limit sets a hard ceiling on how much CPU time a container can use. During each scheduling interval (time slice), the Linux kernel checks whether this limit has been exceeded. If it has, the kernel waits before allowing the cgroup to resume execution. A CPU request, by contrast, acts as a weighting. On a contended system, workloads with larger CPU requests are allocated more CPU time than workloads with smaller requests.
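On cgroup v1 nodes, the kubelet turns these settings into two kernel parameters, which the container runtime then applies. The CPU request becomes a `cpu.shares` weight (1024 shares per CPU), and the CPU limit becomes a CFS quota per 100 ms period. The sketch below mirrors that arithmetic as we understand it, and the function names are our own. cgroup v2 nodes use `cpu.weight` and `cpu.max` with a different mapping, so treat this as illustrative only.

```python
CPU_PERIOD_US = 100_000  # default CFS period: 100 ms
SHARES_PER_CPU = 1024
MIN_SHARES = 2
MIN_QUOTA_US = 1_000     # smallest quota the sketch will hand out (1 ms)


def request_to_shares(request_m: int) -> int:
    """Relative weight used when CPU is contended (cgroup v1 cpu.shares)."""
    return max(MIN_SHARES, request_m * SHARES_PER_CPU // 1000)


def limit_to_quota(limit_m: int, period_us: int = CPU_PERIOD_US) -> int:
    """Hard cap in microseconds per period (cgroup v1 cpu.cfs_quota_us).

    Returns -1, meaning "no quota", when no CPU limit is set (limit_m <= 0).
    """
    if limit_m <= 0:
        return -1
    return max(MIN_QUOTA_US, limit_m * period_us // 1000)


# A container with requests.cpu=250m and limits.cpu=500m:
shares = request_to_shares(250)
quota = limit_to_quota(500)
print(f"cpu.shares={shares}, quota={quota}us per {CPU_PERIOD_US}us period")
```

With a 50 ms quota per 100 ms period, a container that wants a full core runs for 50 ms and is then throttled until the next period begins. The shares value only matters when containers compete for CPU.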

If a container tries to allocate more memory than its memory limit, the Linux out-of-memory (OOM) subsystem activates and usually stops one of the processes in the container. If the killed process is the container's main process (PID 1) and the container is restartable, Kubernetes restarts the container. If a container's memory use exceeds its request and the node it runs on becomes short of memory overall, the Pod the container belongs to may be evicted. CPU behaves differently. A container may or may not be allowed to exceed its CPU limit for extended periods, but container runtimes do not kill Pods or containers for excessive CPU usage.
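The rules in this paragraph can be summarized as a small decision function. It is a simplification for illustration, and the name `likely_outcome` and its parameters are hypothetical. Real eviction also weighs Pod priority and how far each Pod exceeds its requests. An OOM kill also does not always hit the container's main process.

```python
from typing import Optional


def likely_outcome(resource: str, demand: int, request: int,
                   limit: Optional[int], node_short_of_memory: bool = False) -> str:
    """Rough summary of what happens when a container tries to use `demand`.

    resource is "cpu" (millicores) or "memory" (bytes); limit=None means no limit.
    """
    over_limit = limit is not None and demand > limit
    if resource == "cpu":
        # CPU is compressible: the kernel throttles, nothing is killed.
        return "throttled" if over_limit else "runs"
    if resource == "memory":
        if over_limit:
            return "OOM-killed, restarted if restartable"
        if node_short_of_memory and demand > request:
            return "Pod may be evicted"
        return "runs"
    raise ValueError(f"unknown resource: {resource}")


MiB = 2**20
print(likely_outcome("cpu", 800, 250, 500))
print(likely_outcome("memory", 300 * MiB, 64 * MiB, 256 * MiB))
print(likely_outcome("memory", 200 * MiB, 64 * MiB, 256 * MiB, node_short_of_memory=True))
```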

How to determine if you are using correct requests and limits?

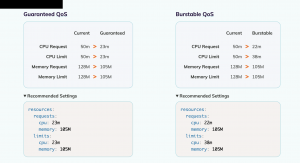

You can only check whether a pod is hitting its limits after you have configured them. You can, however, estimate good values beforehand by load testing or stress testing the pod. Getting this right means avoiding two failure modes. If requests and limits are too high, you waste capacity that other workloads could use. If limits are too low, a normally behaving application will appear to be using excessive memory or CPU, and Kubernetes will kill it (memory) or throttle it (CPU). That makes your applications unstable and can lead to downtime. Finding the ideal values is hard, and Fairwinds' Goldilocks tool aims to help organizations size Kubernetes resources "just right".
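Once you run a load test, you still need a rule for turning the measurements into numbers. The sketch below is one simple heuristic of our own and is not the Goldilocks or VPA algorithm; VPA's recommender works from decaying usage histograms. It takes a high percentile of observed usage as the request and adds headroom above the observed peak for the limit. The sample values, the 90th percentile and the 1.5x headroom are all hypothetical, so pick them to match your workload.

```python
import math
from typing import List, Tuple


def nearest_rank_percentile(samples: List[int], pct: int) -> int:
    """Smallest sample such that at least pct% of samples are <= it (nearest rank)."""
    ordered = sorted(samples)
    rank = max(1, -(-pct * len(ordered) // 100))  # integer ceil(pct * n / 100)
    return ordered[rank - 1]


def suggest_request_and_limit(samples: List[int], pct: int = 90,
                              headroom: float = 1.5) -> Tuple[int, int]:
    """Hypothetical heuristic: request = pct-th percentile, limit = peak * headroom."""
    request = nearest_rank_percentile(samples, pct)
    limit = math.ceil(max(samples) * headroom)
    return request, limit


# Hypothetical memory readings (MiB) taken during a load test.
observed_mib = [210, 230, 250, 240, 260, 300, 280, 255, 245, 235]
request, limit = suggest_request_and_limit(observed_mib)
print(f"requests.memory: {request}Mi, limits.memory: {limit}Mi")
```

The percentile keeps a few outliers from inflating the request. The headroom on the limit leaves room for peaks the load test did not reproduce.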

Goldilocks creates Vertical Pod Autoscaler (VPA) objects, and its dashboard serves their recommendations through a web interface. The VPA controller stack includes a recommendation engine that suggests values based on how your pods are currently using their resources. VPA's main purpose is to apply those values for you automatically, but the Goldilocks authors at Fairwinds prefer not to rely on that mode. In particular, they run the Horizontal Pod Autoscaler, which does not work well alongside VPA. Goldilocks therefore uses only VPA's recommendation engine to advise you on setting resource requests and limits. Because these recommendations reflect observed usage, they are only as good as the traffic your pods have seen.
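To see what Goldilocks works with, it helps to look at a VPA object's status. Suppose you have saved one as JSON, for example with `kubectl get vpa NAME -o json`. The sketch below pulls out the per-container bounds from `status.recommendation.containerRecommendations`. The container name and quantities in the sample are made up; only the field layout follows the VPA API.

```python
import json
from typing import Dict

# Hypothetical VPA object, trimmed to the fields used below.
SAMPLE_VPA = json.loads("""
{
  "status": {
    "recommendation": {
      "containerRecommendations": [
        {
          "containerName": "app",
          "lowerBound": {"cpu": "25m", "memory": "200Mi"},
          "target": {"cpu": "50m", "memory": "256Mi"},
          "upperBound": {"cpu": "400m", "memory": "1Gi"}
        }
      ]
    }
  }
}
""")


def container_recommendations(vpa: dict) -> Dict[str, Dict[str, Dict[str, str]]]:
    """Map container name -> {"lowerBound"|"target"|"upperBound": {resource: quantity}}."""
    recs = (vpa.get("status", {})
               .get("recommendation", {})
               .get("containerRecommendations", []))
    return {
        rec["containerName"]: {key: rec[key]
                               for key in ("lowerBound", "target", "upperBound")
                               if key in rec}
        for rec in recs
    }


for name, bounds in container_recommendations(SAMPLE_VPA).items():
    print(name, "target:", bounds["target"])
```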

How to install Goldilocks?

The easiest way to install Goldilocks is to use a Helm chart. You can do this by running:

```shell
helm repo add fairwinds-stable https://charts.fairwinds.com/stable
helm install goldilocks fairwinds-stable/goldilocks --namespace goldilocks --create-namespace --set installVPA=true
```

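This installs the VPA, the Goldilocks controller, and the dashboard. Goldilocks only creates VPA objects, and therefore only shows recommendations, for namespaces labeled goldilocks.fairwinds.com/enabled=true, so add that label to each namespace you want analyzed. You can then reach the dashboard through port forwarding:

```shell
kubectl -n goldilocks port-forward svc/goldilocks-dashboard 8080:80
```

After that, open http://localhost:8080 in your browser.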

Conclusion

Setting requests and limits is critical for your Kubernetes workloads. If every pod were as large as the node it runs on, you would lose much of the benefit of packing containers onto shared nodes, so you should know how much capacity each pod reserves. This kind of monitoring also informs cluster management decisions, such as whether to add nodes or use larger ones.

Defining requests and limits for containers is difficult, though. Without a reliable way to extrapolate from observed usage, getting them right can be a real challenge. Combining a metrics server with the Vertical Pod Autoscaler (VPA) reduces the guesswork in setting requests and limits. Goldilocks builds on VPA to suggest requests and limits for your workloads.

If you follow this approach and revisit the recommendations as your workloads change, your Pods are far more likely to keep appropriate requests and limits.
