Deep learning has completely changed how we manage and analyze real-world data. There are many kinds of deep learning applications, such as those that can arrange a user’s photo library, suggest books, spot fraud, and understand the environment around an autonomous vehicle.

Here’s how to use AWS Lambda with your own custom-trained models to take a simplified, serverless approach to inference at scale. Along the way, we’ll walk through some of the core AWS services you can use to run serverless inference.

We’ll explore image classification, for which several effective open-source models exist. Convolutional neural networks and fully connected neural networks, two of the most popular network types in deep learning, can both be used for image classification.

We’ll demonstrate where to put your trained model in AWS and how to package your code so that it may run when an inference command is sent via AWS Lambda.

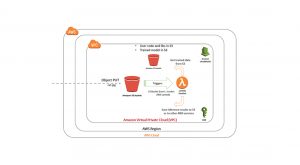

Overall Architecture

AWS Architecture

From a process standpoint, developing and deploying a deep learning system should resemble developing and deploying conventional software.

The illustration that follows shows one potential development life cycle:

The figure shows the steps of a typical software development process, from the conception of an idea through the final deployment of models in production. The development phase often involves many fast iterations that call for frequent changes to the environment. Typically, this affects the kind and amount of resources used while developing software or models. Agile development requires the ability to quickly build, rebuild, and tear down the environment, so that infrastructure changes can keep pace with rapid changes in the software being developed.

The ability to manage infrastructure through code, known as infrastructure as code (IaC), is one of the prerequisites for agile development and accelerated innovation.

The Continuous Integration and Continuous Delivery (CI/CD) methodology includes the automation of software design management, building, and deployment. Although this piece won’t cover the specifics of well-organized CI/CD pipelines, every DevOps team that wants repeatable, automated processes and agile development and deployment should keep them in mind.

AWS provides a range of tools and services that simplify common development tasks. An environment created with automation code can be copied quickly and easily, for example, to build staging and production systems from the template of the development environment.

Additionally, through fully managed services for streaming, batching, queueing, monitoring and alerting, real-time event-driven systems, serverless computing, and many others, AWS significantly simplifies the design of complex solutions that draw on a variety of computer science and software engineering concepts. We’ll investigate serverless computing for deep learning, which can spare you the hassle of provisioning and managing servers. Data scientists and software developers won’t have to worry about incidental complexity, such as ensuring adequate processing power or retrying after system failures, because AWS services take care of these duties.

We will focus on a staging-like environment that mimics a production system.

Amazon S3-based use case

For this use case, we’ll simulate the process of storing an image in an Amazon Simple Storage Service (S3) bucket. The S3 bucket where objects are stored can broadcast an object PUT event to the rest of the AWS Cloud ecosystem. Most of the time, either an AWS Lambda function or an Amazon Simple Notification Service (SNS) notification is used to invoke user code. For simplicity, we’ll use a Lambda function triggered by an S3 object PUT event. As you may have noticed, we are dealing with some complex ideas while requiring very little actual work from a scientist or developer.

The trained machine learning model, built with TensorFlow in Python, is stored in an S3 bucket. As part of our scenario, we’ll upload a photo to a bucket that has event notifications enabled. Our Lambda function subscribes to these S3 bucket notification events.
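To make the event flow concrete, here is a minimal Python 3 sketch of how a Lambda handler might pull the bucket name and object key out of an S3 notification event. The helper name `extract_s3_objects` is an illustrative assumption and is not taken from the bundled inference code; the `Records`/`s3` layout is the standard S3 notification structure.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs for every record in an S3 notification event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them.
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    # Entry point that Lambda calls when the bucket emits an object event.
    for bucket, key in extract_s3_objects(event):
        print("New image to classify: s3://{}/{}".format(bucket, key))
    return {"processed": len(event.get("Records", []))}
```

Keeping the parsing in its own function makes it easy to exercise locally with a hand-written event dictionary before deploying.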

For this post, all cloud infrastructure will be built using AWS CloudFormation, which provides a fast and flexible interface for creating and launching AWS services. The same resources can also be created manually with the AWS Management Console or the AWS Command Line Interface (AWS CLI).

Deep learning code

For image classification, let’s look at deploying a powerful pre-trained Inception-v3 model.

Inception-v3 Architecture

The Inception-v3 architecture shown here uses color to denote the different layer types. You don’t need to understand every aspect of the model. The key point is that this is a very deep network that would take an enormous amount of time and resources (data and compute) to train from scratch.

Create a Python 2.7 virtualenv or an Anaconda environment and install TensorFlow for CPU.

Locate classify_image.py in the root of the zip file and run it in your shell:

python classify_image.py

This will download an Inception-v3 model that has already been trained and use a sample picture (of a panda) to validate the implementation.

It will create a structure similar to the following:

classify_image.py

imagenet/

--classify_image_graph_def.pb

--imagenet_2012_challenge_label_map_proto.pbtxt

--imagenet_synset_to_human_label_map.txt

--LICENSE
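The label files are what turn the model's numeric output into readable names. As a rough illustration, the sketch below parses imagenet_synset_to_human_label_map.txt under the assumption that each line holds a synset ID, a tab, and a comma-separated list of names; `parse_synset_labels` is a hypothetical helper, not a function from classify_image.py.

```python
def parse_synset_labels(lines):
    """Map synset IDs (e.g. 'n02510455') to human-readable label strings."""
    labels = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        # Assumed format: '<synset id><TAB><comma-separated names>'
        synset_id, _, human = line.partition("\t")
        labels[synset_id] = human
    return labels


# Possible usage once the files have been downloaded:
# with open("imagenet/imagenet_synset_to_human_label_map.txt") as f:
#     labels = parse_synset_labels(f)
```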

Next, take this model file and the necessary compiled Python packages and create a bundle that AWS Lambda can execute.

Because the model file is large, we load it from Amazon S3 during AWS Lambda inference execution. You may have noticed that the provided inference code (classify_image.py) downloads the model outside of the handler method. We do this to benefit from AWS Lambda container reuse. Any code outside of the handler function runs just once, when the container is created, and its results stay in memory across calls to the same Lambda container, which makes subsequent calls faster.
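The container-reuse pattern can be sketched as follows. This is only an illustration: `load_model` is a stand-in for the real work (downloading the graph file from S3 and building the TensorFlow graph), and `LOAD_COUNT` exists purely to show that module-level code runs once per container.

```python
LOAD_COUNT = 0


def load_model():
    """Placeholder for downloading the model from S3 and loading it."""
    global LOAD_COUNT
    LOAD_COUNT += 1
    return {"name": "inception-v3 (placeholder)"}


# Module-level code runs once, when the container starts (the "cold" start).
MODEL = load_model()


def lambda_handler(event, context):
    # On warm invocations MODEL is already in memory, so nothing is reloaded.
    return {"model": MODEL["name"], "loads": LOAD_COUNT}
```

Calling `lambda_handler` repeatedly in the same process leaves `LOAD_COUNT` at 1, which mirrors what happens across warm invocations of one Lambda container.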

Pre-warming means invoking your AWS Lambda function ahead of time, before your first production run. This avoids the AWS Lambda “cold start” problem, in which large models must be loaded from S3 on each “cold” Lambda instantiation. Once AWS Lambda is up and running, it is best to keep it warm to ensure a quick response for the next inference run. Invoking the function once every few minutes, even with a simple ping notification, keeps it warm for when it needs to perform an inference task.
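One way to handle such pings is to have the handler recognize them and return early. The sketch below assumes the ping comes from a scheduled rule, whose events carry `"source": "aws.events"`; `is_warmup_event` is a hypothetical helper name, and the inference step is left as a comment.

```python
def is_warmup_event(event):
    """True for scheduled 'ping' events rather than real S3 notifications."""
    return isinstance(event, dict) and event.get("source") == "aws.events"


def lambda_handler(event, context):
    if is_warmup_event(event):
        # Nothing to classify; the call only keeps the container and model alive.
        return {"warmup": True}
    records = event.get("Records", [])
    # ... run inference on each S3 record here ...
    return {"warmup": False, "records": len(records)}
```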

At this point, you need to zip the code and all necessary packages together. Normally, packages with compiled components must be built on an Amazon Linux EC2 instance before they can be used with AWS Lambda.
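If you prefer to script the zipping step, a small Python sketch using only the standard library could look like this. The function name `build_bundle` and the directory layout are assumptions; the output path should live outside the source directory so the archive does not try to include itself.

```python
import os
import zipfile


def build_bundle(source_dir, zip_path):
    """Zip every file under source_dir, storing paths relative to source_dir."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as bundle:
        for root, _dirs, files in os.walk(source_dir):
            for name in files:
                full_path = os.path.join(root, name)
                # Lambda expects the handler module at the root of the archive.
                arcname = os.path.relpath(full_path, source_dir)
                bundle.write(full_path, arcname)
    return zip_path
```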

Deploying with AWS Lambda

Here are the steps to get started:

- Download the DeepLearningAndAI-Bundle.zip.

- Unzip it and copy the files into your Amazon S3 bucket, which we’ll call dl-model-bucket. The folder contains everything you need to run the demo:

  - classify_image.py

  - classify_image_graph_def.pb

  - deeplearning-bundle.zip

  - DeepLearning_Serverless_CF.json

  - Image 1 for testing

  - Image 1 for testing

- Run the CloudFormation script to create all necessary AWS resources, including your test S3 bucket. Let’s call it deeplearning-test-bucket (you will be asked for another name if this one is taken).

- Upload an image to your test bucket.

- Go to Amazon CloudWatch Logs for your Lambda inference function and validate the inference results.

Here is a step-by-step guide to running the CloudFormation script:

- Choose the Create new stack button on the AWS CloudFormation console.

- Provide the link to the CloudFormation script (JSON) in the ‘Specify an Amazon S3 template URL’ field.

- Choose Next.

- Enter the required values: the CloudFormation stack name, the code, the test bucket name, and the bucket that holds the model.

- Choose Next.

- Skip the Options page.

- Go to the Review page.

- Accept the acknowledgment at the bottom of the page.

- Choose the Create button.

- The status changes to CREATE_IN_PROGRESS and, shortly after the stack is complete, to CREATE_COMPLETE.
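The console steps above can also be scripted. The following sketch assembles the arguments for CloudFormation's `create_stack` call in boto3; the parameter keys passed in are hypothetical and must match whatever the template actually declares. `CAPABILITY_IAM` corresponds to the acknowledgment checkbox (a template with explicitly named IAM resources would need `CAPABILITY_NAMED_IAM` instead).

```python
def build_create_stack_args(stack_name, template_url, parameters):
    """Assemble keyword arguments for CloudFormation's create_stack call."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in sorted(parameters.items())
        ],
        # Equivalent to accepting the IAM acknowledgment in the console.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def create_stack(args):
    # Imported lazily so the helper above can be tested without AWS access.
    import boto3

    return boto3.client("cloudformation").create_stack(**args)
```

A call might look like `create_stack(build_create_stack_args("dl-inference", template_url, {"TestBucketName": "deeplearning-test-bucket"}))`, where `template_url` points at your uploaded DeepLearning_Serverless_CF.json and `TestBucketName` is an assumed parameter name.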

The CloudFormation script has built the whole solution, including the AWS Identity and Access Management (IAM) role, the DeepLearning inference Lambda function, and the S3 bucket permissions that trigger the Lambda function when an object is PUT into the test bucket.
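To give a feel for how a bucket-to-Lambda trigger is typically declared, here is a template fragment written as a Python dictionary and printed as JSON. It is a sketch under assumptions, not the contents of DeepLearning_Serverless_CF.json: the logical IDs (`InferenceFunction`, `InvokePermission`, `TestBucket`) and the `TestBucketName` parameter are hypothetical, and the Lambda function and IAM role resources are omitted.

```python
import json

template_fragment = {
    "Parameters": {"TestBucketName": {"Type": "String"}},
    "Resources": {
        # Lets S3 invoke the function (the function resource itself is omitted).
        "InvokePermission": {
            "Type": "AWS::Lambda::Permission",
            "Properties": {
                "Action": "lambda:InvokeFunction",
                "FunctionName": {"Ref": "InferenceFunction"},
                "Principal": "s3.amazonaws.com",
                "SourceArn": {"Fn::Sub": "arn:aws:s3:::${TestBucketName}"},
            },
        },
        # Bucket that notifies the function on every object PUT.
        "TestBucket": {
            "Type": "AWS::S3::Bucket",
            "DependsOn": "InvokePermission",
            "Properties": {
                "BucketName": {"Ref": "TestBucketName"},
                "NotificationConfiguration": {
                    "LambdaConfigurations": [
                        {
                            "Event": "s3:ObjectCreated:Put",
                            "Function": {"Fn::GetAtt": ["InferenceFunction", "Arn"]},
                        }
                    ]
                },
            },
        },
    },
}

print(json.dumps(template_fragment, indent=2))
```

Building the source ARN from the bucket name parameter, rather than referencing the bucket resource, is a common way to avoid a circular dependency between the bucket and the permission.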

The new DeepLearning Lambda function may be found in the AWS Lambda service. AWS CloudFormation has properly set all of the required parameters and has included the environment variables required for inference.

A new test bucket should also have been created. To put our deep learning inference capabilities to the test, upload an image to that S3 bucket: deeplearning-test-bucket.

Getting inference results

To run the code, simply upload any picture to the Amazon S3 bucket created by the CloudFormation script. This triggers our inference Lambda function.

The example below shows how to submit a test picture to our test bucket.
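If you would rather upload from a script than from the console, a minimal sketch with boto3's `upload_file` might look like the following. The helper name and the file name in the usage comment are illustrative assumptions; the S3 client is passed in so the function can be tried with a stub.

```python
import os


def upload_test_image(s3_client, local_path, bucket):
    """Upload local_path to bucket under its file name and return the key."""
    key = os.path.basename(local_path)
    s3_client.upload_file(local_path, bucket, key)
    return key


# Possible usage (requires boto3 and AWS credentials):
# import boto3
# upload_test_image(boto3.client("s3"), "panda.jpg", "deeplearning-test-bucket")
```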

The S3 bucket has now triggered our inference Lambda function. In the console, choose Monitoring, then View Logs, from the AWS Lambda service screen. When working with inference results, keep in mind that they should be saved in a persistent store, such as Amazon DynamoDB or Amazon OpenSearch Service, where you can easily index photos and their labels.
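As one possible shape for persisted results, the sketch below builds a DynamoDB item from a list of (label, score) predictions and writes it through a boto3 `Table` object. The attribute names (`image_id`, `labels`) and the table are assumptions for illustration; scores are converted to `Decimal` because the boto3 resource API does not accept Python floats.

```python
from decimal import Decimal


def build_result_item(bucket, key, predictions):
    """Build a DynamoDB item from (label, score) prediction pairs."""
    return {
        "image_id": "s3://{}/{}".format(bucket, key),
        "labels": [
            {"label": label, "score": Decimal(str(round(score, 5)))}
            for label, score in predictions
        ],
    }


def save_result(table, item):
    # 'table' is expected to behave like boto3.resource("dynamodb").Table(...).
    table.put_item(Item=item)
    return item["image_id"]
```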

As long as the container is still “warm,” subsequent calls will merely perform inference (the model is already in memory), decreasing overall runtime. The screenshot below depicts consecutive Lambda function runs.

Tips for deep learning in a serverless environment

Using a serverless environment simplifies the deployment of your development and production code. The steps for packaging your code and its dependencies differ by runtime environment, so study them carefully to avoid problems during first-time deployment. Python is a popular language among data scientists for building machine learning models. To maximize performance on AWS Lambda, Python libraries that rely on legacy C and Fortran code should be built on Amazon Elastic Compute Cloud (EC2) using an Amazon Linux Amazon Machine Image (AMI), and the built files then exported. This has already been done for this blog article, so you are free to use those libraries to create your final deployment package. Pre-compiled Lambda packages are also commonly available online.

Model training often requires intensive computation and costly GPUs on specialized hardware. Fortunately, inference requires far less computing power than training, which makes it practical to run on CPUs and on the Lambda serverless compute model. If you need GPUs for inference, container services such as Amazon ECS or Kubernetes give you more control over your inference environment.

As you shift to serverless architectures for your project, you’ll quickly learn about the benefits and drawbacks of this style of computing:

- Serverless significantly simplifies the usage of computing infrastructure, avoiding the complexity of managing a VPC, subnets, security, building and deploying Amazon EC2 servers, and so on.

- AWS handles capacity for you.

- Exception handling – AWS handles retries and storing problematic data/messages in Amazon SQS/Amazon SNS for later processing.

- All logs are collected and stored within Amazon CloudWatch Logs.

- Performance can be monitored using the AWS X-Ray tool.

If you intend to process larger files or objects, use an input stream rather than loading the full content into memory. Very large files (up to 20 GB) can often be handled in an AWS Lambda function with standard file/object streaming techniques. Use compression to optimize network I/O, because it dramatically reduces file/object size in most cases (especially with CSV and JSON encoding).
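The streaming idea can be illustrated with the standard library alone. In this sketch, `count_lines_streaming` (a hypothetical helper) decompresses a gzip stream incrementally and processes it line by line, so memory use stays roughly constant. An in-memory buffer stands in for the object body here; the assumption is that the body returned by an S3 `get_object` call can be passed in the same way because it exposes `read()`.

```python
import gzip
import io


def count_lines_streaming(binary_stream):
    """Count newline-delimited records in a gzip-compressed byte stream."""
    count = 0
    # GzipFile decompresses incrementally as the loop pulls lines from the stream.
    with gzip.GzipFile(fileobj=binary_stream, mode="rb") as unzipped:
        for _line in unzipped:
            count += 1
    return count


# Local stand-in for a compressed CSV object stored in S3.
payload = io.BytesIO(gzip.compress(b"a,b\n1,2\n3,4\n"))
print(count_lines_streaming(payload))
```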

Debugging a Lambda function differs from standard local host/laptop-based development. We recommend that you first write and debug your code locally, where debugging is simple, and then promote it to a Lambda function. Moving from a laptop environment to AWS Lambda requires only a few minor changes, in areas such as:

- Replacing the use of local file references

- Reading environment variables

- Printing to console

- Debugging

- Runtime instrumentation
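The first two items can be kept small by hiding the difference behind a helper. The sketch below is one hypothetical approach: a single environment variable (here called `MODEL_LOCATION`, an assumed name) holds either a local path or an `s3://` URI, and the code branches on which one it sees.

```python
import os


def split_s3_uri(uri):
    """Return (bucket, key) for 's3://bucket/key', or None for a local path."""
    prefix = "s3://"
    if not uri.startswith(prefix):
        return None
    bucket, _, key = uri[len(prefix):].partition("/")
    return bucket, key


def get_model_location():
    # Same code path on a laptop and in Lambda; only the environment differs.
    return os.environ.get("MODEL_LOCATION", "imagenet/classify_image_graph_def.pb")
```

On a laptop the variable can stay unset so the local file is used, while the Lambda configuration sets it to the S3 location of the model.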

Deploying to AWS Lambda is simple if your code and dependencies are contained in a single ZIP file. CI/CD tools can make this phase easier and, more importantly, fully automated. When code changes are detected, a CI/CD pipeline automatically builds the code and deploys the output to the desired environment, based on your setup. AWS Lambda also supports versioning and aliasing, allowing you to switch quickly between multiple iterations of your Lambda function. This can be useful if you work across several environments, such as research, development, staging, and production. When you publish a version, it becomes immutable, preventing unwanted modifications to your code.
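Versioning and aliasing can be scripted as well. The sketch below publishes the current code as a new version and repoints an alias at it, using the boto3 Lambda client calls `publish_version` and `update_alias`. The helper name `promote` is an assumption, and the alias is assumed to exist already (a new alias would need `create_alias` instead).

```python
def promote(lambda_client, function_name, alias):
    """Publish the current code as a new version and point alias at it."""
    version = lambda_client.publish_version(FunctionName=function_name)["Version"]
    lambda_client.update_alias(
        FunctionName=function_name, Name=alias, FunctionVersion=version
    )
    return version


# Possible usage (requires boto3 and AWS credentials):
# import boto3
# promote(boto3.client("lambda"), "DeepLearning_Lambda", "staging")
```

The function name in the usage comment is a placeholder; use the name that CloudFormation gave your inference function.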
